In a recent ASP.NET MVC application I’m involved with, we had a late-in-the-process request to handle Content Negotiation: returning output based on the HTTP Accept header of the incoming request. This is standard behavior in ASP.NET Web API, but ASP.NET MVC doesn’t support it out of the box.

Another reason this came up in discussion is last week’s announcement of ASP.NET vNext, which seems to indicate that ASP.NET Web API is not going to be ported to the cloud version of vNext but will instead be replaced by a combined version of MVC and Web API. While it’s not clear which API features will show up in this new framework, it’s pretty clear that ASP.NET MVC style syntax will be the standard for the new combined HTTP processing framework.

Why Negotiated Content?

Content negotiation is one of the key features of Web API, even though it’s a relatively simple thing. It’s also something that’s missing in MVC, and once you get used to having your content returned automatically based on the Accept header, it’s hard to go back to manually creating separate methods for different output types, as you’ve had to do with Microsoft server technologies all along. (Yes, yes, I know other frameworks, including my own, have done this for years, but as an in-the-box feature this is relatively new with Web API.)

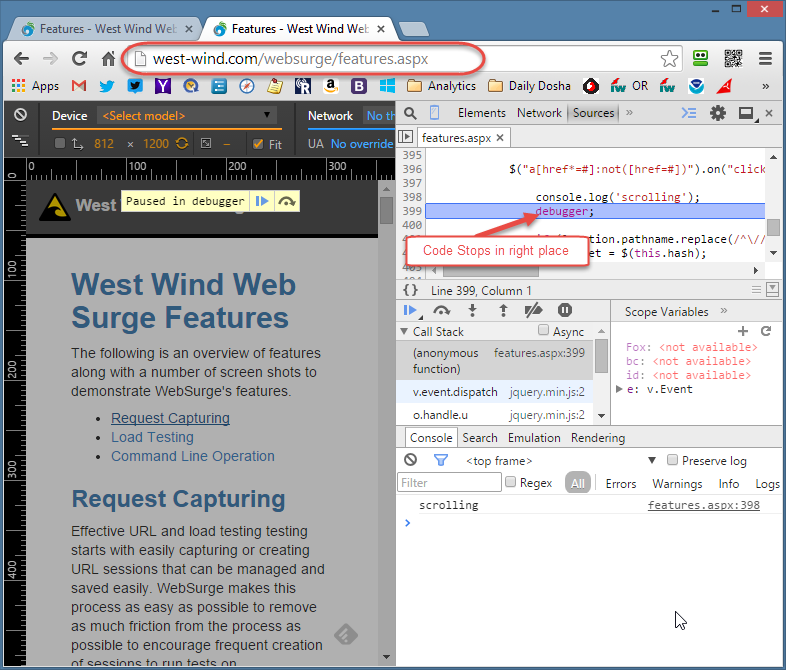

As a quick review, Accept Header content negotiation works off the request’s HTTP Accept header:

```http
POST http://localhost/mydailydosha/Editable/NegotiateContent HTTP/1.1
Content-Type: application/json
Accept: application/json
Host: localhost
Content-Length: 76
Pragma: no-cache

{ ElementId: "header", PageName: "TestPage", Text: "This is a nice header" }
```

If I make this request I would expect to get back a JSON result based on my application/json Accept header. To request XML I’d just change the Accept header:

```http
Accept: text/xml
```

and now I’d expect the response to come back as XML. Of course, this only works with media types that the server can produce. In my case I need to handle JSON, XML, HTML (using Views) and plain text. HTML results might need more than just a data return: you probably also need to specify a View to render the data into, either by naming the view explicitly or by using some sort of convention that automatically locates a matching view. Today ASP.NET MVC doesn’t support this sort of automatic content switching out of the box.

Unfortunately, our application started out primarily as an AJAX backend that was implemented with JSON only, so there are lots of JSON results like this:

```csharp
[Route("Customers")]
public ActionResult GetCustomers()
{
    return Json(repo.GetCustomers(), JsonRequestBehavior.AllowGet);
}
```

These work fine, but they are of course JSON specific. Then a couple of weeks ago a requirement came in: an old desktop application also needs to consume this API, and it has to use XML because there’s no JSON parser available for it. Oops, stuck with JSON in this case.

While it would have been easy to add XML-specific methods, I figured it was easier to add basic content negotiation, and that’s what I show in this post.

Missteps – IResultFilter, IActionFilter

My first attempt at this was to use IResultFilter or IActionFilter, which look like they would be ideal for modifying result content after it’s been generated, using OnResultExecuted() or OnActionExecuted(). Filters are great because they can look globally at all controller methods, or at individual methods that are marked up with the filter’s attribute. But it turns out these filters don’t work for raw POCO result values from action methods.

What we wanted to do for API calls is get back to using plain .NET types as results rather than ActionResults. That is, you write a method that doesn’t return an ActionResult but a standard .NET type, like this:

```csharp
public Customer UpdateCustomer(Customer cust)
{
    // … do stuff to customer :-)
    return cust;
}
```

Unfortunately, both OnResultExecuted and OnActionExecuted receive an MVC ContentResult instance created from the POCO object. MVC basically takes any non-ActionResult return value and turns it into a ContentResult by converting the value to a string with .ToString(). Ugh. The ContentResult itself doesn’t contain the original value, which is lost AFAIK with no way to retrieve it. So there’s no way to access the raw customer object in the example above. Bummer.
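To make the loss concrete, here’s a small self-contained sketch that mimics that conversion. It assumes, based on my reading of the MVC 5 source, that the action invoker builds the ContentResult with `Convert.ToString(value, CultureInfo.InvariantCulture)`; `PocoResultConversion` and this `Customer` class are hypothetical stand-ins, not MVC types.

```csharp
using System;
using System.Globalization;

// Hypothetical POCO standing in for the Customer type in the example above.
// Note that it does not override ToString().
public class Customer
{
    public int Id { get; set; }
    public string Name { get; set; }
}

// Hypothetical helper that mimics (assumption) how MVC turns a
// non-ActionResult return value into ContentResult.Content.
public static class PocoResultConversion
{
    public static string ToContent(object actionReturnValue)
    {
        // Only this string survives; the original object is not kept anywhere.
        return Convert.ToString(actionReturnValue, CultureInfo.InvariantCulture);
    }
}
```

Because `Customer` doesn’t override `ToString()`, the content ends up being just the type name, and nothing a filter sees afterwards can get the customer data back. Simple values like an `int` survive only because their string form happens to be the value itself.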

Creating a NegotiatedResult

This leaves mucking around with custom ActionResults. ActionResults are MVC’s standard way to return action method results: you specify that you would like your result rendered in a specific format. Common ActionResults are ViewResult (i.e. View(viewName, model)), JsonResult, RedirectResult, etc. They are effective, they work well for testing, and they’re the ‘standard’ interface for returning results from actions. The main problem is that you’re explicitly saying which output type you want. That works well for many things, but sometimes you want your result to be negotiated.

My first crack at a solution is a simple ActionResult subclass that looks at the Accept header and writes the output based on it. I need to support JSON, XML, HTML and plain text, so effectively four media types: application/json, text/xml, text/html and text/plain. Everything else is written out as a plain string, which effectively returns whatever .ToString() returns.
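The NegotiatedResult class in this post checks for each of these types with `Contains()` in a fixed order and ignores q-values. If you need something closer to the HTTP spec, here’s a sketch of a hypothetical `AcceptNegotiator.SelectMediaType()` helper (not part of NegotiatedResult) that parses the Accept header, honors q-values and `type/*` or `*/*` wildcards, and picks the best type the server supports, breaking ties in the server’s preferred order.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical helper, not part of NegotiatedResult: picks the best server-supported
// media type for an Accept header, honoring q-values and type/* or */* wildcards.
public static class AcceptNegotiator
{
    // Returns the best entry from 'supported' (listed in server preference order),
    // or null when the client accepts none of them.
    public static string SelectMediaType(string acceptHeader, IReadOnlyList<string> supported)
    {
        // Treat a missing Accept header as "accept anything".
        if (string.IsNullOrWhiteSpace(acceptHeader))
            acceptHeader = "*/*";

        // Parse "type/subtype;q=0.8, ..." into (range, q) pairs; q defaults to 1.0.
        var ranges = new List<(string Range, double Q)>();
        foreach (string part in acceptHeader.Split(','))
        {
            string[] segments = part.Split(';');
            string range = segments[0].Trim().ToLowerInvariant();
            if (range.Length == 0)
                continue;

            double q = 1.0;
            foreach (string parameter in segments.Skip(1))
            {
                string[] kv = parameter.Split('=');
                if (kv.Length == 2 &&
                    kv[0].Trim().Equals("q", StringComparison.OrdinalIgnoreCase) &&
                    double.TryParse(kv[1].Trim(), NumberStyles.Float, CultureInfo.InvariantCulture, out double parsed))
                {
                    q = parsed;
                }
            }
            ranges.Add((range, q));
        }

        string best = null;
        double bestQ = 0.0;
        foreach (string candidate in supported)
        {
            string type = candidate.ToLowerInvariant();
            string typeWildcard = type.Substring(0, type.IndexOf('/') + 1) + "*";

            // The most specific matching range decides the candidate's q-value:
            // an exact match beats type/*, which beats */*.
            int bestSpecificity = -1;
            double candidateQ = 0.0;
            foreach (var (range, rangeQ) in ranges)
            {
                int specificity = range == type ? 2
                                : range == typeWildcard ? 1
                                : range == "*/*" ? 0
                                : -1;
                if (specificity > bestSpecificity)
                {
                    bestSpecificity = specificity;
                    candidateQ = rangeQ;
                }
            }

            // q=0 means "not acceptable"; ties keep the earlier (server-preferred) type.
            if (bestSpecificity >= 0 && candidateQ > bestQ)
            {
                best = candidate;
                bestQ = candidateQ;
            }
        }
        return best;
    }
}
```

With the four types above as `supported`, an AJAX-style header such as `application/json, text/javascript, */*; q=0.01` selects `application/json`, a typical browser header that lists `text/html` first selects `text/html`, and a request with no Accept header gets the server’s first choice. Inside ExecuteResult() you could pass it `request.Headers["Accept"]` and switch on the returned type instead of chaining `Contains()` checks.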

Here’s what the NegotiatedResult usage looks like:

```csharp
public ActionResult GetCustomers()
{
    return new NegotiatedResult(repo.GetCustomers());
}

public ActionResult GetCustomer(int id)
{
    return new NegotiatedResult("Show", repo.GetCustomer(id));
}
```

There are two constructor overloads: one that takes just the raw result value and a second that also accepts a view name. The second version renders the specified Razor view only if text/html is requested; otherwise the raw data is returned. This is useful in applications where an HTML front end doubles as an API endpoint using the same model data you send to the View. For the application I mentioned above this was another real use case we needed to address, so it was a welcome side effect of creating a custom ActionResult.

There’s also an extension method that directly attaches a Negotiated() method to the controller using the same syntax:

```csharp
public ActionResult GetCustomers()
{
    return this.Negotiated(repo.GetCustomers());
}

public ActionResult GetCustomer(int id)
{
    return this.Negotiated("Show", repo.GetCustomer(id));
}
```

Using either of these mechanisms you can now return JSON, XML, HTML or plain text results depending on the Accept header sent. Send application/json and you get just the customer JSON data. Ditto for text/xml and XML data. Pass text/html in the Accept header and the "Show.cshtml" Razor view is rendered with the result as its model, producing the final HTML output.

While this isn’t as clean as passing just POCO objects back as I had intended originally, this approach fits better with how MVC action methods are intended to be used and we get the bonus of being able to specify a View to render (optionally) for HTML.

How Does It Work?

An ActionResult implementation is pretty straightforward. You inherit from ActionResult and implement the ExecuteResult() method to send your output to the ASP.NET output stream. Custom ActionResults are an easy way to do post-processing on ASP.NET MVC controller actions just before the content is sent to the output stream, assuming your action returns that specific result.

Here’s the full code to the NegotiatedResult class (you can also check it out on GitHub):

```csharp
using System;
using System.Linq;
using System.Text;
using System.Web;
using System.Web.Mvc;
using System.Xml;
using System.Xml.Serialization;
using Newtonsoft.Json;

/// <summary>
/// Returns a content negotiated result based on the Accept header.
/// Minimal implementation that works with JSON and XML content,
/// can also optionally return a view with HTML.
/// </summary>
/// <example>
/// // model data only
/// public ActionResult GetCustomers()
/// {
///     return new NegotiatedResult(repo.Customers.OrderBy(c => c.Company));
/// }
/// // optional view for HTML
/// public ActionResult GetCustomers()
/// {
///     return new NegotiatedResult("List", repo.Customers.OrderBy(c => c.Company));
/// }
/// </example>
public class NegotiatedResult : ActionResult
{
    /// <summary>
    /// Data stored to be 'serialized'. Public
    /// so it's potentially accessible in filters.
    /// </summary>
    public object Data { get; set; }

    /// <summary>
    /// Optional name of the HTML view to be rendered
    /// for HTML responses
    /// </summary>
    public string ViewName { get; set; }

    public static bool FormatOutput { get; set; }

    static NegotiatedResult()
    {
        // Indent output when debugging is enabled; guard against a missing
        // HttpContext (for example when used outside of a request)
        FormatOutput = HttpContext.Current != null && HttpContext.Current.IsDebuggingEnabled;
    }

    /// <summary>
    /// Pass in data to serialize
    /// </summary>
    /// <param name="data">Data to serialize</param>
    public NegotiatedResult(object data)
    {
        Data = data;
    }

    /// <summary>
    /// Pass in data and an optional view for HTML views
    /// </summary>
    /// <param name="viewName">Name of the view rendered for text/html requests</param>
    /// <param name="data">Data to serialize or to pass as the view's model</param>
    public NegotiatedResult(string viewName, object data)
    {
        Data = data;
        ViewName = viewName;
    }

    public override void ExecuteResult(ControllerContext context)
    {
        if (context == null)
            throw new ArgumentNullException("context");

        HttpResponseBase response = context.HttpContext.Response;
        HttpRequestBase request = context.HttpContext.Request;

        // AcceptTypes is null when no Accept header is sent, and entries can carry
        // parameters such as ";q=0.9", so strip those before matching
        string[] acceptTypes = (request.AcceptTypes ?? new string[0])
            .Select(t => t.Split(';')[0].Trim().ToLowerInvariant())
            .ToArray();

        // Look for specific content types
        if (acceptTypes.Contains("text/html"))
        {
            response.ContentType = "text/html";
            if (!string.IsNullOrEmpty(ViewName))
            {
                var viewData = context.Controller.ViewData;
                viewData.Model = Data;

                var viewResult = new ViewResult
                {
                    ViewName = ViewName,
                    MasterName = null,
                    ViewData = viewData,
                    TempData = context.Controller.TempData,
                    ViewEngineCollection = ((Controller)context.Controller).ViewEngineCollection
                };
                viewResult.ExecuteResult(context.Controller.ControllerContext);
            }
            else
                response.Write(Data);
        }
        else if (acceptTypes.Contains("text/plain"))
        {
            response.ContentType = "text/plain";
            response.Write(Data);
        }
        else if (acceptTypes.Contains("application/json"))
        {
            // set the JSON content type so clients don't see the text/html default
            response.ContentType = "application/json";
            using (JsonTextWriter writer = new JsonTextWriter(response.Output))
            {
                var settings = new JsonSerializerSettings();
                if (FormatOutput)
                    settings.Formatting = Newtonsoft.Json.Formatting.Indented;

                JsonSerializer serializer = JsonSerializer.Create(settings);
                serializer.Serialize(writer, Data);
                writer.Flush();
            }
        }
        else if (acceptTypes.Contains("text/xml"))
        {
            response.ContentType = "text/xml";
            if (Data != null)
            {
                using (var writer = new XmlTextWriter(response.OutputStream, new UTF8Encoding()))
                {
                    if (FormatOutput)
                        writer.Formatting = System.Xml.Formatting.Indented;

                    XmlSerializer serializer = new XmlSerializer(Data.GetType());
                    serializer.Serialize(writer, Data);
                    writer.Flush();
                }
            }
        }
        else
        {
            // just write data as a plain string
            response.Write(Data);
        }
    }
}

/// <summary>
/// Extends Controller with Negotiated() ActionResult that does
/// basic content negotiation based on the Accept header.
/// </summary>
public static class NegotiatedResultExtensions
{
    /// <summary>
    /// Return content-negotiated content of the data based on Accept header.
    /// Supports:
    ///    application/json - using JSON.NET
    ///    text/xml         - Xml as XmlSerializer XML
    ///    text/html        - as text, or an optional View
    ///    text/plain       - as text
    /// </summary>
    /// <param name="controller"></param>
    /// <param name="data">Data to return</param>
    /// <returns>serialized data</returns>
    /// <example>
    /// public ActionResult GetCustomers()
    /// {
    ///     return this.Negotiated(repo.Customers.OrderBy(c => c.Company));
    /// }
    /// </example>
    public static NegotiatedResult Negotiated(this Controller controller, object data)
    {
        return new NegotiatedResult(data);
    }

    /// <summary>
    /// Return content-negotiated content of the data based on Accept header.
    /// Supports:
    ///    application/json - using JSON.NET
    ///    text/xml         - Xml as XmlSerializer XML
    ///    text/html        - as text, or an optional View
    ///    text/plain       - as text
    /// </summary>
    /// <param name="controller"></param>
    /// <param name="viewName">Name of the View to render when Accept is text/html</param>
    /// <param name="data">Data to return</param>
    /// <returns>serialized data</returns>
    /// <example>
    /// public ActionResult GetCustomers()
    /// {
    ///     return this.Negotiated("List", repo.Customers.OrderBy(c => c.Company));
    /// }
    /// </example>
    public static NegotiatedResult Negotiated(this Controller controller, string viewName, object data)
    {
        return new NegotiatedResult(viewName, data);
    }
}
```

Output Generation – JSON and XML

Generating output for XML and JSON is simple – you use the desired serializer and off you go. Using XmlSerializer and JSON.NET it’s just a handful of lines each to generate serialized output directly into the HTTP output stream.
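Here’s a self-contained sketch of the XML half. It uses XmlSerializer like NegotiatedResult does, but swaps the XmlTextWriter for `XmlWriter.Create()` with `XmlWriterSettings`, which lets you toggle indentation and set `CloseOutput = false` so disposing the writer leaves the underlying stream open (disposing an XmlTextWriter also closes the stream it wraps). `XmlOutput` and this `Customer` class are hypothetical; in the ActionResult the stream would be `response.OutputStream`.

```csharp
using System;
using System.IO;
using System.Text;
using System.Xml;
using System.Xml.Serialization;

// Hypothetical POCO used only for this example.
public class Customer
{
    public int Id { get; set; }
    public string Company { get; set; }
}

// Hypothetical helper: serializes any object as XML into a stream.
public static class XmlOutput
{
    public static void Write(Stream output, object data, bool formatOutput)
    {
        // Nothing to serialize; mirrors the null check in NegotiatedResult.
        if (data == null)
            return;

        var settings = new XmlWriterSettings
        {
            Indent = formatOutput,               // pretty-print only when asked to
            Encoding = new UTF8Encoding(false),  // UTF-8 without a byte order mark
            CloseOutput = false                  // leave the target stream open
        };

        using (XmlWriter writer = XmlWriter.Create(output, settings))
        {
            var serializer = new XmlSerializer(data.GetType());
            serializer.Serialize(writer, data);
        } // disposing the writer flushes any buffered output
    }
}
```

Leaving the stream open matters in an ActionResult because the response stream belongs to ASP.NET, not to the serializer; the serializer should write and flush, not close.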

Please note that this implementation uses JSON.NET for its JSON generation rather than the JavaScriptSerializer that MVC uses by default, which I consider an additional bonus of implementing this custom ActionResult. I’d already been using a custom JsonNetResult class previously, but now that’s simply rolled into this custom ActionResult.

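Just keep in mind that JSON.NET outputs slightly different JSON than JavaScriptSerializer for certain things, like collections, or dates, which JSON.NET writes as ISO 8601 strings rather than the `"\/Date(...)\/"` format, so client behavior may change. One possible addition to this implementation would be a flag to switch the JSON serializer.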

HTML View Generation

HTML view generation actually turned out to be easier than anticipated. Initially I used my generic ASP.NET ViewRenderer class, which can render MVC views from any ASP.NET application. However, since we are executing inside an active MVC request, there’s an easier way: we can simply create a ViewResult, populate its members and then execute it.

The text/html handling code that renders the view is simply this:

```csharp
response.ContentType = "text/html";
if (!string.IsNullOrEmpty(ViewName))
{
    var viewData = context.Controller.ViewData;
    viewData.Model = Data;

    var viewResult = new ViewResult
    {
        ViewName = ViewName,
        MasterName = null,
        ViewData = viewData,
        TempData = context.Controller.TempData,
        ViewEngineCollection = ((Controller)context.Controller).ViewEngineCollection
    };
    viewResult.ExecuteResult(context.Controller.ControllerContext);
}
else
    response.Write(Data);
```

which is a neat and easy way to render a Razor view, assuming you have an active controller that’s ready for rendering. Sweet: the ViewRenderer dependency is gone, which makes this class self-contained with no external dependencies other than JSON.NET.

Summary

While this isn’t exactly a new topic, it’s the first time I’ve actually delved into it with MVC. I’ve been doing content negotiation with Web API, and before that with my own REST library, but this is the first time it’s come up as an issue in MVC. As I worked through it, I found that being able to return HTML Views *and* JSON and XML results from a single controller action is appealing in many situations, especially in this particular application, where we return identical data models for each of these operations.

Rendering content negotiated views is something I hope ASP.NET vNext will provide natively in the combined MVC and Web API model, but we’ll see how it actually gets implemented. In the meantime, a custom ActionResult that provides this functionality is a workable and easily adaptable way of handling this going forward. Whatever ends up happening in ASP.NET vNext, the abstraction can probably be changed to support the native features of the future.

Anyway, I hope some of you find this useful, if not for direct integration then as insight into some of the rendering logic MVC uses to get output into the HTTP stream…

Related Resources

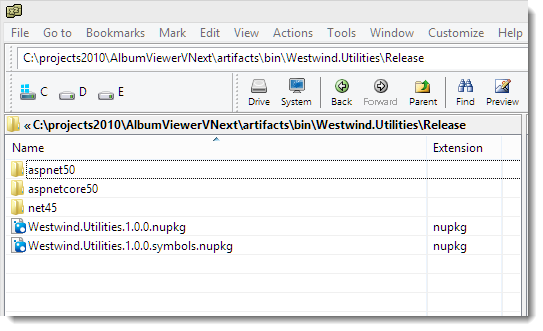

- Latest Version of NegotiatedResult.cs on GitHub

- Understanding Action Controllers

- Rendering ASP.NET Views To String

![FileNesting[1] FileNesting[1]](http://weblog.west-wind.com/images/2014Windows-Live-Writer/546430947e1f_118F6/FileNesting%5B1%5D_thumb.png)

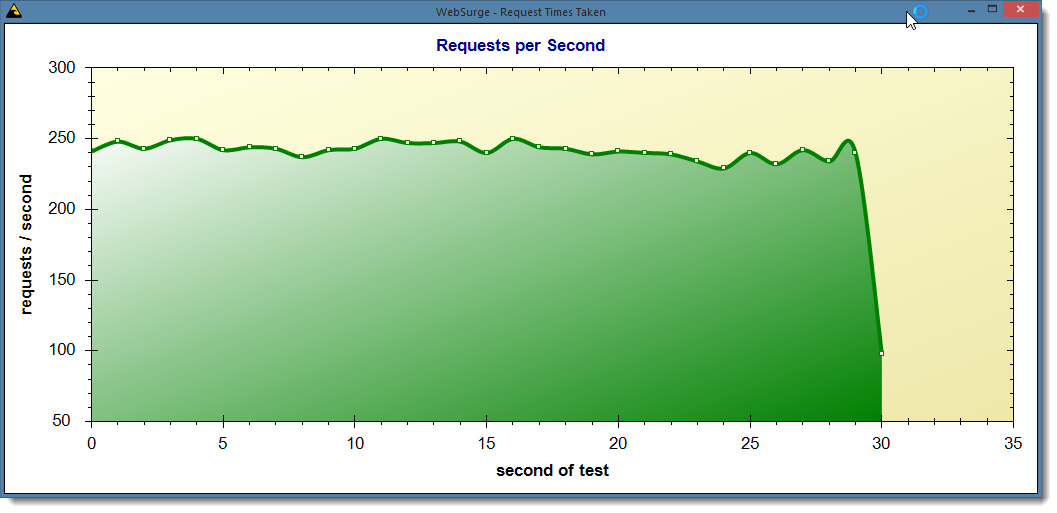

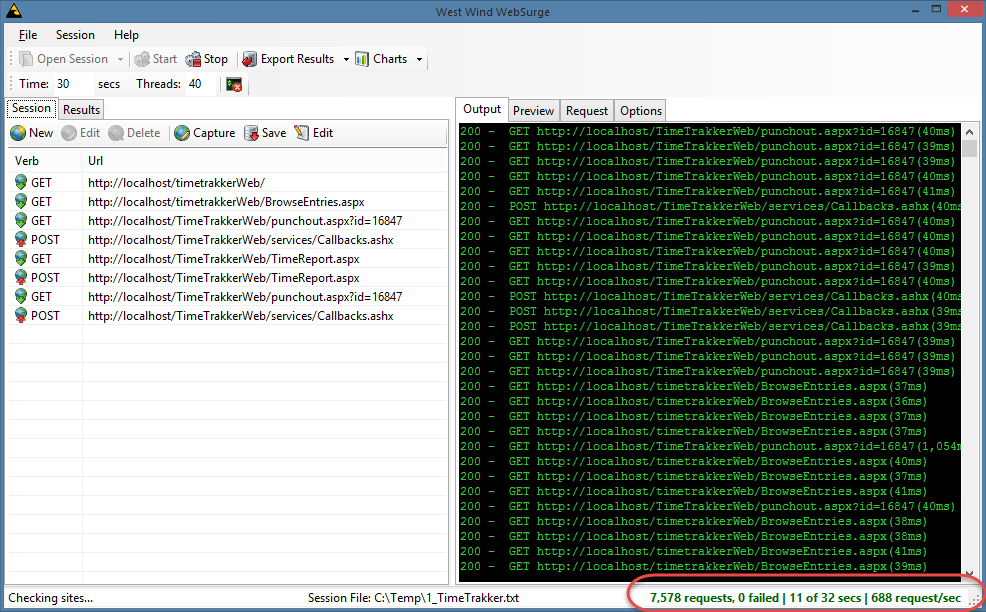

![ResultsDisplay[1] ResultsDisplay[1]](http://weblog.west-wind.com/images/2014Windows-Live-Writer/Web-Load-Testing-West-Wind-WebSurge_EBFD/ResultsDisplay%5B1%5D_thumb.png)