ASP.NET Core and CORS Gotchas

External Network Access to Kestrel and IIS Express in ASP.NET Core

Dealing with Anti-Virus False Positives

I've been working on Markdown Monster for a while now. It's a standalone desktop application and in recent months I've been plagued with Anti-Virus false positives for the installation executable. I didn't realize anything was wrong at first, until a few occasional emails came rolling in from users telling me their anti-virus flagged the installer - in many cases completely blocking the install process.

My first reaction was - "ah, just a fluke with a false positive". After all I know what's in my code and there's nothing threatening in here. But to my chagrin, using VirusTotal - which is used by Chocolatey and other distribution sources - I was coming away with 9 AV failures:

Looks nasty doesn't it? I had to take a closer look.

Figuring out what's going on

Anti-Virus false positives are a pain because it's quite likely if you open the package and see a virus warning you're going to be very hesitant to go any further, my assurances aside :-) Several people contacted me in recent weeks and let me know that the installer was flagged by their Anti-Virus tool. A few were brave and installed anyway - saying they trusted me that there was no malice in these files since they are coming from me. Brave indeed - I'm not sure I'd do the same. Seeing AV warnings on software is something you generally want to take serious.

In this case however, it turns out that it's definitely a case of false positives. How do I know this?

Well, let me tell you a story...

As it turns out there were a number of factors at play here:

- One third party library that had been flagged as malicious

- One installer platform apparently tagged

In order to track down the problem I tried a boatload of things to try and isolate where the problem was coming from. It took a while but I think I'm out of the woods for now. In this post I walk through the morass of trying to figure out what was causing the false positives and the workarounds that eventually allowed me to get past the problem - after quite a bit of sleuthing and wasted time. I figure it might be useful you find yourself in a similar position with your application...

Third Party Problem

I started by removing all DLL dependencies from the installed distribution before compiling into the installer. To my surprise, after removing all dependencies VirusTotal came down to 3 AV hits, instead of the previous 9 I started with - a definite improvement.

It turns out that one third party library - hunspell spell checker library specifically - has had a problem with a very particular version. Doing some research I found that another vendor had built a custom version of hunspell.dll that did some monkey business - and that's what got hunspell flagged as a potential trojan. Removing hunspell immediately dropped a number of the AV hits (down to 3 from 9).

I played around with several different versions of hunspell and found that only the latest version of NHUnspell was flagging AV. I uninstalled and installed an older version and AV no longer flagged those particular items.

I suspect this is also a false positive. After all hunspell is open source and quite popular as it it's used by major pieces of software like most browsers and most open source editors. It's also open source so the code is there for all to see - it'd be hard to hide a trojan in broad view especially in tool with such tightly defined scope and size. But... nevertheless it got flagged and the only way for me to get past this was to use an older version.

Which is ridiculous if you think about it!

Installer Woes

This still left me with 3 AV hits one of which was a major vendor - Avast.

At this point I wasn't sure what to try. I had removed all external binaries, and I was still getting errors.

I then zipped up all code without using the installer software (InstallMate is what I use) - IOW, I just packaged up the entire distribution folder minus Installer package that provides the shortcut, registry keys (for IE version and file association) and Environment (adding to path) registration. And lo and behold - no AV hits.

I then built an empty installer - nothing in it except all the text and image resources - also no AV hits. I then added back the DLLs - no AV hits. Added back my main EXE - and BAM! - back to having AV troubles.

I then also tried just checking the main EXE on its own on VirusTotal and that comes away clean with 0 AV hits as well.

MADNESS!

In summary - on its own the Exe is fine. On its own the installer minus EXE is fine. The full distribution zipped up plain without the installer is also fine. All fine, but the combination of installer plus my EXE results in multiple AV hits.

Yup that makes perfect sense. NOT!

This really makes you wonder how much faith you should have in these anti-virus solutions. If the individual parts are clean but the combined parts trigger, something is seriously amiss in the detection process. Further if you look at the original screen shot of the AV hits, every vendor seems to be triggering on a completely different threat. Again how should this be possible if individually the files are fine, but packaged they are not? How reliable is this stuff really?

Rebuilding the Installer

At this point the only solution I have left to me is to rebuild the installer.

I've used Tarma's Installmate for years and years. It's been easy to work with, very reliable and with all my other products I never had an AV problem. In this case though clearly some magic combination is triggered that sets of AV alarms and I was just not able to shake it.

So - I created a new installer using Inno Setup which is a very popular and free solution. My install is fairly straight forward in that it copies a fixed file structure and just needs a couple of registry entries, an environment setting and a file association, so it was relatively painless to build a new installer that duplicates what I had with InstallMate. The hardest part was re-creating the installer images in the right format :-)

After building the final install I sent it off to VirusTotal and... it came back clean - no AV hits:

Yay!

Now here's the scary part - I've sent up the file several times and had the very same file reanlysed a few times. 2 out of 5 times it came up with one AV hit (for some obscure Chinese AV software). But again - that makes you wonder how reliable all of this AV testing is when several consecutive runs of the same exact file produce different results?

Signing the Installer and Main Executable

While I was fretting about the fact that this software was probably not getting used at all due to the AV issues, I also decided it's probably a good idea to have the installer and the main Exe signed to prove the identity where it comes from. This is probably doubly important since the code for Markdown Monster is available and it's possible to recompile the application. The Sign Code signature clearly identifies the official version that is published by West Wind Technologies.

I've put off signing my software for a long time, because it's quite the hassle to aquire a certificate, get it installed properly, sign your code and then make sure the certificate is renewed each year. I found out that prices for these Code Signing certs have come down somewhat since I last checked. I ended up with a Commodo Certificate from the SSL Store. And true to form the first certificate that was issued didn't want to properly install into the Windows certificate store. I was unable to export it or otherwise reference it. I had to have it re-issued, but not after wasting an hour trying to get it to work. When the new certificate arrived it 'just' worked.

Security on Windows is always a hassle because it's so bloody unclear on where certificates need to live so that applications that need it can find it.

Here are a few hints about Code Signing an Exe:

- Use the same browser to generate and then install the certificate

- FireFox worked best for me - it allowed easy export of the Certificate

- Chrome and IE installed the cert in the wrong place and I couldn't export to PFX

- FireFox could export to PKCS#12 which is the same as PFX format

- I had to install the certificate to Local Machine/Person to get it to work

- Signtool was not able to find the cert when installed in Current User/Personal

- Use SignTool.exe to sign using Installed Certificate (rather than PFX)

- Run SignTool as Admin (when cert is installed in Local Machine)

To actually sign a certificate I use the following in my Powershell build script:

& "C:\Program Files (x86)\Microsoft SDKs\Windows\v7.1A\Bin\signtool.exe"

sign /v /n "West Wind Technologies"

/sm /s MY

/tr "http://timestamp.digicert.com" /td SHA256 /fd SHA256".\Builds\CurrentRelease\MarkdownMonsterSetup.exe"The /n "West Wind Technologies" switch specifies a substring of the Certificate's subject item which includes CN=West Wind Technologies so that works. The /sm switch uses the machine level certificate which was the only way I could get it to work. I also had to run as an Administrator in order to get at that certificate.

For me this only worked with the certificate installed in the Local Machine store, not the current user and having to run as an Administrator. I couldn't get SignTool to work with the Current User/Personal installed certificate and running without the /sm switch - when running as a regular user I always get the message that SignTool can't locate an appropriate certificate. There probably is a way to make this work with current user but I couldn't find the right incantation. If you know how to sign without running as an Admin (and using the certificate store rather than the PFX with a password) please drop a comment.

So when you fire up the unsigned installer it looks like this:

After signing the setup EXE and running the signed installer it goes to a the much less scary looking dialog:

with the MM icon and the West Wind publisher name applied.

While this ended up not having any effect AV hits, it does provide more confidence for people downloading the software.

So now I have a clean AV slate (for now - fingers crossed) and a properly signed Exe which is nice. But man a lot of effort went into making this all happen.

Now to go and sign all the rest of my products properly as well.

Anti-Virus Hell

At the end of the day, this was a major pain in the ass, when essentially it came down to false positive AV scores. But, there's really nothing I could do other than try to work around the issues I mentioned in the end having to completely ditch my perfectly fine installer software for an alternative, just to get a different result. Nothing has changed - the same binaries are deployed as before, the same installation changes are made - one solution flags AV, the other does not. And that is just not cool and leads me to think that much of the AV tracking is not as sophisticated as we'd expect it to be.

To be fair most AV vendors have Web sites to submit false positives and the three I submitted to were responsive to rescanning (and ultimately stating there's nothing wrong with files). But that's not a sustainable solution if you push out new builds that are likely to trigger again in the future.

This is a pain for software vendors to say the least. I'm at the mercy of the AV software that is essentially holding software hostage based on false positives. Nobody wants to install software that is flagged as malware - even if you trust the source.

While searching around and Twittering about the issues I ran into, I got an earful from other developers who've gone through similar pains.

Markdown Monster

If you previously tried to use Markdown Monster and ran into Anti-Virus warnings, you might want to give it another try. Starting with Version 0.51 the new installer and signed code is live.

Error Handling and ExceptionFilter Dependency Injection for ASP.NET Core APIs

While working on my ASP.NET Core API and Angular 2.0 AlbumViewer sample, one last thing I need to round out the feature set is to make sure that consistent error results are returned to the client. Unhandled errors should also be logged to disk for later reference.

ASP.NET Core does not provide a consistent error response for API errors out of the box. In fact, an error in an API results in the same error result as any other type controller result - an HTML error page (which you can configure) or nothing at all if you don't hook up any error handling middleware. For API's this is generally useless - a client application expecting a JSON result is not going to be able to do anything useful with an HTML error page, so some extra work implementing an ExceptionFilter is required. Actually there are a several ways you can implement error handling but ExceptionFilters are amongst the easiest and most flexible to implement. Other alternatives might include custom middleware but I won't cover that in this post.

ASP.NET Core also does not include a built-in file logging service so I have to rely on the excellent 3rd Party Serilog library to provide file logging for me. Additionally getting a logging dependency into a filter via Dependency Injection requires a little extra work.

In this post I describe how to create an ExceptionFilter to create consistent API error responses and use a Dependency Injected logging provider. In the process I'll talk a bit about error handling in my API implementation.

API Error Handling - A Use Case for an ExceptionFilter

In my AlbumViewer API I capture all errors using an MVC ExceptionFilter. As you might remember from previous posts, in ASP.NET Core MVC and APIs share a common processing pipeline so any filters you create can be used by both MVC and API controllers. In this case the controller will be specific to API results.

Inside of my API code any unhandled Exception should trigger the ExceptionFilter, which then captures the exception and in response returns a JSON error response in the form of a standard error object. The idea is that any error I can possibly intercept will be returned as a JSON response so that the client can provide some meaningful error information. The object returned always has a .message property that can that can potentially be used to display error information in a front end.

To start with, here is my initial error filter implementation without any logging:

public class ApiExceptionFilter : ExceptionFilterAttribute

{

public override void OnException(ExceptionContext context)

{

ApiError apiError = null;

if (context.Exception is ApiException)

{

// handle explicit 'known' API errors

var ex = context.Exception as ApiException;

context.Exception = null;

apiError = new ApiError(ex.Message);

apiError.errors = ex.Errors;

context.HttpContext.Response.StatusCode = ex.StatusCode;

}

else if (context.Exception is UnauthorizedAccessException)

{

apiError = new ApiError("Unauthorized Access");

context.HttpContext.Response.StatusCode = 401;

// handle logging here

}

else

{

// Unhandled errors

#if !DEBUG

var msg = "An unhandled error occurred.";

string stack = null;

#else

var msg = context.Exception.GetBaseException().Message;

string stack = context.Exception.StackTrace;

#endif

apiError = new ApiError(msg);

apiError.detail = stack;

context.HttpContext.Response.StatusCode = 500;

// handle logging here

}

// always return a JSON result

context.Result = new JsonResult(apiError);

base.OnException(context);

}

}

The exception filter differentiates between several different exception types. First it looks at a custom ApiException type, which is a special application generated Exception that can be used to send user acceptable error messages to the client. I use these in my controllers for handled errors that I want to use to display in the front end. Typically these are validation errors, or known failures like a login failure.

Next are unauthorized execptions which are handled specially by returning a forced 401 exception which can be used on the client to force authentication. The client can check for 401 errors and redirect to the login page. Note that ApiError results can also generate 401 responses (such as on a login error for example).

Finally there are unhandled exceptions - these are unexpected failures that the application doesn't explicitly know about. This could be a hardware failure, a null reference exception, an unexpected parsing error or - horror of horrors - a good old developer introduced bug. Basically anything that's - unhandled. These errors generate a generic error message in production so that no sensitive data is returned. Or at debug time it can optionally return the error message and stack trace to provide debugging information.

In all the use cases the exception filter returns an API error object as a response:

context.Result = new JsonResult(apiError);

base.OnException(context);which triggers the custom response that always ensures an object result with a .message property to the client.

Displaying Default Error Results

As an alternative - if you want to see the developer error page on unhandled exceptions - you can also exit without setting the

context.Resultvalue which triggers whatever the default error behavior was. If you want the default behavior for one or another of the use cases just return. For example for unhandled exceptions I could do:context.HttpContext.Response.StatusCode = 500; #if DEBUG return // early exit - result not set #ENDIF

Note that the filter implementation uses a couple support types:

ApiException

A custom exception that used to throw explicit, application generated errors that can be funnelled back into the UI and used there. These typically are used for validation errors or common operations that can have known negative responses such as a failed login attempt. The idea is that this error is used to return a well defined error message that is safe to be used on the client.ApiError

A custom serialization type used to return the error information via JSON to the client. Exceptions are not good for serialization because of the sensitive data and complex structure, so a simpler type is needed for serialization. The key property is.messagethat contains a message that is always set - even if it is a non-descript message in the case of unhandled exception. The type also has a.detailproperty that can contain additional information and a collection of.errorsthat can return a set of errors such as a list of validation errors to the client.

The two classes are implemented like this:

public class ApiException : Exception

{

public int StatusCode { get; set; }

public ValidationErrorCollection Errors { get; set; }

public ApiException(string message,

int statusCode = 500,

ValidationErrorCollection errors = null) :

base(message)

{

StatusCode = statusCode;

Errors = errors;

}

public ApiException(Exception ex, int statusCode = 500) : base(ex.Message)

{

StatusCode = statusCode;

}

}

public class ApiError

{

public string message { get; set; }

public bool isError { get; set; }

public string detail { get; set; }

public ValidationErrorCollection errors { get; set; }

public ApiError(string message)

{

this.message = message;

isError = true;

}

public ApiError(ModelStateDictionary modelState)

{

this.isError = true;

if (modelState != null && modelState.Any(m => m.Value.Errors.Count > 0))

{

message = "Please correct the specified errors and try again.";

//errors = modelState.SelectMany(m => m.Value.Errors).ToDictionary(m => m.Key, m=> m.ErrorMessage);

//errors = modelState.SelectMany(m => m.Value.Errors.Select( me => new KeyValuePair<string,string>( m.Key,me.ErrorMessage) ));

//errors = modelState.SelectMany(m => m.Value.Errors.Select(me => new ModelError { FieldName = m.Key, ErrorMessage = me.ErrorMessage }));

}

}

}Using the Exception Filter

To use the Exception Filter I can now simply attach it to my controllers like this:

[ApiExceptionFilter]

[EnableCors("CorsPolicy")]

public class AlbumViewerApiController : ControllerOr you can globally add it like this:

services.AddMvc(options =>

{

options.Filters.Add(new ApiExceptionFilter());

})We can now try this out in a controller method like this for simulating an unhandled exception:

[HttpGet]

[Route("api/throw")]

public object Throw()

{

throw new InvalidOperationException("This is an unhandled exception");

}and we should end up with a result like this when in debug mode:

{

message: "This is an unhandled exception",

isError: true,

detail: " at AlbumViewerAspNetCore.AlbumViewerApiController.Throw() in

C:\...\AlbumViewerApiController.cs:line 53 at

Microsoft.AspNetCore.Mvc.Internal.ControllerActionInvoker.<InvokeActionFilterAsync>d__28.MoveNext()",

errors: null

}and like this in non-debug mode:

{

message: "An unhandled error occurred.",

isError: true,

detail: null,

errors: null

}Consistent Errors on the Client

A client application can now make some assumptions around the error it receives. For example in my Angular 2.0 client application I use a custom response error parser that explicitly checks for objects with a message property and if it finds one uses it or else creates one as part of the Observable http call:

parseObservableResponseError(response) {

if (response.hasOwnProperty("message"))

return Observable.throw(response);

if (response.hasOwnProperty("Message")) {

response.message = response.Message;

return Observable.throw(response);

}

// always create an error object

let err = new ErrorInfo();

err.response = response;

err.message = response.statusText;

try {

let data = response.json();

if (data && data.message)

err.message = data.message;

}

catch(ex) { }

if (!err.message)

err.message = "Unknown server failure.";

return Observable.throw(err);

}I can then generically parse all API exceptions like inside of the service that calls the server API using the Angular Http service:

// service method

saveAlbum(album) {

return this.http.post(this.config.urls.url("album"),

album)

.map( response => {

this.album = response.json();

return this.album;

})

.catch( new ErrorInfo().parseObservableResponseError );

}The service is then called from a component like this:

// component method

saveAlbum(album) {

return this.albumService.saveAlbum(album)

.subscribe((album: Album) => {

this.error.info("Album saved.");

},

err => {

// display the error in error component

this.error

.error(`Unable to save album: ${err.message}`);

});

};In the UI this looks something like this then:

This makes the client side error handling very clean as I never have to figure out what format the error is in. The server returns errors with a .message property and the client error parser automatically tries to parse any errors that don't already have an error object into an error object, so that the UI code always is guaranteed a consistent object.

This makes error handling in API calls very easy following a very simple passthrough pattern where the expectation is that everything has a .message property (plus some optional additional information).

Adding Logging with Serilog

Back on the server we now have error handling, but now I also want to log my errors to disk.

If you look back at the initial filter code I left a couple of comment holes for logging.

When unhandled exceptions occur I would like to log those errors to the configured log provider. Personally I prefer file logs, but ASP.NET Core doesn't include support for a built-in file log provider (it's coming in future versions). For now I'm going to use Serilog, which is an excellent third party log package with tons of integration options including a rolling file sink. I'll use it to write logs that roll over to a new file daily.

To set this up I'm going to add a couple of Serilog packages and add some additional ASP.NET Logging packages in project.json:

"dependencies": {

..."Microsoft.Extensions.Logging": "1.0.0","Microsoft.Extensions.Logging.Filter": "1.0.0","Microsoft.Extensions.Logging.Console": "1.0.0","Microsoft.Extensions.Logging.Debug": "1.0.0","Serilog.Extensions.Logging": "1.2.0","Serilog.Sinks.RollingFile": "3.1.0"

}Next I need to configure logging in the Startup.Configure() method:

// Serilog configuration

Log.Logger = new LoggerConfiguration()

.WriteTo.RollingFile(pathFormat: "logs\\log-{Date}.log")

.CreateLogger();

if (env.IsDevelopment())

{

// ASP.NET Log Config

loggerFactory.WithFilter(new FilterLoggerSettings

{

{"Trace",LogLevel.Trace },

{"Default", LogLevel.Trace},

{"Microsoft", LogLevel.Warning},

{"System", LogLevel.Warning}

})

.AddConsole()

.AddSerilog();

app.UseDeveloperExceptionPage();

}

else

{

loggerFactory.WithFilter(new FilterLoggerSettings

{

{"Trace",LogLevel.Trace },

{"Default", LogLevel.Trace},

{"Microsoft", LogLevel.Warning},

{"System", LogLevel.Warning}

})

.AddSerilog();

// ...

}Serilog requires some configuration and the first line above configures the default logger.

Use the Serilog Singleton?

Serilog actually works as a Singleton, so in theory you could just use Logger.Log directly to log to file. Once configured you can simply do something like this:

Log.Logger.Information("Applicaton Started");

...

Log.Logger.Information(ex,"Failed sending message data to server.");However, using this approach you bypass any other providers hooked up to the ASP.NET logging pipeline. In this case my application also logs to the Console in debug mode, and I also want to log warnings and errors that ASP.NET generates internally. If I want all of this to go to Serilog's output I have to run through the ASP.NET Logging pipeline which requires that I use configuration through Dependency Injection.

Injecting the Logger into the Exception Filter

In order to use the logger in my Exception filter I have to first allow it to be injected into the constructor:

public class ApiExceptionFilter : ExceptionFilterAttribute

{

private ILogger<ApiExceptionFilter> _Logger;

public ApiExceptionFilter(ILogger<ApiExceptionFilter> logger)

{

_Logger = logger;

}

// ...

}

I can then add following for the Unhandled Exception handler without:

_Logger.LogError(new EventId(0), context.Exception, msg);But we're not quite there yet. The standard filter attribute doesn't support dependency injecttion. Once you add dependencies a different approach is required:

- Use the

[ServiceFilter]Attribute - Add the filter to the Injection list

Let's change the filter declaration on the controller to:

[ServiceFilter(typeof(ApiExceptionFilter))]

public class AlbumViewerApiController : ControllerIn addition I need to declare the ApiExceptionFilter as a dependency in the DI configuration in the ConfigureServices() method of Startup.cs:

services.AddScoped<ApiExceptionFilter>();And voila - now our logging should work with the injected ASP.NET Logger and I can do:

_Logger.LogWarning($"Application thrown error: {ex.Message}", ex);

_Logger.LogWarning("Unauthorized Access in Controller Filter.");

_Logger.LogError(new EventId(0), context.Exception, msg); Which produces output like the following (for the last item which is the unhandled exception):

2016-10-14 18:58:43.109 -10:00 [Error] This is an unhandled exception

System.InvalidOperationException: This is an unhandled exception

at AlbumViewerAspNetCore.AlbumViewerApiController.Throw() in

C:\...\AlbumViewerApiController.cs:line 53

at Microsoft.AspNetCore.Mvc.Internal.ControllerActionInvoker

.<InvokeActionFilterAsync>d__28.MoveNext()Yay!

Dependency Injection - not always easier

If you're new to Dependency Injection you probably think that the DI implementation here adds a ton of ceremony around what should be a really simple task. I agree. Having to register a filter and then explicitly using a special attribute syntax to get the injection to work seems like a huge pain in the ass. Especially since it seems that DI could automatically be handled by the standard Filter implemenation. I'm not sure why that doesn't just work, but my guess it's for performance as DI does add some overhead.

FWIW, Serilog supports a static Singleton logger instance that you can use and bypass all of the ceremony. If you just want to log your own errors and don't care about the rest of ASP.NET's logging features, then you can skip dependency injection entirely and just use the Serilog's Singleton directly:

Log.Logger.Error(context.Exception, "An unhandled error occurred. Error has been logged.");which produces the following in the log file:

2016-10-15 11:02:09.207 -10:00 [Error] An unhandled error occurred. Error has been logged.

System.InvalidOperationException: This is an unhandled exception

at AlbumViewerAspNetCore.AlbumViewerApiController.Throw() in

C:\projects2010\AlbumViewerVNext\src\AlbumViewerNetCore\Controllers\AlbumViewerApiController.cs:line 53

at Microsoft.AspNetCore.Mvc.Internal.ControllerActionInvoker.<InvokeActionFilterAsync>d__28.MoveNext()Clearly this is a much simpler approach, but you lose the ability to also log to other log providers configured in ASP.NET. In short you have options - you can do things the 'recommended' way or choose a simpler route if that works for you. Choice is good.

Alternatives

Creating an exception filter to handle unhandled exceptions is one way to handle errors. It's a reasonably reusable approach - you can create an easily reusable implmentation of a filter that can easily be applied to many APIs and applications.

Another approach could be to create custom middleware that automatically scans for API requests (ie. requests that Accept: application/json or text/xml perhaps) and handle errors as part of the middleware pipeline. The implementation of such middleware would be conceptually similar, but with the added bonus of having a more common configuration point in the ASP.NET Core configuration pipeline (via ConfigureServices and Configure methods in Startup.cs).

However, personally I feel that using a filter is actually a better choice as in most application exception handling tends to be more 'personal' in that you end up configuring your error logging and perhaps also the error response logic. Using a simple baseline to inherit from or even just re-implment seems more effective than trying to have a do-everything piece of middleware with cryptic configuration switches.

If there's interest in this we can explore that in a future post - leave a comment if that's of interest to you. For now I feel that an API filter that you can apply to specific controllers offers more control.

Summary

Exception handling in API application's is rather important as errors - both handled and unhandled - need to be passed to the client in some way to let it know that something went wrong. By having a consistent error format for all errors the client can use a common approach to handle errors - you don't want the client to receive HTML error pages that it won't be able to do anything useful with.

The best way to handle this in your ASP.NET Core API is to create an ExceptionFilter that intercepts all exceptions and then returns an error object in a common format. You'll also want to make sure you capture the error information and log it, and to make sure you differentiate between 'known' errors and unhandled errors, in order to not return sensitive data back to the client.

I've been using this approach forever for services, even in pre-JSON days and it works well. It's almost silly that this isn't a built-in feature of the API implementation in ASP.NET core. Since content-negotiation is a thing, why shouldn't error results in those same content negotiated formats also be automatic? But using MVC/API Exception Filters are pretty easy to implement so it's not difficult to create the behavior you want on your own. Either way there's no reason not to return consistent error information for your APIs.

Resources

Image Copyright: aruba2000 / 123RF Stock PhotoPost created with Markdown Monster

Automating Installation Builds and Chocolatey Packaging

In my work with Markdown Monster I've been pushing new release (ok pre-release) builds out several times a week. It's trivial for me to package my current build into a setup executable and publish it into the right place in a few minutes, simply by using some automated Powershell scripts.

This may not be news to some of you, but for me - someone who doesn't work frequently with desktop applications - having a full build pipeline that goes from build all the way through a deployable Installer and Chocolatey package has been a huge boon for me, when I started implementing it about a year ago or so for all of my desktop apps.

In this post I'll describe the admittedly simple process I use to create my Markdown Monster packages - installer and Chocolatey package and upload my code to my server and GitHub for releases. I'm writing this more for myself in order to have one place to keep all of this information, but some of you may also find this useful, and perhaps have some anecdotes of your own that you might share in the comments.

Build Automation

Markdown Monster is a WPF desktop application that has a fairly simple footprint and installation process. It's basically a single folder hierarchy installation (assuming .NET is installed), plus a bunch of registration features - file extension, PATH, IE Version etc. - handled by the installer. Even though it's pretty simple install, getting all the pieces together to create a one click install still involves quite a few steps.

In my build process there are four grouped sets of operations:

- Building the installer

- Uploading the installer to the Web site

- Pushing a release to Github

- Creating and publishing a Chocolatey Package

which breaks down into a lot more detailed steps:

- Create a distribution folder

- Copy files from various locations into this distribution folder

- Code sign the main EXE

- Run the Installer Packaging (Inno Setup)

- Code sign the resulting Setup EXE

- Create a zip file from the Setup EXE

- Create a version specific copy into the Releases folder

- Publish the release version to my Web server for download

- Commit and Push the release to Github (which will be linked to Chocolatey)

- Build a Chocolatey package that references the release on Git

- Publish the Chocolatey package

Doing all of this manually would be crazy time consuming, but - ahem - I usually start with manual publishing initially before I hit the "this is too much work" stage and create an automated build. I think I'm to the point now where, when I create a new project, the build process is hooked up right from the start, because it's easier to do it in stages than all at once. I think when I did last with Markdown Monster, it took the better part of the day to get the build steps all working and tested properly.

Let's take a look and see how I automate these operations.

Building an Installer

As is often the case I started simple with a Powershell script a while back and then kept adding stuff. The first and perhaps most time consuming task is the Install package builder.

My build script looks something like this:

$cur="$PSScriptRoot"

$source="$PSScriptRoot\..\MarkdownMonster"

$target="$PSScriptRoot\Distribution"

robocopy ${source}\bin\Release ${target} /MIR

copy ${cur}\mm.bat ${target}\mm.bat

del ${target}\*.vshost.*

del ${target}\*.pdb

del ${target}\*.xml

del ${target}\addins\*.pdb

del ${target}\addins\*.xml

cd $PSScriptRoot

& "C:\Program Files (x86)\Microsoft SDKs\Windows\v7.1A\Bin\signtool.exe" sign /v /n "West Wind Technologies" /sm /s MY /tr "http://timestamp.digicert.com" /td SHA256 /fd SHA256 ".\Distribution\MarkdownMonster.exe""Running Inno Setup..."& "C:\Program Files (x86)\Inno Setup 5\iscc.exe" "MarkdownMonster.iss" & "C:\Program Files (x86)\Microsoft SDKs\Windows\v7.1A\Bin\signtool.exe" sign /v /n "West Wind Technologies" /sm /tr "http://timestamp.digicert.com" /td SHA256 /fd SHA256 ".\Builds\CurrentRelease\MarkdownMonsterSetup.exe""Zipping up setup file..."

7z a -tzip "$PSScriptRoot\Builds\CurrentRelease\MarkdownMonsterSetup.zip" ".\Builds\CurrentRelease\MarkdownMonsterSetup.exe"The first part deals with setting up the Distribution folder that can be pushed into the installer (and that actually lives in an external .ps1 file). The code then signs the EXE, runs the installation packaging (Inno Setup's CLI), signs the final Setup EXE and finally also creates a zip file that embeds the setup EXE (apparently lots of organizations don't allow downloading of EXEs, but some a zipped EXE is fine - go figure).

All in all this process takes about 20 seconds to run - most of it taken up by Inno Setup - which is not bad for an automated build at any time, using only spit and duct tape :-)

Creating Releases and Uploading

Once I have a build that's ready to be released I create a copy of the setup exe and store it in a releases folder with a version number. The EXE releases are checked into the Git repo, and published along with the rest of the project and pushed to GitHub.

These releases are ultimately referenced by Chocolatey for specific version downloads when doing a choco install.

The checking process and release file creation is manual, because it only happens for actual releases which is not that frequent (ie. maybe a few times a week).

I also upload the the final Setup package, the Zip file and a version file to my Web site. The version file is an XML file that is used to check for the latest version and again, I change the version here manually because it only happens when I'm ready to create a final published release.

The version XML file looks like this:

<?xml version="1.0" encoding="utf-8"?><VersionInfo><Version>0.57</Version><ReleaseDate>October 26th, 2016</ReleaseDate><Title>West Wind Markdown Monster 0.57 now available</Title><Detail>

This update adds a new Markdown Parser that better supports Github Flavored Markdown courtesy

of a new Markdown parse: MarkDig. There are also new options for creating links that open in a new window.

The latest version of the installer and binaries are now code-signed for source verification from West Wind.

For more detailed release info please see:

https://github.com/RickStrahl/MarkdownMonster/blob/master/Changelog.md

</Detail></VersionInfo>This file is used both by Markdown Monster's internal version checking. The file is also used by the Web site to get the latest version to display on the home page and the download page.

Once the build's been completed I upload to the Web site with a small Powershell script:

$uid= Read-Host -Prompt 'Username'

$pwd=Read-Host -Prompt 'Password' -AsSecureString

$pwd = [Runtime.InteropServices.Marshal]::PtrToStringAuto(

[Runtime.InteropServices.Marshal]::SecureStringToBSTR($pwd))

if(!$pwd) {Exit;}

curl.exe -T ".\Builds\CurrentRelease\MarkdownMonsterSetup.exe" "ftps://west-wind.com/Ftp/Files/" -u ${uid}:${pwd} -k

curl.exe -T ".\Builds\CurrentRelease\MarkdownMonsterSetup.zip" "ftps://west-wind.com/Ftp/Files/" -u ${uid}:${pwd} -k

curl.exe -T ".\Builds\CurrentRelease\MarkdownMonster_Version.xml" "ftps://west-wind.com/Ftp/Files/" -u ${uid}:${pwd} -kwhich makes it quick and easy to get all the files uploaded.

Chocolatey Builds

I really love Chocolatey and if you don't know about Chocolatey, do yourself a favor and head over to the site and install it. Chocolatey is a packaging solution for Windows that makes it easy for you to download and silently install software with a few steps. You can find most utility and developer related tools on Chocolatey and you can even install software that has to be licensed although in those cases you might have to apply a license before running. Chocolatey handles downloading and installing packages by running a silent installer. Once installed you can easily update installed packages using the choco upgrade command. Because Chocolatey is a command line tool, it's easy to automate and create install scripts with that can download and install all sort of software. Chocolatey has made it much easier to set up a working and dev environment on a new machine - quickly.

As a software vendor or tool provider I also publish several packages on Chocolatey - cause it's pretty easy to create packages. What's not so nice right now is that it takes quite a while for new packages to get approved due to the review requirements, but that's supposed to be getting better with better automated tooling and additional reviewers.

Publishing on Chocolatey

I distribute Markdown Monster via Chocolatey. I have to admit that even though I publish several packages I've found it hard to decide on how to best publish my packages on Chocolatey. They provide a number of different ways, where you can either provide a downloaded installer or an embedded installer. After a few iterations I've settled on downloaded installs, but I played around for a while with embedded installers. It seems that embedded installs are more work to create, take longer to upload (and often seem to time out) and take much longer to get approved. There are also a number of rules surrounding embedded installs, that caused me eventually to abandon the idea of embedded installs. So I went back to using downloaded installs.

One key thing to remember about downloaded installs is that you need to make sure to keep all versions you distributed on Chocolatey around - it's not good enough (anymore now with Checksums) to simply point at the current release URL. Rather each version has to provide a unique checksum for each file.

Currently I distribute the current release version via download from my Web site, and release tied versions that provide a version history from Github as part of the Markdown Monster repository. It's important to have access to historic releases if you plan on keeping Chocolatey's version history whereby you can download old versions. The Chocolatey versions then point at the raw files in the GitHub repo.

These releases all point at the GitHub release versions:

Scripting Chocolatey Package Creation

One of the nice things about Chocolatey is that it's pretty easy to create a package. A package is really just a Nuget XML manifest file and a Powershell script that describes how to run the installer.

This gets tedious though - as you have to grab a checksum and add it to the script file each time, so for a long time my process was manual.

But finally decided to automate that part as well and it's surprisingly easy with Powershell. The Chocolatey Packaging script I use does the following:

- Grabs just the filename of the latest release build from release folder

- Captures a CheckSum from the file

- Rewrites the

chocolateyinstall.ps1file with filename and checksum - Creates Chocolatey Package

- Uninstalls existing Choco package

- Installs new Choco Package from current folder

When I finally decided to automate this process I ended up with this code:

# Script builds a Chocolatey Package and tests it locally

#

# Assumes: Uses latest release out of Pre-release folder

# Release has been checked in to GitHub Repo

# Builds: ChocolateyInstall.ps1 file with download URL and sha256 embedded

cd "$PSScriptRoot"

# Example: "MarkdownMonsterSetup-0.55.exe"

$file = gci ..\builds\prerelease | sort LastWriteTime | select -last 1 | select -ExpandProperty "Name"

$sha = get-filehash -path ..\builds\prerelease\$file -Algorithm SHA256 | select -ExpandProperty "Hash"

# Echo

write-host $file

write-host $sha

# Fill into Choco Install Template

$filetext = @"

`$packageName = 'markdownmonster'

`$fileType = 'exe'

`$url = 'https://github.com/RickStrahl/MarkdownMonster/raw/master/Install/Builds/PreRelease/$file'

`$silentArgs = '/SILENT'

`$validExitCodes = @(0)

Install-ChocolateyPackage "`packageName" "`$fileType" "`$silentArgs" "`$url" -validExitCodes `$validExitCodes -checksum "$sha" -checksumType "sha256""@

# Write it to disk

out-file -filepath .\tools\chocolateyinstall.ps1 -inputobject $filetext

# Delete any existing NuGet Packages

del *.nupkg

# Create .nupkg from .nuspec

choco pack

choco uninstall "MarkdownMonster"

# Forced install out of current folder

choco install "MarkdownMonster" -fdv -s ".\"This script builds the package and tests it locally from the current folder. By running it I can check for errors, before submitting the package to Chocolatey.

When it's all said and done and I know the package works locally, I end up with .nupkg package file for my version and I can then just manually do:

choco pushto push the package to the server. Yay!

As is usually the case with tools, it takes a little time and tweaking to get everything to work just right and work out the odds and ends of edge cases. But overall the process I describe here is easy to setup and also easy to run and maintain.

At the end of the day I basically have a few scripts:

- CopyFile.ps1 // called form Build.ps1

- Build.ps1

- Upload.ps1

- Build.ps1 // chocolatey

In addition there are still a few manual steps for final releases:

- Change the version number and release notes in Version.xml file

- Create a version release copy of current build and copy to release folder

- Publish to Github

- Manually change the version number in the Nuget

.nuspecfile

Which is minor for an actual published release and is usually done in a few minutes. I could also automate these steps, but it doesn't seem worth it and... more importantly it's a good idea to review the release version and nuget files to update release notes and double check whether any other info has changed, so I'm OK with manually fixing these up.

In the end the main thing for me is: It works well with minimal fuss.

If you want to take a closer look at how this all works you can check it out in the Markdown Monster GitHub repo:

Here you can find the build scripts, the Inno Installer script, the Chocolatey package and the current and versioned releases.

Build Tools?

So I've been using script files to do this, and it works pretty well. But error handling is pretty marginal, although that's not really been an issue and this isn't exactly a mission critical piece of kit, so if something fails I can look at the errors, fix or comment out whatever code doesn't work and try again.

But recently after listening to Scott Hanselman's episode on HanselMinutes about Cake I'm wondering if I should be using a more fully featured build tool. I would love not having to wade through Powershell's godawful syntax.

Looking over the documentation, Cake does look really nice - especially for cross platform projects - but I'm not sure if it buys me much for a relatively simple desktop install as mine. It's something I'm going to keep in mind next time I need to build an install 'pipeline' like what I described above from scratch.

What are you doing for builds - especially for standalone applications like this? Are you doing what I do and use simple script? Or do you use something more sophisticated - drop a line in the comments, curious to hear what others are doing.

Summary

Regardless of whether you use duct tape and spit as I do with my Powershell scripts, or use a full blown build tool, having a quick and easy way to build a final deployable application in a few minutes has been a huge motivation for me. I can update code and release a new build as soon as a bug is fixed, and my release cycle is much more frequent than it was before. I used to be much more conservative with releases - if something was broken it'd have to wait. But with an easy release mechanism all of that has changed and I can push new releases out much more frequently and I really like the fact that it works this way.

Resources

Post created with West Wind Markdown Monster

Excluding the node_modules Folder in Visual Studio WebSite Projects

If you're working on a client side project that includes an NPM folder with a large number of dependencies and you're using a WebSite Project in Visual Studio, you've probably found that this is a terrible combination out of the box. It results in very slow load times and/or file load errors.

What's the problem?

WebSite Projects (WSP) are a pure file representation on disk, so they show you all files, including the crazy large number of files in the node_modules folder which isn't excluded by default.

WebSite Projects are created and mapped to a folder:

The problem is that WSPs don't have a project file. There's no file mapping, which is one of the primary reasons why it seems like a good idea for client side projects in the first place; nobody wants to maintain and add files manually to a project when managing files externally.

The downside is that you have no control over what gets pulled into the project. There is absolutely no configuration in Web site projects. This affects both the files you see in the 'project' as well as for publishing, although for publishing there is at least some control via a *.pubxml file where you can exclude files and folders from publishing. Unfortunately that's not true for the file content of the 'project'.

In short if you have a massive node_modules folder that folder shows in the project. There's no official, built-in way to limit files or folders - which seems rather silly given that this is such a common use case and we're easily 5 years in from when NPM became a common Web development 'thing'.

Why WebSite Projects?

For most ASP.NET applications I use Web Applications which are functionally opposite - you have all the control over files in a project so much so that you have to explicitly add every single one. For applications that include .NET code Applications that makes good sense. As it does when you actually work entirely in Visual Studio for your client side project where you manage all files through the IDE.

But Web Applications fall apart when files are not added and maintained through Visual Studio.

I use WebSite Projects only for static content sites (main site, product sites), or as is the case now when working for a client who insists that the project I'm working on with other tools shows up in Visual Studio and uses the same source control access.

I'm working work on an Angular 2 project, and although I don't actually edit the Web code in Visual Studio - I'm using WebStorm - due to requirements and the TFS source control in use, the Web site needs to be part of the larger Visual Studio Solution. The Web site is pure client side code, with the API and service projects that the Angular app calls living in completely separate projects. Since I'm modifying files external to Visual Studio a WebSite Project seems like the only reasonable choice.

WebSite Projects Experience

When I created the WebSite Project and added it into the larger Visual Studio solution, I found out quickly how terrible the support for client projects is in that configuration.

I ran into two major issues:

- Extremely slow load time as VS parses through 20,000 NPM files

- TFS Errors due to funky filenames (files starting with

$.)

Initial load of the project took about 5 minutes while Visual Studio white screened and only eventually returned. When it came back I got a ton of TFS errors for $. files - about 100 of them that I had to CR through.

It's quite remarkable that Microsoft hasn't addressed such a common scenario. Eventually I end up with the node_modules folder in the project.

But - refreshing the folder, or re-opening the solution goes right back to the slow load time and then those very same errors. Seriously???

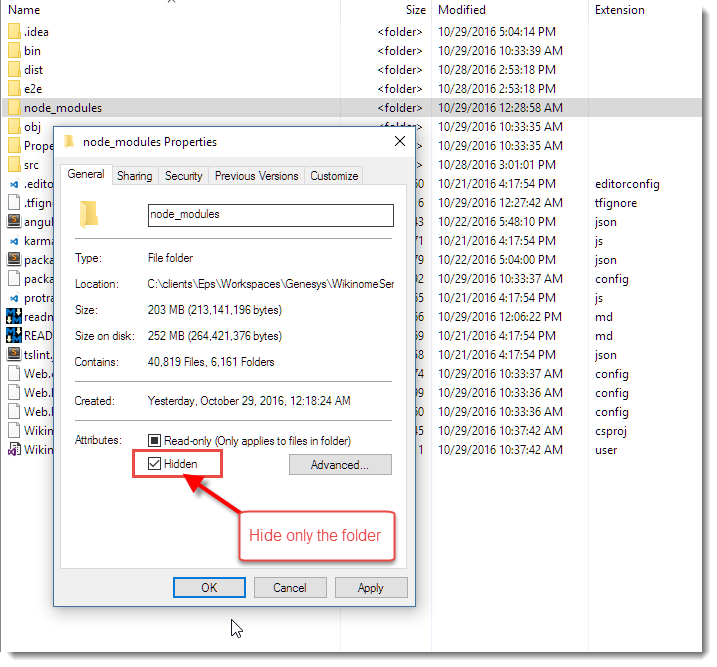

Solution: Make node_modules Hidden

The solution to provide a reasonable experience is to mark the node_modules folder as a hidden folder. This effectively hides the folder from Visual Studio and it ignores it in the Solution Explorer.

You can set the hidden attribute on the folder only - no need to set it on all the child items. NPM continues to work with the hidden folder, so there appears to be no ill effect for actual package access.

Once I did this the node_modules folder is no longer included in the project and load times are fast:

Yay!

For source control and TFS, I also had to add a .tfignore with:

\node_modulesto ensure that source control also ignores the folder - it doesn't seem to mind the hidden folder and otherwise would still try to add it. Hiding the folder also prevents Web Deploy from publishing the files.

So, if you must use WebSite Projects, hiding the node_modules folder is the way to go.

I can't take credit for this - I found this as part of a few StackOverFlow posts - but the solution is not clearly identified or searchable, so hopefully this post will make that a little easier to find.

Looking Forward: Visual Studio 15 (VS2017?)

The next version of Visual Studio - version 15 apparently will introduce a new Web project model that's supposed to be a hybrid between Web Applications and WebSite projects.

There is a project file, but files by default add without explicit adding using an exclusion template. This seems like a pretty obvious solution - shame it took well over 10 years for Visual Studio to figure that one out especially since just about any other Web development tool operates that way.

I haven't played with Visual Studio 15 yet, but I really hope this will be a workable scenario going forward. Visual Studio really needs to have an easier way to deal with free form file based sites.

C# NumberFormat Sections

Windows Update Hell

Once again I'm in Windows Update hell. It's not the first time I've arrived here, but this time around it's a special kind of hell because of Microsoft's misguided policy on update management that makes it damn near impossible to opt out of updates.

The problem

Some time ago - prior to release of the Windows 10 Aniversary update - I was signed up to the Insiders Program because I wanted to play with some of the new features that came in AU. Specifically the integrated Bash shell and some of the IIS improvements. All went well at the time and when the final of AU rolled around I turned off receiving of Insiders builds to get back onto a stable machine that doesn't update every couple of weeks. Life was back to normal.

About a month ago however things started going sideways. Updates started to fail starting with KB3194798 and then later with a newer update KB3197954. In both cases the updates start installing, get to 96% and then:

All in all this takes 3 restarts to get through.

@#!!@ Daily Update Retries

This is bad enough, but it gets worse: The updates continue coming daily wanting to update EVERY day installing the same failed update again and again.

Due to Windows 10's new policy of an update schedule that checks daily for updates these failed updates - and their 3 restarts - fire every day, without prompting or asking. They just shut down Windows (or wake it up from sleep) in the middle of whatever is happening even if the computer is off. The result is when I return from dinner each night the machine is rebooted. This is made worse for me as I dual boot into the Mac so the updates don't automatically go through their reboot dance.

Check out this fucked up update log:

You'd think the updater might be smart enough to figure out after a couple of failed update attempts that this update isn't going to work. But no that would be too simple...

I also continually see this dialog:

It says there's an update pending even though I've previously - unsuccessfully - installed this update just minutes before. So Windows failed to install an update and immediately decides it wants to immediately install the update AGAIN.

The real kicker however is that I can't turn the updates off.

There's a Microsoft Utility that you can download to explicitly keep certain updates from installing. I did this with an earlier install and it worked with KB3194798, but doesn't with KB3197954 which never shows up on the list of updates to install. So that's out.

What I tried

A lot of people had problems with KB3194798 and there were a lot of workarounds floating around for this one. I tried all of them and had no luck.

I'm on

Windows 10 (1607 14393.187)

Use the Windows Update TroubleShooter

This thing says it found things to fix every time it runs:

but in the end this has no effect and the updates continue to fail.

Remove XBox Scheduled Tasks

Apparently with the original failing update many people reported that the issue was related to a XBox related scheduled task that wasn't shutting down properly and couldn't update. I first disabled, then removed those tasks (I don't use XBox - WTH is this even here, much less screwing with a Windows update?). Microsoft had originally released Windows 10 1607 Script fix to unblock update for Windows Insiders to address this. I ran this but again - no luck.

Offline Install

Finally I downloaded and installed the initial update completely offline. I:

- Disconnected all devices from the machine

- Shutdown network connections

- Ran the update install locally

Didn't work either.

Hide Updates

When I originally started having problems with KB3197954 I was able to use the Windows Show Hide Update Utility from Microsoft.

But with KB3197954 that update doesn't even show up in there so I can't hide it.

- ??? I'm fresh of ideas at this point, short of paving the machine.

Who you're gonna call?

What's really frustrating about this is that there seems to be no good online resources you can turn to for these type of issues. The answers I did find tended to be scattered all over the place from ServerFault to Microsoft forums (with some really lame Microsoft employee responses - anything useful came from other users) to obscure forums. There was lots of interesting information that was overall helpful but unfortunately not what it took to resolve my issue.

At this point I'm at a loss. I'm not totally adverse to reinstalling - this Windows install pre-dates the initial Windows 10 release, but it's otherwise stable and still quite fast (ie. not Window bit rot that I notice) so I would rather avoid it.

Anybody out there have any other suggestions on what else to look at?

Sad, Sad, Sad

All I can say is that this sad - really sad. Updates have always been a pain, and failed updates are a reality of life in general. Before those Mac heads go on and say - get a Mac (this IS on a Mac) I've had a similar FAIL on the OS X with the Yosemite update a year or so back that would not want to install and required a repave as well.

Failed Updates are OK - but retrying failures over and over again without a way to turn off the pain is definitely not.

Doing the same failed thing over again, is... Insanity

But continuing to fail repeatedly, doing the same failed update over and over is just plain dumb. With all of this machine logic Microsoft talks about day in and day out, maybe they should spend of that common sense to the Windows Update engine and have some rudimentary logic that can figure out a basic failure pattern and stop making life miserable.

Tell us what the problem is!

Worst of all is that there are no good troubleshooting tools. Why isn't there a link somewhere that points at a log when an update fails. Or hey, how about an error message that actually says something useful like - hey your video driver is locked or we can't connect to the registration server - anything - instead of "We couldn't complete the updates - now buzz off". I get that you don't want to scare non-technical folks, but a link a small link that allows getting at that info would be freaking glorious compared to this insane opacity.

Windows update logs aren't the right answer here either because those can be near impossible to parse for a non-Microsoft person, but heck the installer has to know what the last thing it was working on that failed. This isn't rocket science.

Ok I'm done ranting :-)

Post created with Markdown MonsterIntroducing Markdown Monster - a new Markdown Editor

I'm happy to announce that Markdown Monster 1.0 RTM is now available. Yay!

If you just want to check it out here are links for the download and more info:

- Markdown Monster Site

- Markdown Monster Download

- Markdown Monster on Chocolatey

- Markdown Monster on GitHub

Getting Started Video

A couple of weeks ago I created a Getting Started video that goes over most of Markdown Monster's features. You can find it on YouTube:

What's Markdown Monster?

Markdown Monster is an easy to use and attractive Markdown Editor and Viewer as well as a Weblog Publishing tool. The editor sports syntax colored editing of Markdown text, inline spell checking, an optional live and themable HTML preview, easy embedding of screen shots, images and links, along with a few gentle toolbar helpers to facilitate embedding content into your markdown. The editor's goal is to let you focus on your content creation and not get in your way of your creativity.

There are many small features to make working with Markdown easier: You can import HTML into Markdown from files or the clipboard, export Markdown to HTML files on disk or the clipboard, and quickly access a document's folder in Explorer or a Windows Console Window.

You can also publish your Markdown directly to your Weblog if it supports WordPress or MetaWebLog endpoints. You can manage multiple blogs and even download existing posts as Markdown.

Why another Markdown Editor

So you might ask, Why another Markdown Editor? After all there are several of them already out there and Markdown editing isn't exactly a sexy thing.

Markdown Monster provides all the features you'd expect from a Markdown editor:

- Syntax Colored Markdown Editing

- Fast text editing using a code editor

- Live Markdown Preview

- Inline Spellchecking and Correction

- Minimal Toolbar/menu support

- Support for fenced code blocks

- Support for many advanced Markdown features (coutesy of MarkDig)

but it also provides a number of additional features that I think are rather useful and important:

- Capturing and Embedding Screen Shots

- Quick embedding of Images and Links

- Easily customizable Preview Themes

- Themeable Editor

- HTML to Markdown conversion

- Built-in Weblog Publishing

- Add-in Interface to allow extensions

Extensibility

The last point of extensibility is important to me and a couple of Markdown Monster's key features - the Screen Capture and Weblog Publishing modules - are actually implemented as add-ins. The add-in extensibility allows you to create custom extensions that can hook into the editing and document life cycle so you can embed custom content into posts and perform custom tasks on the document.

For example, I have a custom add-in that I use to download and publish inventory item information to my Web store using a custom API wich makes product editing a lot easier than the online textbox typically used. Other ideas include a snippet manager for custom Markdown Snippets to inject or a Git commit plug-in that can quickly commit and push changes to a git repository, which is useful for Git based documentation or blog systems.

Whether people will take advantage of the .NET Extensibility is another thing, but I know I already have and will continue to benefit from this extensibility and keep the core version of Markdown Monster relatively small.

All Things Markdown

I use Markdown for a lot of things these days:

- Source Code Repositories

- Documentation in my Help Builder tool

- On my Support Message Board

- Creating Blog Posts

- General purpose Document editing

- Application integration for any Free Form text

- Support for maximum Markdown Features

In short Markdown for me is just about everywhere. It's amazing how quickly it has gone from being something I used for Git repo docs to becoming something I use in just about all aspects of development and daily work.

I started Markdown Monster off as a small pet project for myself a little over a half year ago when another editor I was using started failing. It quickly grew from there as I shared the initial editor with a few people I worked with and they started asking for a few additional features and it quickly grew more serious from there. I decided to make this tool something to be proud of and something that I would love to use, and for me at least that's been absolutely true. I practically live in the editor these days - mainly for documentation and blog editing.

A half a year later and a lot of effort later, I'm really happy that I went down this path because I ended up with (IMHO) much more usable editor than what I'd found before, and an editor that addresses many of the missing features that I - and most likely many of you - care about to make my editing experience quick and easy.

Open Source, but licensed

Markdown Monster is open source with code out in the open on GitHub, but the software itself is licensed and requires registration for continued use. The download is fully functional, except for the occasional nag screen.

A few Screenshots and Feature Overview

The best way to see Markdown Monster is just to try it out, but if you want to quickly get a feel for it here are a few screenshots and some commentary on features.

Main Editor

Here's what the main workarea of Markdown Monster looks like:

The main area is made of the edit area on the left, and the preview on the right. The preview is optional and can be collapsed using the World button at the of the window.

Theming for Preview and Editor

Both the preview window and the editor can be themed using the two dropdowns on the status bar on the right. The default editor theme is the dark twilight theme and Github for the preview. Several other themes are available and you can easily create new themes that match your preferences. Preview theme customization can be very useful to match your site's styling especially when posting Weblog posts.

Here's the MM with the visualstudio editor theme and blackout preview theme:

Syntax Colored Markdown and Code

The editor uses syntax colored highlighting for markdown text that highlights things like bold text, headers, lists, html and code in different colors than main body text.

Certain types of code - HTML, JavaScript, CSS, XML, JSON - also show as syntax colored text inside of fenced code blocks.

Inline Spell Checking

The editor also supports indline spell checking which provides suggestions. The editor supports different languages and you can add any Open Office style dictionary for other languages that are not provided as shipped(en-US,es-ES,de-DE,fr-FR). If there are words that you don't want highlighted you can add them to a custom dictionary that will be used when editing.

Multiple Documents

You can have multiple editor windows open at the same time and switch between them. Markdown Monster can optionally remember open documents and re-load them when it restarts.

Gentle Toolbar support

The editor can use toolbar and menu options to inject Markdown markup into the document, but that's of course optional. However, operations like link and image embedding provide some smart features like preloading URLs from the clipboard and for images optionally copying images to the document folder if loaded from a different location.

Tab Operations

Tabs show you the active document name, including the full filename on hover. Right clicking on a document tab brings up a context menu that allows you to open the document's folder in Explorer or open a Terminal window.

These are useful for quickly editing images, or for using the command line to do things like make a commit to Git.

Screen Captures

You can easily capture screen shots with Markdown Monster using either a built-in minimalistic capture utility that allows you to capture windows, or using the popular SnagIt utility from Techsmith.

Here's what the built-in screen capture looks like:

The built-in tool lets you select Windows or Window areas to capture and then displays them in a preview window. Using this capture window you can also capture the active desktop, or an image from the clipboard.

Using SnagIt offers some additional features, like the abililty to choose the capture mechanism for free form selection captures or Window captures and you direct access to the Editor and the various image tools and effects you can apply to the captured image.

SnagIt 13 Issues

SnagIt 13 currently doesn't work properly with Markdown Monster due to a bug in SnagIt's COM interface - this will be fixed by Techsmith in a future update of SnagIt 13)

Weblog Publishing

One important feature for me is the ability to take my Markdown and publish it to my blog. Markdown is ultimately turned into HTML and you can use Markdown Monster to create your blog entry text in Markdown and it can publish the document to your Weblog that uses MetaWeblog or Wordpress APIs.

When you're done editing your Markdown text, you can simply click on the blog icon and provide some basic publish info for your entry:

You first need to set up your blog, providing the publish endpoint, username and password and name for the blog. Once configured it will show up in the list.

Weblog posts can be re-posted multiple times as the meta data associated with a post is stored as part of the Markdown at the end of the document.

You can also download existing blog posts and edit them in Markdown Monster. You can use the Weblog post browser to download and search for specific posts to download and edit, and then post them back to the site.

Note that Markdown Monster will try to parse the HTML from the existing Weblog post into Markdown, which - depending on the formatting of the HTML - may or may not result in clean Markdown for you to edit. HTML that is simple and uses simple document formatting has a good chance of getting translated, but if the HTML is full of custom tags and inline HTML markup, this HTML will show up as HTML in the document. Your mileage may vary for this feature.

Feedback

If you try out Markdown Monster and there's a problem, or you're using it and you think there's a common use case that you'd like to see, please, please let us know. We had a lengthy beta/RC period but unfortunately very little feedback in that cycle and I want to make sure that any issues are addressed.

You can post any bugs or feature suggestions on GitHub in the Issue section or you can start a more open ended discussion on our message board.

Let Markdown Monster Eat your Markdown!

Markdown Monster has become an integral part of my toolset and I use it constantly for a lot of different writing tasks. In fact, I'm writing this blog post with it right now.

Give Markdown Monster a try and see if you can't improve your writing productivity with some of its helpful features and functionality.

Chomp!

.NET Standard 2.0 - Making Sense of .NET Again

At last week's .NET Connect event and two-weeks ago at the MVP Summit we got to hear about Microsoft's vision for .NET going forward. A lot of people - myself included - in recent years have wondered what the future of .NET is.

A key component in this process is .NET Standard...

It's taken Microsoft a few years of floundering and unclear messaging about the future of .NET, but it seems Microsoft is finally nailing the message for .NET going forward and .NET Standard, with its common API specification, is a huge part in making sure that the same base library functionality of .NET is available on all .NET platforms in the future.

In this post I look at what .NET Standard is, how it works and what some of the surrounding issues, impacts and benefits are for the .NET Eco system.

What is .NET Standard?

Here's my definition of what .NET Standard is:

.NET Standard is a specification, not an implementation

.NET Standard describes what a specific implementation like .NET Core, Mono, Xamarin or .NET 4.6 has to implement - at minimum - in terms of API surface in order to be compliant with a given version of .NET Standard.

The actual implementations of today's shipped .NET Standard 1.6 are .NET Core, the full .NET Framework and Mono. The current version of the standard is .NET Standard 1.6 which shipped when .NET Core 1.0 was released. Implementations can implement additional features beyond .NET Standard in their base libraries but at minimum each implementation has to implement the standard APIs of the Standard even if specific APIs end up with NotSupportedExceptions.

.NET Core as a Reference Implementation

As you might expect the API surface of .NET Standard 1.6 coincides pretty closely with the API surface of .NET Core 1.0.x and I expect that .NET Core 1.2 (or whatever the next version will be named) is likely to match very closely to what .NET Standard 2.0 specifies. In that sense it's almost like .NET Core is the reference implementation for .NET Standard at the moment. That may change in the future, but for now that's certainly holding true.

Since .NET Standard is based on full framework APIs, full framework is pretty much compatible with .NET Standard without changes, although there are a few small variations that are addressed by small update releases in .NET 4.6.1 and 4.6.2 etc.

.NET Standard corresponds to the BCL

If you're looking at .NET Standard in terms of the full version of .NET you've used for the last 15+ years, you can think of .NET Standard roughly representing the feature set of the BCL. This is the core .NET library of what used to live exclusively in mscorlib.dll and the various system dlls.

This roughly corresponds to the core .NET Base Class Library (BCL) and includes the basic type system, the runtime loading and querying operations, network and file IO, and some additional APIs like System.Data. With .NET going cross platform it's important to know that this subset has to be platform agnostic and not expect to run on any specific Operating System.

Here's a rough idea of what you can expect to see in .NET Standard 2.0:

The BCL always has been about core operating system, runtime and language services, so this OS agnostic requirement shouldn't be a huge issue. Much of the porting work that goes into moving full framework libraries to .NET Core deals with making sure that functionality works across platforms. There are lots of small issues that can trip up things like the difference in path separators between Operating Systems and the way that operating system services like threads/processes are implemented on various platforms.

Application Frameworks are not part of .NET Standard

The key thing to remember is that if you compare the full .NET Framework to what will be in .NET Standard you are only getting what is in the BCL, not what we know as the Framework Class Library (FCL). Full framework as we know it today has additional features piled on top with the FCL Libraries that sit on top of the BCL. Frameworks in this case are application platforms like ASP.NET (System.Web and OWin based), WinForms, WPF, WCF etc. For .NET Core ASP.NET 5 is an Application Framework that lives ontop of .NET Standard. These libraries are not part of .NET Standard, but they are implemented on top of it.

.NET Standard Versions

The current shipped version of .NET Standard is 1.6 and it roughly coincides with the feature set of .NET Core 1.0/1.1. The feature set in this version is fairly limited and significantly less featured than the full version of .NET and that has caused a lot grumbling in the community. Some of the missing APIs seem really arbitrary often removing overloads or extended features that just end up creating refactoring or code bracketing work.

Because many APIs and features are missing, it's been difficult to port existing libraries to .NET Core/.NET Standard as many basic features are missing. While the missing APIs can often be worked around, it's a not a trivial task to hunt down all the missing APIs in a large applications and adjust code using code bracketing (#if/#else blocks) to potentially run both in .NET Standard and full Framework applications.

Worse - knowing that .NET Standard 2.0 is on the horizon, it's very likely that those workarounds and adjustments won't be necessary in the future, so it's hard to justify putting in the effort to port now. It's no surprise that many library authors - including myself - are holding off and waiting for more API surface to port libraries to .NET Standard/.NET Core.