As of version 4.0 ASP.NET natively supports routing via the now built-in System.Web.Routing namespace. Routing features are automatically integrated into the HtttpRuntime via a few custom interfaces.

New Web Forms Routing Support

In ASP.NET 4.0 there are a host of improvements including routing support baked into Web Forms via a RouteData property available on the Page class and RouteCollection.MapPageRoute() route handler that makes it easy to route to Web forms.

To map ASP.NET Page routes is as simple as setting up the routes with MapPageRoute:

protected void Application_Start(object sender, EventArgs e) { RegisterRoutes(RouteTable.Routes); } void RegisterRoutes(RouteCollection routes) { routes.MapPageRoute("StockQuote", "StockQuote/{symbol}", "StockQuote.aspx"); routes.MapPageRoute("StockQuotes", "StockQuotes/{symbolList}", "StockQuotes.aspx"); }

and then accessing the route data in the page you can then use the new Page class RouteData property to retrieve the dynamic route data information:

public partial class StockQuote1 : System.Web.UI.Page { protected StockQuote Quote = null; protected void Page_Load(object sender, EventArgs e) { string symbol = RouteData.Values["symbol"] as string; StockServer server = new StockServer(); Quote = server.GetStockQuote(symbol); // display stock data in Page View } }

Simple, quick and doesn’t require much explanation. If you’re using WebForms most of your routing needs should be served just fine by this simple mechanism. Kudos to the ASP.NET team for putting this in the box and making it easy!

How Routing Works

To handle Routing in ASP.NET involves these steps:

- Registering Routes

- Creating a custom RouteHandler to retrieve an HttpHandler

- Attaching RouteData to your HttpHandler

- Picking up Route Information in your Request code

Registering routes makes ASP.NET aware of the Routes you want to handle via the static RouteTable.Routes collection. You basically add routes to this collection to let ASP.NET know which URL patterns it should watch for. You typically hook up routes off a RegisterRoutes method that fires in Application_Start as I did in the example above to ensure routes are added only once when the application first starts up. When you create a route, you pass in a RouteHandler instance which ASP.NET caches and reuses as routes are matched.

Once registered ASP.NET monitors the routes and if a match is found just prior to the HttpHandler instantiation, ASP.NET uses the RouteHandler registered for the route and calls GetHandler() on it to retrieve an HttpHandler instance. The RouteHandler.GetHandler() method is responsible for creating an instance of an HttpHandler that is to handle the request and – if necessary – to assign any additional custom data to the handler.

At minimum you probably want to pass the RouteData to the handler so the handler can identify the request based on the route data available. To do this you typically add a RouteData property to your handler and then assign the property from the RouteHandlers request context. This is essentially how Page.RouteData comes into being and this approach should work well for any custom handler implementation that requires RouteData.

It’s a shame that ASP.NET doesn’t have a top level intrinsic object that’s accessible off the HttpContext object to provide route data more generically, but since RouteData is directly tied to HttpHandlers and not all handlers support it it might cause some confusion of when it’s actually available. Bottom line is that if you want to hold on to RouteData you have to assign it to a custom property of the handler or else pass it to the handler via Context.Items[] object that can be retrieved on an as needed basis.

It’s important to understand that routing is hooked up via RouteHandlers that are responsible for loading HttpHandler instances. RouteHandlers are invoked for every request that matches a route and through this RouteHandler instance the Handler gains access to the current RouteData. Because of this logic it’s important to understand that Routing is really tied to HttpHandlers and not available prior to handler instantiation, which is pretty late in the HttpRuntime’s request pipeline. IOW, Routing works with Handlers but not with earlier in the pipeline within Modules.

Specifically ASP.NET calls RouteHandler.GetHandler() from the PostResolveRequestCache HttpRuntime pipeline event. Here’s the call stack at the beginning of the GetHandler() call:

which fires just before handler resolution.

Non-Page Routing – You need to build custom RouteHandlers

If you need to route to a custom Http Handler or other non-Page (and non-MVC) endpoint in the HttpRuntime, there is no generic mapping support available. You need to create a custom RouteHandler that can manage creating an instance of an HttpHandler that is fired in response to a routed request. Depending on what you are doing this process can be simple or fairly involved as your code is responsible based on the route data provided which handler to instantiate, and more importantly how to pass the route data on to the Handler.

Luckily creating a RouteHandler is easy by implementing the IRouteHandler interface which has only a single GetHttpHandler(RequestContext context) method. In this method you can pick up the requestContext.RouteData, instantiate the HttpHandler of choice, and assign the RouteData to it. Then pass back the handler and you’re done.

Here’s a simple example of GetHttpHandler() method that dynamically creates a handler based on a passed in Handler type.

/// <summary> /// Retrieves an Http Handler based on the type specified in the constructor /// </summary> /// <param name="requestContext"></param> /// <returns></returns> IHttpHandler IRouteHandler.GetHttpHandler(RequestContext requestContext) { IHttpHandler handler = Activator.CreateInstance(CallbackHandlerType) as IHttpHandler; // If we're dealing with a Callback Handler // pass the RouteData for this route to the Handler if (handler is CallbackHandler) ((CallbackHandler)handler).RouteData = requestContext.RouteData; return handler; }

Note that this code checks for a specific type of handler and if it matches assigns the RouteData to this handler. This is optional but quite a common scenario if you want to work with RouteData.

If the handler you need to instantiate isn’t under your control but you still need to pass RouteData to Handler code, an alternative is to pass the RouteData via the HttpContext.Items collection:

IHttpHandler IRouteHandler.GetHttpHandler(RequestContext requestContext) { IHttpHandler handler = Activator.CreateInstance(CallbackHandlerType) as IHttpHandler; requestContext.HttpContext.Items["RouteData"] = requestContext.RouteData; return handler; }

The code in the handler implementation can then pick up the RouteData from the context collection as needed:

RouteData routeData = HttpContext.Current.Items["RouteData"] as RouteData

This isn’t as clean as having an explicit RouteData property, but it does have the advantage that the route data is visible anywhere in the Handler’s code chain. It’s definitely preferable to create a custom property on your handler, but the Context work-around works in a pinch when you don’t’ own the handler code and have dynamic code executing as part of the handler execution.

An Example of a Custom RouteHandler: Attribute Based Route Implementation

In this post I’m going to discuss a custom routine implementation I built for my CallbackHandler class in the West Wind Web & Ajax Toolkit. CallbackHandler can be very easily used for creating AJAX, REST and POX requests following RPC style method mapping. You can pass parameters via URL query string, POST data or raw data structures, and you can retrieve results as JSON, XML or raw string/binary data. It’s a quick and easy way to build service interfaces with no fuss.

As a quick review here’s how CallbackHandler works:

- You create an Http Handler that derives from CallbackHandler

- You implement methods that have a [CallbackMethod] Attribute

and that’s it. Here’s an example of an CallbackHandler implementation in an ashx.cs based handler:

// RestService.ashx.cs

public class RestService : CallbackHandler { [CallbackMethod] public StockQuote GetStockQuote(string symbol) { StockServer server = new StockServer(); return server.GetStockQuote(symbol); } [CallbackMethod] public StockQuote[] GetStockQuotes(string symbolList) { StockServer server = new StockServer(); string[] symbols = symbolList.Split(new char[2] { ',',';' },StringSplitOptions.RemoveEmptyEntries); return server.GetStockQuotes(symbols); } }

CallbackHandler makes it super easy to create a method on the server, pass data to it via POST, QueryString or raw JSON/XML data, and then retrieve the results easily back in various formats. This works wonderful and I’ve used these tools in many projects for myself and with clients. But one thing missing has been the ability to create clean URLs.

Typical URLs looked like this:

http://www.west-wind.com/WestwindWebToolkit/samples/Rest/StockService.ashx?Method=GetStockQuote&symbol=msft

http://www.west-wind.com/WestwindWebToolkit/samples/Rest/StockService.ashx?Method=GetStockQuotes&symbolList=msft,intc,gld,slw,mwe&format=xml

which works and is clear enough, but also clearly very ugly. It would be much nicer if URLs could look like this:

http://www.west-wind.com//WestwindWebtoolkit/Samples/StockQuote/msft

http://www.west-wind.com/WestwindWebtoolkit/Samples/StockQuotes/msft,intc,gld,slw?format=xml

(the Virtual Root in this sample is WestWindWebToolkit/Samples and StockQuote/{symbol} is the route)

(If you use FireFox try using the JSONView plug-in make it easier to view JSON content)

So, taking a clue from the WCF REST tools that use RouteUrls I set out to create a way to specify RouteUrls for each of the endpoints. The change made basically allows changing the above to:

[CallbackMethod(RouteUrl="RestService/StockQuote/{symbol}")] public StockQuote GetStockQuote(string symbol) { StockServer server = new StockServer(); return server.GetStockQuote(symbol); } [CallbackMethod(RouteUrl = "RestService/StockQuotes/{symbolList}")] public StockQuote[] GetStockQuotes(string symbolList) { StockServer server = new StockServer(); string[] symbols = symbolList.Split(new char[2] { ',',';' },StringSplitOptions.RemoveEmptyEntries); return server.GetStockQuotes(symbols); }

where a RouteUrl is specified as part of the Callback attribute. And with the changes made with RouteUrls I can now get URLs like the second set shown earlier.

So how does that work? Let’s find out…

How to Create Custom Routes

As mentioned earlier Routing is made up of several steps:

- Creating a custom RouteHandler to create HttpHandler instances

- Mapping the actual Routes to the RouteHandler

- Retrieving the RouteData and actually doing something useful with it in the HttpHandler

In the CallbackHandler routing example above this works out to something like this:

- Create a custom RouteHandler that includes a property to track the method to call

- Set up the routes using Reflection against the class Looking for any RouteUrls in the CallbackMethod attribute

- Add a RouteData property to the CallbackHandler so we can access the RouteData in the code of the handler

Creating a Custom Route Handler

To make the above work I created a custom RouteHandler class that includes the actual IRouteHandler implementation as well as a generic and static method to automatically register all routes marked with the [CallbackMethod(RouteUrl="…")] attribute.

Here’s the code:

/// <summary> /// Route handler that can create instances of CallbackHandler derived /// callback classes. The route handler tracks the method name and /// creates an instance of the service in a predictable manner /// </summary> /// <typeparam name="TCallbackHandler">CallbackHandler type</typeparam> public class CallbackHandlerRouteHandler : IRouteHandler { /// <summary> /// Method name that is to be called on this route. /// Set by the automatically generated RegisterRoutes /// invokation. /// </summary> public string MethodName { get; set; } /// <summary> /// The type of the handler we're going to instantiate. /// Needed so we can semi-generically instantiate the /// handler and call the method on it. /// </summary> public Type CallbackHandlerType { get; set; } /// <summary> /// Constructor to pass in the two required components we /// need to create an instance of our handler. /// </summary> /// <param name="methodName"></param> /// <param name="callbackHandlerType"></param> public CallbackHandlerRouteHandler(string methodName, Type callbackHandlerType) { MethodName = methodName; CallbackHandlerType = callbackHandlerType; } /// <summary> /// Retrieves an Http Handler based on the type specified in the constructor /// </summary> /// <param name="requestContext"></param> /// <returns></returns> IHttpHandler IRouteHandler.GetHttpHandler(RequestContext requestContext) { IHttpHandler handler = Activator.CreateInstance(CallbackHandlerType) as IHttpHandler; // If we're dealing with a Callback Handler // pass the RouteData for this route to the Handler if (handler is CallbackHandler) ((CallbackHandler)handler).RouteData = requestContext.RouteData; return handler; } /// <summary> /// Generic method to register all routes from a CallbackHandler /// that have RouteUrls defined on the [CallbackMethod] attribute /// </summary> /// <typeparam name="TCallbackHandler">CallbackHandler Type</typeparam> /// <param name="routes"></param> public static void RegisterRoutes<TCallbackHandler>(RouteCollection routes) { // find all methods var methods = typeof(TCallbackHandler).GetMethods(BindingFlags.Instance | BindingFlags.Public); foreach (var method in methods) { var attrs = method.GetCustomAttributes(typeof(CallbackMethodAttribute), false); if (attrs.Length < 1) continue; CallbackMethodAttribute attr = attrs[0] as CallbackMethodAttribute; if (string.IsNullOrEmpty(attr.RouteUrl)) continue; // Add the route routes.Add(method.Name, new Route(attr.RouteUrl, new CallbackHandlerRouteHandler(method.Name, typeof(TCallbackHandler)))); } } }

The RouteHandler implements IRouteHandler, and its responsibility via the GetHandler method is to create an HttpHandler based on the route data.

When ASP.NET calls GetHandler it passes a requestContext parameter which includes a requestContext.RouteData property. This parameter holds the current request’s route data as well as an instance of the current RouteHandler. If you look at GetHttpHandler() you can see that the code creates an instance of the handler we are interested in and then sets the RouteData property on the handler. This is how you can pass the current request’s RouteData to the handler.

The RouteData object also has a RouteData.RouteHandler property that is also available to the Handler later, which is useful in order to get additional information about the current route. In our case here the RouteHandler includes a MethodName property that identifies the method to execute in the handler since that value no longer comes from the URL so we need to figure out the method name some other way. The method name is mapped explicitly when the RouteHandler is created and here the static method that auto-registers all CallbackMethods with RouteUrls sets the method name when it creates the routes while reflecting over the methods (more on this in a minute). The important point here is that you can attach additional properties to the RouteHandler and you can then later access the RouteHandler and its properties later in the Handler to pick up these custom values. This is a crucial feature in that the RouteHandler serves in passing additional context to the handler so it knows what actions to perform.

The automatic route registration is handled by the static RegisterRoutes<TCallbackHandler> method. This method is generic and totally reusable for any CallbackHandler type handler.

To register a CallbackHandler and any RouteUrls it has defined you simple use code like this in Application_Start (or other application startup code):

protected void Application_Start(object sender, EventArgs e) { // Register Routes for RestService CallbackHandlerRouteHandler.RegisterRoutes<RestService>(RouteTable.Routes); }

If you have multiple CallbackHandler style services you can make multiple calls to RegisterRoutes for each of the service types. RegisterRoutes internally uses reflection to run through all the methods of the Handler, looking for CallbackMethod attributes and whether a RouteUrl is specified. If it is a new instance of a CallbackHandlerRouteHandler is created and the name of the method and the type are set.

routes.Add(method.Name,

new Route(attr.RouteUrl, new CallbackHandlerRouteHandler(method.Name, typeof(TCallbackHandler) )) );

While the routing with CallbackHandlerRouteHandler is set up automatically for all methods that use the RouteUrl attribute, you can also use code to hook up those routes manually and skip using the attribute. The code for this is straightforward and just requires that you manually map each individual route to each method you want a routed:

protected void Application_Start(objectsender, EventArgs e)

{

RegisterRoutes(RouteTable.Routes);

}

void RegisterRoutes(RouteCollection routes)

{ routes.Add("StockQuote Route",new Route("StockQuote/{symbol}",

new CallbackHandlerRouteHandler("GetStockQuote",typeof(RestService) ) ) );

routes.Add("StockQuotes Route",new Route("StockQuotes/{symbolList}",

new CallbackHandlerRouteHandler("GetStockQuotes",typeof(RestService) ) ) );

}

I think it’s clearly easier to have CallbackHandlerRouteHandler.RegisterRoutes() do this automatically for you based on RouteUrl attributes, but some people have a real aversion to attaching logic via attributes. Just realize that the option to manually create your routes is available as well.

Using the RouteData in the Handler

A RouteHandler’s responsibility is to create an HttpHandler and as mentioned earlier, natively IHttpHandler doesn’t have any support for RouteData. In order to utilize RouteData in your handler code you have to pass the RouteData to the handler.

In my CallbackHandlerRouteHandler when it creates the HttpHandler instance it creates the instance and then assigns the custom RouteData property on the handler:

IHttpHandler handler = Activator.CreateInstance(CallbackHandlerType) as IHttpHandler; if (handler is CallbackHandler) ((CallbackHandler)handler).RouteData = requestContext.RouteData; return handler;

Again this only works if you actually add a RouteData property to your handler explicitly as I did in my CallbackHandler implementation:

/// <summary> /// Optionally store RouteData on this handler /// so we can access it internally /// </summary> public RouteData RouteData {get; set; }

and the RouteHandler needs to set it when it creates the handler instance.

Once you have the route data in your handler you can access Route Keys and Values and also the RouteHandler. Since my RouteHandler has a custom property for the MethodName to retrieve it from within the handler I can do something like this now to retrieve the MethodName (this example is actually not in the handler but target is an instance pass to the processor):

// check for Route Data method name if (target is CallbackHandler) { var routeData = ((CallbackHandler)target).RouteData; if (routeData != null) methodToCall = ((CallbackHandlerRouteHandler)routeData.RouteHandler).MethodName; }

When I need to access the dynamic values in the route ( symbol in StockQuote/{symbol}) I can retrieve it easily with the Values collection (RouteData.Values["symbol"]). In my CallbackHandler processing logic I’m basically looking for matching parameter names to Route parameters:

// look for parameters in the route

if(routeData != null)

{

string parmString = routeData.Values[parameter.Name] as string;

adjustedParms[parmCounter] = ReflectionUtils.StringToTypedValue(parmString, parameter.ParameterType);

}

And with that we’ve come full circle. We’ve created a custom RouteHandler() that passes the RouteData to the handler it creates. We’ve registered our routes to use the RouteHandler, and we’ve utilized the route data in our handler.

For completeness sake here’s the routine that executes a method call based on the parameters passed in and one of the options is to retrieve the inbound parameters off RouteData (as well as from POST data or QueryString parameters):

internal object ExecuteMethod(string method, object target, string[] parameters,

CallbackMethodParameterType paramType,

ref CallbackMethodAttribute callbackMethodAttribute) { HttpRequest Request = HttpContext.Current.Request; object Result = null; // Stores parsed parameters (from string JSON or QUeryString Values) object[] adjustedParms = null; Type PageType = target.GetType(); MethodInfo MI = PageType.GetMethod(method, BindingFlags.Instance | BindingFlags.Public | BindingFlags.NonPublic); if (MI == null) throw new InvalidOperationException("Invalid Server Method."); object[] methods = MI.GetCustomAttributes(typeof(CallbackMethodAttribute), false); if (methods.Length < 1) throw new InvalidOperationException("Server method is not accessible due to missing CallbackMethod attribute"); if (callbackMethodAttribute != null) callbackMethodAttribute = methods[0] as CallbackMethodAttribute; ParameterInfo[] parms = MI.GetParameters(); JSONSerializer serializer = new JSONSerializer(); RouteData routeData = null; if (target is CallbackHandler) routeData = ((CallbackHandler)target).RouteData; int parmCounter = 0; adjustedParms = new object[parms.Length]; foreach (ParameterInfo parameter in parms) { // Retrieve parameters out of QueryString or POST buffer if (parameters == null) { // look for parameters in the route if (routeData != null) { string parmString = routeData.Values[parameter.Name] as string; adjustedParms[parmCounter] = ReflectionUtils.StringToTypedValue(parmString, parameter.ParameterType); } // GET parameter are parsed as plain string values - no JSON encoding else if (HttpContext.Current.Request.HttpMethod == "GET") { // Look up the parameter by name string parmString = Request.QueryString[parameter.Name]; adjustedParms[parmCounter] = ReflectionUtils.StringToTypedValue(parmString, parameter.ParameterType); } // POST parameters are treated as methodParameters that are JSON encoded else if (paramType == CallbackMethodParameterType.Json) //string newVariable = methodParameters.GetValue(parmCounter) as string; adjustedParms[parmCounter] = serializer.Deserialize(Request.Params["parm" + (parmCounter + 1).ToString()], parameter.ParameterType); else adjustedParms[parmCounter] = SerializationUtils.DeSerializeObject( Request.Params["parm" + (parmCounter + 1).ToString()], parameter.ParameterType); } else if (paramType == CallbackMethodParameterType.Json) adjustedParms[parmCounter] = serializer.Deserialize(parameters[parmCounter], parameter.ParameterType); else adjustedParms[parmCounter] = SerializationUtils.DeSerializeObject(parameters[parmCounter], parameter.ParameterType); parmCounter++; } Result = MI.Invoke(target, adjustedParms); return Result; }

The code basically uses Reflection to loop through all the parameters available on the method and tries to assign the parameters from RouteData, QueryString or POST variables. The parameters are converted into their appropriate types and then used to eventually make a Reflection based method call.

What’s sweet is that the RouteData retrieval is just another option for dealing with the inbound data in this scenario and it adds exactly two lines of code plus the code to retrieve the MethodName I showed previously – a seriously low impact addition that adds a lot of extra value to this endpoint callback processing implementation.

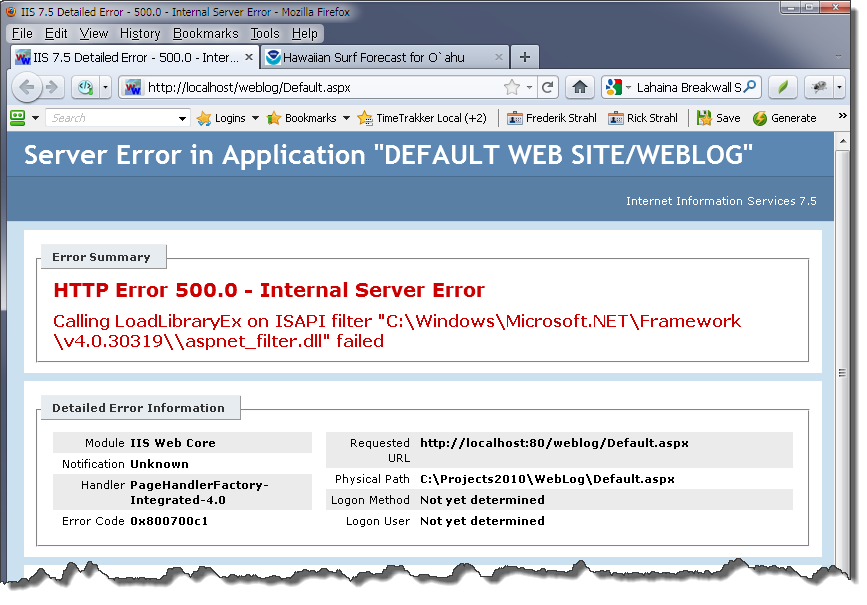

Debugging your Routes

If you create a lot of routes it’s easy to run into Route conflicts where multiple routes have the same path and overlap with each other. This can be difficult to debug especially if you are using automatically generated routes like the routes created by CallbackHandlerRouteHandler.RegisterRoutes.

Luckily there’s a tool that can help you out with this nicely. Phill Haack created a RouteDebugging tool you can download and add to your project. The easiest way to do this is to grab and add this to your project is to use NuGet (Add Library Package from your Project’s Reference Nodes):

which adds a RouteDebug assembly to your project.

Once installed you can easily debug your routes with this simple line of code which needs to be installed at application startup:

protected void Application_Start(object sender, EventArgs e) { CallbackHandlerRouteHandler.RegisterRoutes<StockService>(RouteTable.Routes); // Debug your routes RouteDebug.RouteDebugger.RewriteRoutesForTesting(RouteTable.Routes); }

Any routed URL then displays something like this:

The screen shows you your current route data and all the routes that are mapped along with a flag that displays which route was actually matched. This is useful – if you have any overlap of routes you will be able to see which routes are triggered – the first one in the sequence wins.

This tool has saved my ass on a few occasions – and with NuGet now it’s easy to add it to your project in a few seconds and then remove it when you’re done.

Routing Around

Custom routing seems slightly complicated on first blush due to its disconnected components of RouteHandler, route registration and mapping of custom handlers. But once you understand the relationship between a RouteHandler, the RouteData and how to pass it to a handler, utilizing of Routing becomes a lot easier as you can easily pass context from the registration to the RouteHandler and through to the HttpHandler. The most important thing to understand when building custom routing solutions is to figure out how to map URLs in such a way that the handler can figure out all the pieces it needs to process the request. This can be via URL routing parameters and as I did in my example by passing additional context information as part of the RouteHandler instance that provides the proper execution context. In my case this ‘context’ was the method name, but it could be an actual static value like an enum identifying an operation or category in an application. Basically user supplied data comes in through the url and static application internal data can be passed via RouteHandler property values.

Routing can make your application URLs easier to read by non-techie types regardless of whether you’re building Service type or REST applications, or full on Web interfaces. Routing in ASP.NET 4.0 makes it possible to create just about any extensionless URLs you can dream up and custom RouteHanmdler

References

- Sample Project

Includes the sample CallbackHandler service discussed here along with compiled versions

of the Westwind.Web and Westwind.Utilities assemblies. (requires .NET 4.0/VS 2010) - West Wind Web Toolkit

includes full implementation of CallbackHandler and the Routing Handler - West Wind Web Toolkit Source Code

Contains the full source code to the Westwind.Web and Westwind.Utilities assemblies used

in these samples. Includes the source described in the post.

(Latest build in the Subversion Repository) - CallbackHandler Source

(Relevant code to this article tree in Westwind.Web assembly) - JSONView FireFoxPlugin

A simple FireFox Plugin to easily view JSON data natively in FireFox.

For IE you can use a registry hack to display JSON as raw text.

![RoundedBoxInBrowser[6] RoundedBoxInBrowser[6]](http://www.west-wind.com/Weblog/images/200901/Windows-Live-Writer/Rounded-Corners-and-ShadowsDialogs-in-pu_E57D/RoundedBoxInBrowser%5B6%5D_thumb.png)

![RoundedInnerPokingOut[6] RoundedInnerPokingOut[6]](http://www.west-wind.com/Weblog/images/200901/Windows-Live-Writer/Rounded-Corners-and-ShadowsDialogs-in-pu_E57D/RoundedInnerPokingOut%5B6%5D_thumb.png)

![WorkOfflineMode[4] WorkOfflineMode[4]](http://www.west-wind.com/Weblog/images/200901/Windows-Live-Writer/WinInet-failing-with-Error-2cant-find-re_E0A6/WorkOfflineMode%5B4%5D_thumb.png)

![ScrollTable_NoGood[4] ScrollTable_NoGood[4]](http://www.west-wind.com/Weblog/images/200901/Windows-Live-Writer/A-Scrollable-Table-Plug-in_11AE/ScrollTable_NoGood%5B4%5D_thumb.png)

![PlainView[5] PlainView[5]](http://www.west-wind.com/Weblog/images/200901/Windows-Live-Writer/Show-raw-Text-Code-from-a-URL-with-C.NET_CF22/PlainView%5B5%5D_thumb.png)

![FormattedCode[4] FormattedCode[4]](http://www.west-wind.com/Weblog/images/200901/Windows-Live-Writer/Show-raw-Text-Code-from-a-URL-with-C.NET_CF22/FormattedCode%5B4%5D_thumb.png)