Microsoft recently released ASP.NET MVC 4.0 and .NET 4.5 and along with it, the brand spanking new ASP.NET Web API. Web API is an exciting new addition to the ASP.NET stack that provides a new, well-designed HTTP framework for creating REST and AJAX APIs (API is Microsoft’s new jargon for a service, in case you’re wondering). Although Web API ships and installs with ASP.NET MVC 4, you can use Web API functionality in any ASP.NET project, including WebForms, WebPages and MVC or just a Web API by itself. And you can also self-host Web API in your own applications from Console, Desktop or Service applications.

If you're interested in a high-level overview of what ASP.NET Web API is and how it fits into the ASP.NET stack, you can check out my previous post:

Where does ASP.NET Web API fit?

In this article, I'll focus on a practical, example-driven introduction to ASP.NET Web API. All the code discussed in this article is available on GitHub:

https://github.com/RickStrahl/AspNetWebApiArticle

[republished from my Code Magazine Article and updated for RTM release of ASP.NET Web API]

Getting Started

To start I’ll create a new empty ASP.NET application to demonstrate that Web API can work with any kind of ASP.NET project.

Although you can create a new project based on the ASP.NET MVC/Web API template to quickly get up and running, I’ll take you through the manual setup process, because one common use case is to add Web API functionality to an existing ASP.NET application. This process describes the steps needed to hook up Web API to any ASP.NET 4.0 application.

Start by creating an ASP.NET Empty Project. Then create a new folder in the project called Controllers.

Add a Web API Controller Class

Once you have any kind of ASP.NET project open, you can add a Web API Controller class to it. Web API Controllers are very similar to MVC Controller classes, but they work in any kind of project.

Use the Add New Item option in Visual Studio to add a new item to the Controllers folder, and choose Web API Controller Class, as shown in Figure 1.

![Figure 1 -AddNewItemDialog Figure 1 -AddNewItemDialog]()

Figure 1: This is how you create a new Controller Class in Visual Studio

Make sure that the name of the controller class includes Controller at the end of it, which is required in order for Web API routing to find it. Here, the name for the class is AlbumApiController.

For this example, I’ll use a Music Album model to demonstrate basic behavior of Web API. The model consists of albums and related songs: an album has properties like AlbumName, Artist and YearReleased plus a list of songs, and each song has a SongName and SongLength as well as an AlbumId that links it to the album. You can find the code for the model (and the rest of these samples) on GitHub. To add the file manually, create a new folder called Model, add a new class Album.cs and copy the code into it. There’s a static AlbumData class with a static CreateSampleAlbumData() method that creates a short list of albums in a static Current field, which I’ll use for the examples.

Before we look at what goes into the controller class though, let’s hook up routing so we can access this new controller.

Hooking up Routing in Global.asax

To start, I need to perform the one required configuration task in order for Web API to work: I need to configure routing to the controller. Like MVC, Web API uses routing to provide clean, extension-less URLs to controller methods. Using the MapHttpRoute() extension method (in the System.Web.Http namespace) on ASP.NET’s static RouteTable.Routes collection, you can hook up the routing during Application_Start in global.asax.cs, as shown in Listing 1.

```csharp
using System;
using System.Web.Routing;
using System.Web.Http;

namespace AspNetWebApi
{
    public class Global : System.Web.HttpApplication
    {
        protected void Application_Start(object sender, EventArgs e)
        {
            RouteTable.Routes.MapHttpRoute(
                name: "AlbumVerbs",
                routeTemplate: "albums/{title}",
                defaults: new
                {
                    // the default's name must match the {title} placeholder
                    title = RouteParameter.Optional,
                    controller = "AlbumApi"
                }
            );
        }
    }
}
```

This route configures Web API to direct URLs that start with an albums folder to the AlbumApiController class. Routing in ASP.NET is used to create extensionless URLs and allows you to map segments of the URL to specific Route Value parameters. A route parameter, with a name inside curly brackets like {name}, is mapped to parameters on the controller methods. Route parameters can be optional, and there are two special route parameters – controller and action – that determine the controller to call and the method to activate respectively.
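To make the interplay of templates and defaults concrete, here's a minimal JavaScript sketch of matching a path against a template like albums/{title}. It's not ASP.NET's routing engine: matchRoute and the OPTIONAL marker (standing in for RouteParameter.Optional) are hypothetical, and real routing also handles constraints, catch-all parameters and URL generation.

```javascript
// Stand-in for RouteParameter.Optional (hypothetical marker for this sketch).
const OPTIONAL = Symbol("optional");

// Match a request path against a route template such as "albums/{title}".
// Returns the route values (defaults plus captured segments) or null.
function matchRoute(template, defaults, path) {
  const templateSegs = template.split("/").filter(Boolean);
  const pathSegs = path.split("/").filter(Boolean);
  if (pathSegs.length > templateSegs.length) return null; // too many segments

  // Start with the non-optional defaults, e.g. controller = "AlbumApi".
  const values = {};
  for (const [key, value] of Object.entries(defaults)) {
    if (value !== OPTIONAL) values[key] = value;
  }

  for (let i = 0; i < templateSegs.length; i++) {
    const seg = templateSegs[i];
    const actual = pathSegs[i];
    const placeholder = /^\{(\w+)\}$/.exec(seg);
    if (placeholder) {
      const name = placeholder[1];
      if (actual !== undefined) {
        values[name] = decodeURIComponent(actual); // captured route value
      } else if (!(name in defaults)) {
        return null; // required placeholder with no value and no default
      }
    } else if (actual === undefined || actual.toLowerCase() !== seg.toLowerCase()) {
      return null; // literal segments must match, ignoring case
    }
  }
  return values;
}
```

Matching albums/Dirty%20Deeds with the defaults { title: OPTIONAL, controller: "AlbumApi" } yields { controller: "AlbumApi", title: "Dirty Deeds" }, while a plain albums yields only the controller value because title is optional.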

HTTP Verb Routing

Routing in Web API can route requests by HTTP Verb in addition to standard {controller},{action} routing. For the first examples, I use HTTP Verb routing, as shown in Listing 1. Notice that the route I’ve defined does not include an {action} route value or action value in the defaults. Instead, Web API uses the HTTP Verb of the request to determine which method to call on the controller: a GET request maps to any method that starts with Get, so methods called Get() or GetAlbums() are matched by a GET request, and a POST request maps to Post() or PostAlbum(). Among the methods that match the verb, Web API picks one by name and parameter signature, matching parameters against route values, query string values or POST data. If you don’t want to follow the verb naming conventions, you can use the [HttpGet], [HttpPost], [HttpPut], [HttpDelete] (and similar) attributes to designate the accepted verbs explicitly.

Although HTTP Verb routing is a good practice for REST style resource APIs, it’s not required and you can still use more traditional routes with an explicit {action} route parameter. When {action} is supplied, the HTTP verb routing is ignored. I’ll talk more about alternate routes later.
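The verb-based selection rule can be modeled in a few lines. This JavaScript sketch is a simplified illustration, not Web API's actual ApiControllerActionSelector: the method descriptors are hypothetical stand-ins for the controller methods in this article, and complex body parameters and [HttpGet]-style attributes aren't modeled.

```javascript
// Hypothetical descriptors for the simple-typed parameters of the
// AlbumApiController methods used in this article.
const albumApiMethods = [
  { name: "GetAlbums", params: [] },
  { name: "GetAlbum", params: ["title"] },
  { name: "DeleteAlbum", params: ["title"] },
];

// Choose a method for an HTTP verb: the name must start with the verb and every
// parameter must be supplied (by route values or the query string). Among the
// candidates, prefer the one that consumes the most supplied values.
function selectAction(methods, verb, suppliedNames) {
  const prefix = verb.toLowerCase();
  const supplied = new Set(suppliedNames);
  const candidates = methods
    .filter((m) => m.name.toLowerCase().startsWith(prefix))
    .filter((m) => m.params.every((p) => supplied.has(p)))
    .sort((a, b) => b.params.length - a.params.length);

  if (candidates.length === 0) return null; // nothing handles this verb
  if (candidates.length > 1 && candidates[1].params.length === candidates[0].params.length) {
    // Two methods with equivalent signatures: the verb alone can't pick one.
    throw new Error("Ambiguous: " + candidates.map((m) => m.name).join(", "));
  }
  return candidates[0].name;
}
```

With no title supplied, a GET resolves to GetAlbums(); with a title, GetAlbum(title) wins because it uses more of the supplied values. The ambiguity check mirrors the rule that methods for the same verb need distinguishable signatures.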

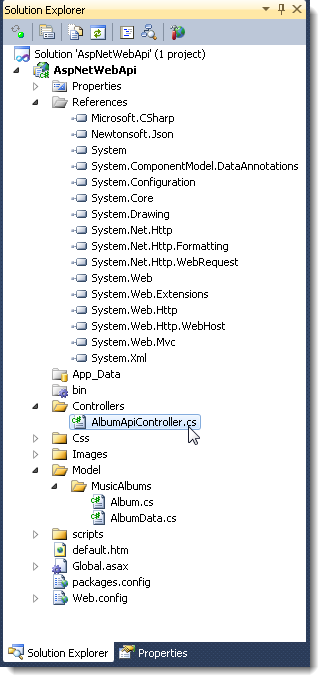

When you’re finished with initial creation of files, your project should look like Figure 2.

![InitialSolution InitialSolution]()

Figure 2: The initial project with the new API controller and the Album model

Creating a small Album Model

Now it’s time to create some controller methods to serve data. For these examples, I’ll use a very simple Album and Songs model to play with, as shown in Listing 2.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

public class Song
{
    public string AlbumId { get; set; }

    [Required, StringLength(80)]
    public string SongName { get; set; }

    [StringLength(5)]
    public string SongLength { get; set; }
}

public class Album
{
    public string Id { get; set; }

    [Required, StringLength(80)]
    public string AlbumName { get; set; }

    [StringLength(80)]
    public string Artist { get; set; }

    public int YearReleased { get; set; }
    public DateTime Entered { get; set; }

    [StringLength(150)]
    public string AlbumImageUrl { get; set; }

    [StringLength(200)]
    public string AmazonUrl { get; set; }

    public virtual List<Song> Songs { get; set; }

    public Album()
    {
        Songs = new List<Song>();
        Entered = DateTime.Now;

        // Poor man's unique Id off GUID hash
        Id = Guid.NewGuid().GetHashCode().ToString("x");
    }

    public void AddSong(string songName, string songLength = null)
    {
        this.Songs.Add(new Song()
        {
            AlbumId = this.Id,
            SongName = songName,
            SongLength = songLength
        });
    }
}
```

Along with the model, I added an AlbumData class that generates some sample data in memory and stores it in a static Current field. The signature of this class looks like this, and Current is what I'll access to retrieve the base data:

```csharp
public static class AlbumData
{
    // sample data - static list
    public static List<Album> Current = CreateSampleAlbumData();

    /// <summary>
    /// Create some sample data
    /// </summary>
    /// <returns></returns>
    public static List<Album> CreateSampleAlbumData() { … }
}
```

You can check out the full code for the data generation online.

Creating an AlbumApiController

Web API shares many concepts of ASP.NET MVC, and the implementation of your API logic is done by implementing a subclass of the System.Web.Http.ApiController class. Each public method in the implemented controller is a potential endpoint for the HTTP API, as long as a matching route can be found to invoke it. The class name you create should end in Controller, which is how Web API matches the controller route value to figure out which class to invoke.

Inside the controller you can implement methods that take standard .NET input parameters and return .NET values as results. Web API’s binding tries to match POST data, route values, form values or query string values to your parameters. Because the controller is configured for HTTP Verb based routing (no {action} parameter in the route), any methods that start with Getxxxx() are called by an HTTP GET operation. You can have multiple methods that match each HTTP Verb as long as the parameter signatures are different and can be matched by Web API.

In Listing 3, I create an AlbumApiController with two methods: one to retrieve a list of albums and one to retrieve a single album by its title.

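```csharp
using System.Collections.Generic;
using System.Linq;
using System.Web.Http;

public class AlbumApiController : ApiController
{
    // GET albums
    public IEnumerable<Album> GetAlbums()
    {
        var albums = AlbumData.Current.OrderBy(alb => alb.Artist);
        return albums;
    }

    // GET albums/{title}
    public Album GetAlbum(string title)
    {
        // FirstOrDefault rather than SingleOrDefault: a partial title can
        // match more than one album, which would make Single throw
        var album = AlbumData.Current
            .FirstOrDefault(alb => alb.AlbumName.Contains(title));
        return album;
    }
}
```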

To call these two methods, you can use the following URLs in your browser:

http://localhost/aspnetWebApi/albums

http://localhost/aspnetWebApi/albums/Dirty%20Deeds

Note that you’re not specifying the actions of GetAlbum or GetAlbums in these URLs. Instead Web API’s routing uses HTTP GET verb to route to these methods that start with Getxxx() with the first mapping to the parameterless GetAlbums() method and the latter to the GetAlbum(title) method that receives the title parameter mapped as optional in the route.

Content Negotiation

When you access any of the URLs above from a browser, you get either an XML or a JSON result back. The album list result for Chrome 17 and Internet Explorer 9 is shown in Figure 3.

![Figure 3 - StockQuotesOutput Figure 3 - StockQuotesOutput]()

Figure 3: Web API responses can vary depending on the browser used, demonstrating Content Negotiation in action as these two browsers send different HTTP Accept headers.

Notice that the results are not the same: Chrome returns an XML response and IE9 returns a JSON response. Whoa, what’s going on here? Shouldn’t we see the same result in both browsers?

Actually, no. Web API determines what type of content to return based on Accept headers. HTTP clients, like browsers, use Accept headers to specify what kind of content they’d like to see returned. Browsers generally ask for HTML first, followed by a few additional content types. Chrome (like most other major browsers) asks for:

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

IE9 asks for:

Accept: text/html, application/xhtml+xml, */*

Note that Chrome’s Accept header includes application/xml, which Web API finds in its list of supported media types, so it returns an XML response. IE9’s Accept header contains no specific media type that Web API supports by default; only the */* wildcard matches, so Web API returns its default format, which is JSON.
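Here's a simplified JavaScript sketch of the ranking that produces those two results. The SUPPORTED list and its order are assumptions standing in for the installed formatters (JSON first, as the default); Web API's own DefaultContentNegotiator considers more factors than q-values, so treat this as an illustration only.

```javascript
// Assumed media types the server can produce, in priority order (JSON is the default).
const SUPPORTED = ["application/json", "text/json", "application/xml", "text/xml"];

// Parse "type;q=0.9, other" into [{ type, q, index }], dropping empty or q=0 entries.
function parseAccept(header) {
  return header
    .split(",")
    .map((part, index) => {
      const [type, ...params] = part.trim().split(";");
      let q = 1;
      for (const p of params) {
        const [key, value] = p.trim().split("=");
        if (key === "q") q = parseFloat(value);
      }
      return { type: type.trim().toLowerCase(), q, index };
    })
    .filter((entry) => entry.type.length > 0 && entry.q > 0);
}

// Pick the response media type: highest q wins, ties keep header order.
function negotiate(acceptHeader, supported = SUPPORTED) {
  const ranked = parseAccept(acceptHeader).sort((a, b) => b.q - a.q || a.index - b.index);
  for (const { type } of ranked) {
    if (type === "*/*") return supported[0]; // wildcard: use the default formatter
    if (type.endsWith("/*")) {
      const prefix = type.slice(0, -1); // e.g. "text/"
      const match = supported.find((s) => s.startsWith(prefix));
      if (match) return match;
      continue;
    }
    if (supported.includes(type)) return type;
  }
  return supported[0]; // nothing matched: fall back to the default
}
```

For Chrome's header, text/html and application/xhtml+xml aren't supported, so application/xml (q=0.9) wins before */* (q=0.8) is reached; IE9's header only reaches a match at */*, which yields the JSON default.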

This is an important and very useful feature that was missing from any previous Microsoft REST tools: Web API automatically switches output formats based on HTTP Accept headers. Nowhere in the server code above do you have to explicitly specify the output format. Rather, Web API determines what format the client is requesting based on the Accept headers and automatically returns the result based on the available formatters. This means that a single method can handle both XML and JSON results.

Using this simple approach makes it very easy to create a single controller method that can return JSON, XML, ATOM or even OData feeds, simply by providing the appropriate Accept header from the client. JSON and XML formatters are installed by default; other formats need an additional formatter. By default you don’t have to worry about the output format in your code.

Note that you can still specify an explicit output format if you choose, either globally by overriding the installed formatters, or individually by returning a lower level HttpResponseMessage instance and setting the formatter explicitly. More on that in a minute.

Along the same lines, any content sent to the server via POST/PUT is parsed by Web API based on the HTTP Content-Type header of the request. The same formats allowed for output are also allowed on input. Again, you don’t have to do anything in your code – Web API automatically performs the deserialization from the content.
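The input side can be pictured as a dispatch on the request's Content-Type. This JavaScript sketch handles only JSON and URL-encoded forms (XML is left out to stay dependency-free); readBody is a hypothetical helper, not part of Web API, and the 415 status mirrors how a server typically rejects a media type it can't read.

```javascript
// Deserialize a request body according to its Content-Type header.
function readBody(contentType, rawBody) {
  // Ignore parameters such as "; charset=utf-8" and compare case-insensitively.
  const mediaType = (contentType || "").split(";")[0].trim().toLowerCase();

  switch (mediaType) {
    case "application/json":
    case "text/json":
      return JSON.parse(rawBody); // malformed JSON throws here
    case "application/x-www-form-urlencoded":
      // "+" and %XX escapes are decoded; every value stays a string
      return Object.fromEntries(new URLSearchParams(rawBody));
    default: {
      const err = new Error("Unsupported Media Type: " + mediaType);
      err.status = 415;
      throw err;
    }
  }
}
```

Note that the URL-encoded branch yields strings only; converting "1976" into an int property is the model binder's job on the server.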

Accessing Web API JSON Data with jQuery

A very common scenario for Web API endpoints is to retrieve data for AJAX calls from the Web browser. Because JSON is the default format for Web API, it’s easy to access data from the server using jQuery and its getJSON() method. This example receives the albums array from GetAlbums() and databinds it into the page using knockout.js.

```javascript
$.getJSON("albums/", function (albums) {
    // make knockout template visible
    $(".album").show();

    // create view object and attach array
    var view = { albums: albums };
    ko.applyBindings(view);
});
```

Figure 4 shows this and the next example’s HTML output. You can check out the complete HTML and script code at http://goo.gl/Ix33C (.html) and http://goo.gl/tETlg (.js).


![Figure 4 -GetAlbumSample Figure 4 -GetAlbumSample]()

Figure 4: The Album Display sample uses JSON data loaded from Web API.

The getJSON() callback receives the server result already parsed into a JavaScript value, in this case an array of albums. In the code, I use knockout.js to bind this array into the UI, which, as you can see, requires very little code because knockout’s data-bind attributes in the HTML do the work of binding server data to the UI. Of course, this is just one way to use the data – it’s entirely up to you to decide what to do with the data in your client code.

Along the same lines, I can retrieve a single album to display when the user clicks on an album. The response returns the album information and a child array with all the songs. The code to do this is very similar to the last example where we pulled the albums array:

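```javascript
// delegated click handler (.on() supersedes the deprecated .live())
$(document).on("click", ".albumlink", function () {
    var id = $(this).data("id"); // title

    // encode the title so spaces and other characters are valid in the URL
    $.getJSON("albums/" + encodeURIComponent(id), function (album) {
        ko.applyBindings(album, $("#divAlbumDialog")[0]);
        $("#divAlbumDialog").show();
    });
});
```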

Here the URL looks like this: /albums/Dirty%20Deeds, where the title is the ID read from the clicked element’s data-id attribute.

Explicitly Overriding Output Format

When Web API automatically converts output using content negotiation, it does so by matching Accept header media types to the GlobalConfiguration.Configuration.Formatters and the SupportedMediaTypes of each individual formatter. You can add and remove formatters to globally affect what formats are available, and it’s easy to create and plug in custom formatters. The example project includes a JSONP formatter that can be plugged in to provide JSONP support for requests that have a callback= querystring parameter. Adding, removing or replacing formatters is a global option you can use to manipulate content. It’s beyond the scope of this introduction to show how it works, but you can review the sample code or check out my blog entry on the subject (http://goo.gl/UAzaR).
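The core of what a JSONP formatter does is small. Here's a JavaScript sketch of the idea, not the sample project's formatter: formatWithJsonp and the callback-name pattern are assumptions. The name is validated because it comes straight from the query string and ends up executed as script.

```javascript
// Only allow plain identifiers or dotted paths (e.g. "app.onAlbums") as callback
// names, so the query string can't inject arbitrary script into the response.
const CALLBACK_PATTERN = /^[A-Za-z_$][\w$]*(\.[A-Za-z_$][\w$]*)*$/;

// Returns { contentType, body } for a request URL and a result value.
function formatWithJsonp(requestUrl, value) {
  const url = new URL(requestUrl, "http://localhost"); // base resolves relative URLs
  const callback = url.searchParams.get("callback");
  const json = JSON.stringify(value);

  if (callback && CALLBACK_PATTERN.test(callback)) {
    // JSONP: wrap the JSON in a call to the client-supplied function
    return { contentType: "application/javascript", body: `${callback}(${json});` };
  }
  // No (valid) callback: plain JSON
  return { contentType: "application/json", body: json };
}
```

A request for /albums?callback=showAlbums comes back as showAlbums([...]); with a content type the browser will execute, while the same URL without the parameter gets plain JSON.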

If automatic processing is not desirable in a particular Controller method, you can override the response output explicitly by returning an HttpResponseMessage instance. HttpResponseMessage is similar to ActionResult in ASP.NET MVC in that it’s a common way to return an abstract result message that contains content. HttpResponseMessage is parsed by the Web API framework using standard interfaces to retrieve the response data, status code, headers and so on. Web API turns every response, including those from Controller methods that return plain .NET values, into HttpResponseMessage instances. Explicitly returning an HttpResponseMessage instance gives you full control over the output and lets you mostly bypass Web API’s post-processing of the HTTP response on your behalf.

HttpResponseMessage allows you to customize the response in great detail. Web API’s attention to detail in the HTTP spec really shows; many HTTP options are exposed as properties and enumerations with detailed IntelliSense comments. Even if you’re new to building REST-based interfaces, the API guides you in the right direction for returning valid responses and response codes.

For example, assume that I always want to return JSON from the GetAlbums() controller method and ignore the default media type content negotiation. To do this, I can adjust the output format and headers as shown in Listing 4.

```csharp
// requires: using System.Collections.Generic; using System.Linq; using System.Net;
//           using System.Net.Http; using System.Net.Http.Formatting; using System.Net.Http.Headers;
public HttpResponseMessage GetAlbums()
{
    var albums = AlbumData.Current.OrderBy(alb => alb.Artist);

    // Create a new HttpResponse with Json Formatter explicitly
    var resp = new HttpResponseMessage(HttpStatusCode.OK);
    resp.Content = new ObjectContent<IEnumerable<Album>>(
        albums, new JsonMediaTypeFormatter());

    // Get Default Formatter based on Content Negotiation
    //var resp = Request.CreateResponse<IEnumerable<Album>>(HttpStatusCode.OK, albums);

    resp.Headers.ConnectionClose = true;
    resp.Headers.CacheControl = new CacheControlHeaderValue();
    resp.Headers.CacheControl.Public = true;

    return resp;
}
```

This example returns the same IEnumerable<Album> value, but it wraps the response into an HttpResponseMessage so you can control the entire HTTP message result including the headers, formatter and status code. In Listing 4, I explicitly specify the formatter using the JsonMediaTypeFormatter to always force the content to JSON.

If you prefer to use the default content negotiation with HttpResponseMessage results, you can create the Response instance using the Request.CreateResponse method:

```csharp
var resp = Request.CreateResponse<IEnumerable<Album>>(HttpStatusCode.OK, albums);
```

This gives you an HttpResponseMessage object that's pre-configured with the default formatter based on Content Negotiation.

Once you have an HttpResponseMessage object, you can easily control most HTTP aspects on it. What's sweet here is that HttpResponseMessage has many more detailed properties than the core ASP.NET Response object, with most options being explicitly configurable via enumerations that make it easy to pick the right headers and response codes from a list of valid values. It makes the available HTTP features much more discoverable, even for non-hardcore REST/HTTP geeks.

Non-Serialized Results

The output returned doesn’t have to be a serialized value; it can also be raw data like strings, binary data or streams. You can assign one of several common HttpContent classes to the HttpResponseMessage.Content property. Listing 5 shows how to return a binary image using the ByteArrayContent class from a Controller method.

```csharp
// requires: using System.Linq; using System.Net; using System.Net.Http;
//           using System.Net.Http.Headers; using System.Web.Http;
[HttpGet]
public HttpResponseMessage AlbumArt(string title)
{
    var album = AlbumData.Current.FirstOrDefault(abl => abl.AlbumName.StartsWith(title));
    if (album == null)
    {
        var resp = Request.CreateResponse<ApiMessageError>(
            HttpStatusCode.NotFound,
            new ApiMessageError("Album not found"));
        return resp;
    }

    // kinda silly - we would normally serve this directly
    // but hey - it's a demo.
    byte[] imageData;
    using (var http = new WebClient())   // WebClient is IDisposable
    {
        imageData = http.DownloadData(album.AlbumImageUrl);
    }

    // create response and return
    var result = new HttpResponseMessage(HttpStatusCode.OK);
    result.Content = new ByteArrayContent(imageData);
    result.Content.Headers.ContentType = new MediaTypeHeaderValue("image/jpeg");
    return result;
}
```

The image retrieval from Amazon is contrived, but it shows how to return binary data using ByteArrayContent. It also demonstrates that you can easily return multiple types of content from a single controller method, which is actually quite common. If an error occurs, such as a resource that can’t be found or a validation error, you can return an error response to the client that’s very specific to the error. In AlbumArt(), if the album can’t be found, we want to return a 404 Not Found status (although realistically a client displaying an image won’t show an error message anyway).

Note that if you are not using HTTP Verb-based routing or not accessing a method that starts with Get/Post etc., you have to specify one or more HTTP Verb attributes on the method explicitly. Here, I used the [HttpGet] attribute to serve the image. Another option to handle the error could be to return a fixed placeholder image if no album could be matched or the album doesn’t have an image.

When returning an error code, you can also return a strongly typed response to the client. For example, you can set the 404 status code and also return a custom error object (ApiMessageError is a class I defined) like this:

```csharp
return Request.CreateResponse<ApiMessageError>(
    HttpStatusCode.NotFound,
    new ApiMessageError("Album not found")
);
```

If the album can be found, the image will be returned. The image is downloaded into a byte[] array, and then assigned to the result’s Content property. I created a new ByteArrayContent instance and assigned the image’s bytes and the content type so that it displays properly in the browser.

There are other content classes available: StringContent, StreamContent, ByteArrayContent, MultipartContent and ObjectContent are at your disposal to return just about any kind of content. You can also create your own HttpContent classes if you frequently return custom types and want to handle the default formatter assignments used to send that data out in one place.

Although HttpResponseMessage results require more code than returning a plain .NET value from a method, it allows much more control over the actual HTTP processing than automatic processing. It also makes it much easier to test your controller methods as you get a response object that you can check for specific status codes and output messages rather than just a result value.

Routing Again

Ok, let’s get back to the image example. With the original HTTP Verb routing we set up, there's no good way to serve the image. In order to return my album art image I’d like to use a URL like this:

http://localhost/aspnetWebApi/albums/Dirty%20Deeds/image

In order to create a URL like this, I have to create a new Controller, because my earlier routes pointed to the AlbumApiController using HTTP Verb routing. HTTP Verb based routing is great for representing a single set of resources such as albums. You can map operations like add, delete, update and read easily using HTTP Verbs. But you cannot mix action based routing into an HTTP Verb routing controller: you can only map HTTP Verbs, and each method has to be unique based on parameter signature. You can't have multiple GET operations to methods with the same signature. So GetImage(string id) and GetAlbum(string title) are in conflict in an HTTP GET routing scenario.

In fact, I was unable to make the above Image URL work with any combination of HTTP Verb plus Custom routing using the single Albums controller.

There are a number of ways around this, but all involve additional controllers. Personally, I think it’s easier to use explicit Action routing and then add custom routes if you need to simplify your URLs further. So in order to accommodate some of the other examples, I created another controller – AlbumRpcApiController – to handle all requests that are explicitly routed via actions (/albums/rpc/AlbumArt) or are custom routed with explicit routes defined in the HttpConfiguration. I added the AlbumArt() method to this new AlbumRpcApiController class.

For the image URL to work with the new AlbumRpcApiController, you need a custom route placed before the default route from Listing 1.

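```csharp
// Action route: albums/rpc/{action}/{title}
RouteTable.Routes.MapHttpRoute(
    name: "AlbumRpcApiAction",
    routeTemplate: "albums/rpc/{action}/{title}",
    defaults: new
    {
        title = RouteParameter.Optional,
        controller = "AlbumRpcApi",
        action = "GetAlbums"
    }
);

// Custom route: albums/{title}/image maps straight to the AlbumArt action
// (the route name here is illustrative)
RouteTable.Routes.MapHttpRoute(
    name: "AlbumRpcApiImage",
    routeTemplate: "albums/{title}/image",
    defaults: new { controller = "AlbumRpcApi", action = "AlbumArt" }
);
```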

Now I can use either of the following URLs to access the image:

Custom route: (/albums/{title}/image)

http://localhost/aspnetWebApi/albums/PowerAge/image

Action route: (/albums/rpc/{action}/{title})

http://localhost/aspnetWebAPI/albums/rpc/albumart/PowerAge
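Why does the placement of the route matter? Routes are evaluated in registration order and the first match wins, so a URL like albums/rpc would otherwise be captured by albums/{title} with title set to "rpc". This JavaScript sketch illustrates first-match-wins; it simplifies by treating every placeholder as optional and ignoring constraints, and matchTemplate and resolve are hypothetical helpers.

```javascript
// Simplified matcher: every {placeholder} is optional here, and literal
// segments must match ignoring case.
function matchTemplate(template, path) {
  const t = template.split("/").filter(Boolean);
  const p = path.split("/").filter(Boolean);
  if (p.length > t.length) return null;
  const values = {};
  for (let i = 0; i < t.length; i++) {
    const placeholder = /^\{(\w+)\}$/.exec(t[i]);
    if (placeholder) {
      if (p[i] !== undefined) values[placeholder[1]] = decodeURIComponent(p[i]);
    } else if (p[i] === undefined || p[i].toLowerCase() !== t[i].toLowerCase()) {
      return null;
    }
  }
  return values;
}

// Walk the table in registration order; the first matching route wins and
// captured values override the route's defaults.
function resolve(routes, path) {
  for (const route of routes) {
    const values = matchTemplate(route.template, path);
    if (values) return { name: route.name, ...route.defaults, ...values };
  }
  return null;
}

// Registration order mirrors the article: the rpc route before "albums/{title}".
const routes = [
  {
    name: "AlbumRpcApiAction",
    template: "albums/rpc/{action}/{title}",
    defaults: { controller: "AlbumRpcApi", action: "GetAlbums" },
  },
  { name: "AlbumVerbs", template: "albums/{title}", defaults: { controller: "AlbumApi" } },
];
```

With this order, albums/rpc resolves to the AlbumRpcApi controller's default GetAlbums action; swap the two entries and the same URL is captured by AlbumVerbs with title set to "rpc".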

Sending Data to the Server

To send data to the server and add a new album, you can use an HTTP POST operation. Since I’m using HTTP Verb-based routing in the original AlbumApiController, I can implement a method called PostAlbum() to accept a new album from the client. Listing 6 shows the Web API code to add a new album.

```csharp
// requires: using System.Linq; using System.Net; using System.Net.Http; using System.Text;
public HttpResponseMessage PostAlbum(Album album)
{
    if (!this.ModelState.IsValid)
    {
        // my custom error class
        var error = new ApiMessageError() { message = "Model is invalid" };

        // add errors into our client error model for client
        foreach (var prop in ModelState.Values)
        {
            var modelError = prop.Errors.FirstOrDefault();
            if (modelError == null)
                continue;   // this entry has no errors

            if (!string.IsNullOrEmpty(modelError.ErrorMessage))
                error.errors.Add(modelError.ErrorMessage);
            else if (modelError.Exception != null)
                error.errors.Add(modelError.Exception.Message);
        }

        return Request.CreateResponse<ApiMessageError>(HttpStatusCode.Conflict, error);
    }

    // update song id which isn't provided
    foreach (var song in album.Songs)
        song.AlbumId = album.Id;

    // see if album exists already (by Id or by name)
    int index = AlbumData.Current
        .FindIndex(alb => alb.Id == album.Id || alb.AlbumName == album.AlbumName);

    if (index < 0)
        AlbumData.Current.Add(album);
    else
        AlbumData.Current[index] = album;   // replace the stored instance in the list

    // return a string to show that the value got here
    var resp = Request.CreateResponse(HttpStatusCode.OK, string.Empty);
    resp.Content = new StringContent(album.AlbumName + " " + album.Entered.ToString(),
                                     Encoding.UTF8, "text/plain");
    return resp;
}
```

The PostAlbum() method receives an album parameter, which is automatically deserialized from the POST buffer the client sent. The data passed from the client can be either XML or JSON. Web API automatically figures out what format it needs to deserialize based on the content type and binds the content to the album object. Web API uses model binding to bind the request content to the parameter(s) of controller methods.

Like MVC, you can check the model by looking at ModelState.IsValid. If it’s not valid, you can run through the ModelState.Values collection and check each binding for errors. Here I collect the error messages into a list that gets passed back to the client via the ApiMessageError result object.

When a binding error occurs, you’ll want to return an HTTP error response and it’s best to do that with an HttpResponseMessage result. In Listing 6, I used a custom error class that holds a message and an array of detailed error messages for each binding error. I used this object as the content to return to the client along with my Conflict HTTP Status Code response.
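To see what that validation amounts to for this model, here's a JavaScript sketch that applies the same [Required] and [StringLength] rules from the Album and Song classes and returns an object shaped like ApiMessageError ({ message, errors }). Something like it could also pre-validate on the client before POSTing. It's an illustration only: validateAlbum, checkRules and the message texts are hypothetical, and the authoritative check still runs server-side through the data annotation attributes.

```javascript
// Rules copied from the data annotations on the Album and Song classes.
const albumRules = {
  AlbumName: { required: true, maxLength: 80 },
  Artist: { maxLength: 80 },
  AlbumImageUrl: { maxLength: 150 },
  AmazonUrl: { maxLength: 200 },
};
const songRules = {
  SongName: { required: true, maxLength: 80 },
  SongLength: { maxLength: 5 },
};

// Append a message to `errors` for every rule the object violates.
function checkRules(obj, rules, prefix, errors) {
  for (const [field, rule] of Object.entries(rules)) {
    const value = obj[field];
    const text = value === undefined || value === null ? "" : String(value);
    if (rule.required && text.trim() === "") {
      errors.push(`${prefix}${field} is required.`); // whitespace-only counts as missing
    } else if (rule.maxLength !== undefined && text.length > rule.maxLength) {
      errors.push(`${prefix}${field} must be at most ${rule.maxLength} characters.`);
    }
  }
}

// Returns null when valid, otherwise { message, errors } like ApiMessageError.
function validateAlbum(album) {
  const errors = [];
  checkRules(album, albumRules, "", errors);
  (album.Songs || []).forEach((song, i) => checkRules(song, songRules, `Songs[${i}].`, errors));
  return errors.length ? { message: "Model is invalid", errors } : null;
}
```

Because the result has the same message/errors shape, client code that reads .message from an error response can treat a local validation failure the same way.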

If binding succeeds, the example returns a string with the name and date entered to demonstrate that you captured the data. Normally, a method like this should return a Boolean or no response at all (HttpStatusCode.NoContent).

The sample uses a simple static list to hold albums, so once you’ve added the album using the Post operation, you can hit the /albums/ URL to see that the new album was added.

The client-side jQuery code that calls the POST operation is shown in Listing 7.

```javascript
var id = new Date().getTime().toString();
var album = {
    "Id": id,
    "AlbumName": "Power Age",
    "Artist": "AC/DC",
    "YearReleased": 1977,
    "Entered": "2002-03-11T18:24:43.5580794-10:00",
    "AlbumImageUrl": "http://ecx.images-amazon.com/images/…",
    "AmazonUrl": "http://www.amazon.com/…",
    "Songs": [
        { "SongName": "Rock 'n Roll Damnation", "SongLength": 3.12 },
        { "SongName": "Downpayment Blues", "SongLength": 4.22 },
        { "SongName": "Riff Raff", "SongLength": 2.42 }
    ]
};

$.ajax({
    url: "albums/",
    type: "POST",
    contentType: "application/json",
    data: JSON.stringify(album),
    processData: false,
    beforeSend: function (xhr) {
        // not required since JSON is default output
        xhr.setRequestHeader("Accept", "application/json");
    },
    success: function (result) {
        // reload list of albums
        page.loadAlbums();
    },
    error: function (xhr, status, p3, p4) {
        var err = "Error";
        if (xhr.responseText && xhr.responseText[0] == "{")
            err = JSON.parse(xhr.responseText).message;
        alert(err);
    }
});
```

The code in Listing 7 creates an album object in JavaScript to match the structure of the .NET Album class. This object is passed to the $.ajax() function to send to the server as a POST. The data is turned into JSON and the content type is set to application/json so that the server knows how to deserialize it into the Album instance.

The jQuery code hooks up success and failure handlers. On success, the handler receives the result data (the string echoed back by the server) and simply reloads the album list. If an error occurs, jQuery passes the XHR instance and status. You can check the XHR’s responseText to see if a JSON object is embedded and, if it is, deserialize it and access its .message property.

REST standards suggest that updates to existing resources should use PUT operations. REST standards aside, I’m not a big fan of separating out inserts and updates so I tend to have a single method that handles both.

But if you want to follow REST suggestions, you can create a PUT method that handles updates by forwarding the PUT operation to the POST method:

```csharp
public HttpResponseMessage PutAlbum(Album album)
{
    return PostAlbum(album);
}
```

To make the corresponding $.ajax() call, all you have to change from Listing 7 is the type: from POST to PUT.

Model Binding with UrlEncoded POST Variables

In the example in Listing 7, I used JSON objects to post a serialized object to a server method that accepted a strongly typed object with the same structure, which is a common way to send data to the server. However, Web API supports a number of different ways that data can be received by server methods.

For example, another common way is to send plain UrlEncoded POST values to the server. Web API supports Model Binding that works similarly to (but not exactly like) MVC's model binding, where POST variables are mapped to properties of object parameters of the target method. This is actually quite common for AJAX calls that want to avoid serialization and the potential requirement of a JSON parser on older browsers.

For example, using jQuery you might use the $.post() method to send a new album to the server (albeit one without songs) using code like the following:

```javascript
$.post("albums/", { AlbumName: "Dirty Deeds", YearReleased: 1976 … }, albumPostCallback);
```

Although the code looks very similar to the client code we used before to pass JSON, here the data passed is URL-encoded values (AlbumName=Dirty+Deeds&YearReleased=1976 etc.). Web API then takes this POST data and maps each of the POST values to the properties of the Album object in the method's parameter. So although the client code differs, the same server method can handle either the JSON object or the UrlEncoded POST values.
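Both halves of that exchange fit in a short JavaScript sketch. URLSearchParams produces the same kind of body $.post() sends for a plain object, and bindForm is a hypothetical, much-simplified stand-in for model binding: it infers each property's type from a template object's default values, whereas Web API's binder uses the target property's .NET type.

```javascript
// Client side: encode a plain object as application/x-www-form-urlencoded.
function toFormBody(values) {
  return new URLSearchParams(values).toString(); // spaces become "+"
}

// Server side (sketch): copy form values onto a copy of a typed template,
// converting to numbers where the template's default is a number.
// Keys the template doesn't know about are ignored.
function bindForm(body, template) {
  const form = new URLSearchParams(body);
  const result = { ...template };
  for (const [key, raw] of form) {
    if (!(key in template)) continue;
    if (typeof template[key] === "number") {
      const n = Number(raw);
      result[key] = Number.isNaN(n) ? template[key] : n; // keep default on bad input
    } else {
      result[key] = raw;
    }
  }
  return result;
}
```

Encoding { AlbumName: "Dirty Deeds", YearReleased: 1976 } gives AlbumName=Dirty+Deeds&YearReleased=1976, and binding that body against { AlbumName: "", Artist: "", YearReleased: 0 } restores YearReleased as the number 1976.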

Dynamic Access to POST Data

There are also a few options available to dynamically access POST data, if you know what type of data you're dealing with.

If you have POST UrlEncoded values, you can access them dynamically using a FormDataCollection:

```csharp
// requires: using System.Net.Http.Formatting;  (FormDataCollection)
[HttpPost]
public string PostAlbum(FormDataCollection form)
{
    return string.Format("{0} - released {1}",
        form.Get("AlbumName"), form.Get("YearReleased"));
}
```

The FormDataCollection is a very simple object that essentially provides the same functionality as Request.Form[] in ASP.NET. Request.Form[] still works if you're running hosted in an ASP.NET application. However, as a general rule, while ASP.NET's functionality is always available when running Web API hosted inside of an ASP.NET application, using the built-in classes specific to Web API makes it possible to run Web API applications in a self-hosted environment outside of ASP.NET.

If your client is sending JSON to your server, and you don't want to map the JSON to a strongly typed object because you only want to retrieve a few simple values, you can also accept a JObject parameter in your API methods:

[HttpPost]
public string PostAlbum(JObject jsonData)
{
    // JObject comes from Json.NET (Newtonsoft.Json.Linq)
    dynamic json = jsonData;
    JObject jalbum = json.Album;
    JObject juser = json.User;
    string token = json.UserToken;
    // convert the sub-objects into strongly typed instances
    var album = jalbum.ToObject<Album>();
    var user = juser.ToObject<User>();
    return String.Format("{0} {1} {2}", album.AlbumName, user.Name, token);
}
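On the client, the JObject version expects a composite JSON object with Album, User and UserToken properties. Below is a minimal sketch of the matching request. It assumes jQuery's $.ajax() settings (url, type, contentType, data). The helper name, the sample user and the token value are hypothetical.

```javascript
// Build $.ajax()-style settings for posting a composite JSON object whose
// top-level properties match what PostAlbum(JObject) reads.
function buildAlbumJsonRequest(album, user, userToken) {
  return {
    url: "albums/",
    type: "POST",
    // the JSON content type lets Web API pick its JSON formatter to read the body
    contentType: "application/json",
    data: JSON.stringify({ Album: album, User: user, UserToken: userToken }),
  };
}

const settings = buildAlbumJsonRequest(
  { AlbumName: "Dirty Deeds", YearReleased: 1976 },
  { Name: "Rick" },
  "abc123");
console.log(settings.data);
// in the browser: $.ajax(settings).done(albumPostCallback);
```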

There are quite a few options available to receive data with Web API, which gives you more choices to pick the right tool for the job.

Unfortunately, one shortcoming of Web API is that POST data is always mapped to a single parameter. This means you can't pass multiple POST parameters to methods that receive POST data. A method can accept multiple parameters, but only one of them can map to the POST content; the others have to come from the query string or route values.
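A common way to live with the single-body-parameter rule is to keep the complex object in the body and move simple values into the URL. The sketch below assumes a hypothetical server method PostAlbum(Album album, string userToken). By default Web API binds complex types like Album from the body and simple types like string from the URI, so userToken travels in the query string. The JavaScript helper and the parameter name are illustrative only.

```javascript
// Hypothetical server signature: PostAlbum(Album album, string userToken)
// album binds from the JSON body; userToken is read from the query string.
function buildAlbumPostWithToken(album, userToken) {
  const query = new URLSearchParams({ userToken: userToken }).toString();
  return {
    url: "albums/?" + query,
    type: "POST",
    contentType: "application/json",
    data: JSON.stringify(album), // the single body-bound parameter
  };
}

console.log(buildAlbumPostWithToken({ AlbumName: "Dirty Deeds" }, "abc123").url);
```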

I have a couple of blog posts that explain what works and what doesn't here:

Handling Delete Operations

Finally, to round out the server API code of the album example we've been discussing, here’s the DELETE verb controller method that allows removal of an album by its title:

public HttpResponseMessage DeleteAlbum(string title)
{
    var matchedAlbum = AlbumData.Current.Where(alb => alb.AlbumName == title)
                                        .SingleOrDefault();
    if (matchedAlbum == null)
        return new HttpResponseMessage(HttpStatusCode.NotFound);

    AlbumData.Current.Remove(matchedAlbum);

    return new HttpResponseMessage(HttpStatusCode.NoContent);
}

To call this action method using jQuery, you can use:

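// .live() was removed in jQuery 1.9; a delegated .on() handler replaces it
$(document).on("click", ".removeimage", function () {
    var $el = $(this).parent(".album");
    var txt = $el.find("a").text();

    $.ajax({
        url: "albums/" + encodeURIComponent(txt),
        type: "DELETE",
        success: function (result) {
            // remove the element only after the fade-out has completed
            $el.fadeOut(function () { $el.remove(); });
        },
        error: jqError
    });
});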

Note the use of the DELETE verb in the $.ajax() call, which routes to DeleteAlbum on the server. DELETE requests typically carry no content, so you identify the resource (the title) via a route value or the query string.
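The same request can be factored into small helpers that are easy to test outside the browser. This is a sketch with hypothetical helper names. The status codes mirror what DeleteAlbum() returns above: 204 No Content on success and 404 Not Found when no album matches.

```javascript
// Build the DELETE request for an album. The title is the resource id,
// so it goes into the URL path and must be percent-encoded.
function buildDeleteRequest(title) {
  return {
    url: "albums/" + encodeURIComponent(title),
    type: "DELETE",
  };
}

// Interpret the status codes that DeleteAlbum() returns.
function describeDeleteStatus(status) {
  if (status === 204) return "deleted";    // HttpStatusCode.NoContent
  if (status === 404) return "not found";  // HttpStatusCode.NotFound
  return "unexpected status " + status;
}

console.log(buildDeleteRequest("Back in Black").url); // albums/Back%20in%20Black
```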

Routing Conflicts

In all requests shown so far, with the exception of the AlbumArt image example, I used the HTTP Verb routing that I set up in Listing 1. HTTP Verb routing is a recommendation that is in line with typical REST access to HTTP resources. However, it takes quite a bit of effort to create REST-compliant API implementations based on HTTP Verb routing alone. You saw one example that didn’t really fit: the return of an image, where I created a custom route albums/{title}/image that required a second controller and a custom route to work. HTTP Verb routing to a controller does not mix with custom or action routing to the same controller because of the limited mapping of HTTP verbs imposed by HTTP Verb routing.

To understand some of the problems with verb routing, let’s look at another example. Let’s say you create a GetSortableAlbums() method like this and add it to the original AlbumApiController accessed via HTTP Verb routing:

[HttpGet]
public IQueryable<Album> GetSortableAlbums()
{
    var albums = AlbumData.Current;
    // generally should be done only on actual queryable results (EF etc.)
    // Done here because we're running with a static list but otherwise might be slow
    return albums.AsQueryable();
}

If you compile this code and now try to access the /albums/ link, you get an error: Multiple actions were found that match the request.

HTTP Verb routing only allows access to one GET operation per parameter/route value match. If more than one method exists with the same parameter signature, it doesn’t work. As I mentioned earlier for the image display, the only solution to get this method to work is to move it into another controller. Because I already set up the AlbumRpcApiController, I can add the method there.
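Here is a deliberately simplified JavaScript model that shows why the request is ambiguous. It is not Web API's actual action selector. The action list, including GetAlbums and GetAlbum, is a hypothetical stand-in for AlbumApiController. The model only captures the rule described above: filter by HTTP verb, then by the parameters the request supplies, and fail if more than one action survives.

```javascript
// Simplified model of HTTP verb routing (not Web API's real selector):
// keep actions whose verb matches and whose parameter names exactly match
// the route values supplied; more than one survivor is an ambiguous request.
function selectAction(actions, verb, routeValues) {
  const supplied = Object.keys(routeValues).sort().join(",");
  const matches = actions.filter(
    (a) => a.verb === verb && [...a.params].sort().join(",") === supplied
  );
  if (matches.length === 0) throw new Error("No action matched the request");
  if (matches.length > 1) {
    throw new Error("Multiple actions were found that match the request: " +
      matches.map((a) => a.name).join(", "));
  }
  return matches[0].name;
}

// Hypothetical action list after adding GetSortableAlbums() to the controller.
const albumApiActions = [
  { name: "GetAlbums", verb: "GET", params: [] },
  { name: "GetAlbum", verb: "GET", params: ["title"] },
  { name: "GetSortableAlbums", verb: "GET", params: [] },
  { name: "DeleteAlbum", verb: "DELETE", params: ["title"] },
];

try {
  selectAction(albumApiActions, "GET", {}); // GET /albums/
} catch (e) {
  console.log(e.message); // Multiple actions were found that match the request: GetAlbums, GetSortableAlbums
}
```

Supplying a title still resolves to a single action. Only the parameterless GET is ambiguous, which is why moving the extra method to a different controller resolves the conflict.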

First, I should rename the method to SortableAlbums() so I’m not using a Get prefix for the method. This also makes the action parameter look cleaner in the URL - it looks less like a method and more like a noun.

I can then create a new route that handles direct-action mapping:

RouteTable.Routes.MapHttpRoute(
    name: "AlbumRpcApiAction",
    routeTemplate: "albums/rpc/{action}/{title}",
    defaults: new
    {
        title = RouteParameter.Optional,
        controller = "AlbumRpcApi",
        action = "GetAlbums"
    }
);

As I am explicitly adding a route segment – rpc – into the route template, I can now reference explicit methods in the Web API controller using URLs like this:

http://localhost/AspNetWebApi/albums/rpc/SortableAlbums
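The following simplified JavaScript model shows the role of the literal rpc segment by matching a path against the albums/rpc/{action}/{title} template. It is an illustration, not ASP.NET's routing engine. Literal segments must match case-insensitively, and placeholders either capture a segment or fall back to a default. null stands in for RouteParameter.Optional. Paths are app-relative, so the /AspNetWebApi virtual directory is not part of them.

```javascript
// Simplified model of matching an app-relative path against a route template.
function matchRoute(template, defaults, path) {
  const tSegs = template.split("/");
  const pSegs = path.split("/").filter((s) => s.length > 0);
  if (pSegs.length > tSegs.length) return null; // too many segments

  const values = { ...defaults };
  for (let i = 0; i < tSegs.length; i++) {
    const t = tSegs[i];
    const p = pSegs[i]; // undefined when the path is shorter than the template
    const placeholder = /^\{(\w+)\}$/.exec(t);
    if (placeholder) {
      const name = placeholder[1];
      if (p !== undefined) values[name] = decodeURIComponent(p);
      else if (!(name in defaults)) return null; // required value missing
    } else if (p === undefined || p.toLowerCase() !== t.toLowerCase()) {
      return null; // a literal segment such as "rpc" did not match
    }
  }
  return values;
}

// Defaults modeled on the AlbumRpcApiAction route registration.
const rpcDefaults = { controller: "AlbumRpcApi", action: "GetAlbums", title: null };
console.log(matchRoute("albums/rpc/{action}/{title}", rpcDefaults, "albums/rpc/SortableAlbums"));
```

A path without the rpc segment, such as albums/SortableAlbums, does not match this template. That leaves such paths to the verb-routed AlbumApiController.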

Error Handling

I’ve already done some minimal error handling in the examples. For example, in Listing 6 I detected some known-error scenarios, like model validation failing or a resource not being found, and returned an appropriate HttpResponseMessage result. But what happens if your code just blows up or causes an exception?

If you have a controller method, like this:

[HttpGet]
public void ThrowException()
{
    throw new UnauthorizedAccessException("Unauthorized Access Sucka");
}

You can call it with this:

http://localhost/AspNetWebApi/albums/rpc/ThrowException

The default exception handling displays a 500-status response with the serialized exception on the local computer only. When you connect from a remote computer, Web API throws back a 500 HTTP Error with no data returned (IIS then adds its HTML error page). The behavior is configurable in the GlobalConfiguration:

GlobalConfiguration.Configuration.IncludeErrorDetailPolicy = IncludeErrorDetailPolicy.Never;

If you want more control over the error responses sent from your code, you can throw explicit error responses yourself using HttpResponseException. When you throw an HttpResponseException, the response you pass to it is used to generate the output for the controller action.

[HttpGet]
public void ThrowError()
{
    var resp = Request.CreateResponse<ApiMessageError>(
                        HttpStatusCode.BadRequest,
                        new ApiMessageError("Your code stinks!"));
    throw new HttpResponseException(resp);
}

Throwing an HttpResponseException stops the processing of the controller method and immediately returns the response you passed to the exception. Unlike other exceptions fired inside of Web API, HttpResponseException bypasses any installed exception filters and instead just outputs the response you provide.

In this case, the serialized ApiMessageError result is returned in the content-negotiated format (XML or JSON, depending on what the client asks for). You can pass any content to HttpResponseMessage, which includes creating your own error objects and consistently returning error messages to the client. Here’s a small helper method on the controller that you might use to send exception info back to the client consistently:

private void ThrowSafeException(string message,
                                HttpStatusCode statusCode = HttpStatusCode.BadRequest)
{
    var errResponse = Request.CreateResponse<ApiMessageError>(statusCode,
                                new ApiMessageError() { message = message });
    throw new HttpResponseException(errResponse);
}

You can then use it to output any captured errors from code:

[HttpGet]
public void ThrowErrorSafe()
{
    try
    {
        List<string> list = null;
        list.Add("Rick");
    }
    catch (Exception ex)
    {
        ThrowSafeException(ex.Message);
    }
}
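A consistent error object only helps if the client's error callback knows how to read it. The helper below is a hypothetical stand-in for the article's jqError handler, whose code isn't shown here. It assumes the error came back as JSON with the message property that ApiMessageError serializes. For anything else, such as an XML result or an HTML error page, it falls back to a fixed string.

```javascript
// Extract the "message" property from an ApiMessageError-style JSON body,
// falling back when the body isn't JSON or has no message.
function errorMessageFrom(responseText, fallback) {
  try {
    const err = JSON.parse(responseText);
    if (err && typeof err.message === "string") return err.message;
  } catch (e) {
    // not JSON (for example an HTML error page): use the fallback below
  }
  return fallback;
}

console.log(errorMessageFrom('{"message":"Your code stinks!"}', "Request failed"));
// in the browser, for example:
// error: function (xhr) { alert(errorMessageFrom(xhr.responseText, "Request failed")); }
```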

Exception Filters

Another, more global solution is to create an Exception Filter. Filters in Web API provide the ability to pre- and post-process controller method operations. An exception filter looks at all exceptions fired and can then optionally create an HttpResponseMessage result. Listing 8 shows an example of a basic Exception filter implementation.

public class UnhandledExceptionFilter : ExceptionFilterAttribute
{
    public override void OnException(HttpActionExecutedContext context)
    {
        HttpStatusCode status = HttpStatusCode.InternalServerError;

        var exType = context.Exception.GetType();
        if (exType == typeof(UnauthorizedAccessException))
            status = HttpStatusCode.Unauthorized;
        else if (exType == typeof(ArgumentException))
            status = HttpStatusCode.NotFound;

        var apiError = new ApiMessageError() { message = context.Exception.Message };

        // create a new response and attach our ApiError object
        // which now gets returned on ANY exception result
        var errorResponse = context.Request.CreateResponse<ApiMessageError>(status, apiError);

        //var errorResponse = context.Request.CreateResponse(HttpStatusCode.BadRequest,
        //                                                   context.Exception.GetBaseException().Message);

        context.Response = errorResponse;

        base.OnException(context);
    }
}

Exception Filter Attributes can be assigned to an ApiController class like this:

[UnhandledExceptionFilter]
public class AlbumRpcApiController : ApiController

or you can globally assign it to all controllers by adding it to the HTTP Configuration's Filters collection:

GlobalConfiguration.Configuration.Filters.Add(new UnhandledExceptionFilter());

The latter is a great way to get global error trapping so that all errors (short of hard IIS errors and explicit HttpResponseException errors) return a valid error response that includes error information in the form of a known-error object. Using a filter like this allows you to throw an exception as you normally would and have your filter create a response in the format the client expects. For example, on failure an AJAX application can expect to see a JSON error result that corresponds to the real error that occurred, rather than a 500 error along with the HTML error page that IIS throws up.

You can even create some custom exceptions so you can differentiate your own exceptions from unhandled system exceptions - you often don't want to display error information from 'unknown' exceptions as they may contain sensitive system information or info that's not generally useful to users of your application/site.
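The policy of exposing messages only from your own exceptions can be sketched as follows. This is a language-neutral model written in JavaScript, not Web API code. SafeApiError is a hypothetical custom error type. toErrorResponse() plays the role that OnException() plays in a filter like UnhandledExceptionFilter, producing a status code plus a body shaped like ApiMessageError.

```javascript
// Hypothetical "known" error type whose message is considered safe to show.
class SafeApiError extends Error {
  constructor(message, status = 400) {
    super(message);
    this.name = "SafeApiError";
    this.status = status;
  }
}

// Turn any thrown error into a { status, body } pair. Only SafeApiError
// messages reach the client; unknown errors are masked with a generic text.
function toErrorResponse(err) {
  if (err instanceof SafeApiError) {
    return { status: err.status, body: { message: err.message } };
  }
  return { status: 500, body: { message: "An unexpected error occurred." } };
}

console.log(toErrorResponse(new SafeApiError("Album not found", 404)));
console.log(toErrorResponse(new TypeError("internal detail")));
```

In C#, the equivalent check in OnException() would test context.Exception against your own exception type before copying its message into the response.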

This is just one example of how ASP.NET Web API is configurable and extensible: exception filters let you plug into the Web API request flow to modify output. Many more hooks exist, and I’ll take a closer look at extensibility in a future Part 2 of this article.

Summary

Web API is a big improvement over previous Microsoft REST and AJAX toolkits. The keys to its usefulness are its ease of use with simple controller-based logic, familiar MVC-style routing, low configuration impact, extensibility at all levels, and close attention to exposing HTTP semantics in a way that is easily discoverable and easy to use. Although none of the concepts used in Web API are new or radical, Web API combines the best of previous platforms into a single framework that’s highly functional, easy to work with, and extensible to boot. I think that Microsoft has hit a home run with Web API.


© Rick Strahl, West Wind Technologies, 2005-2012

![]()

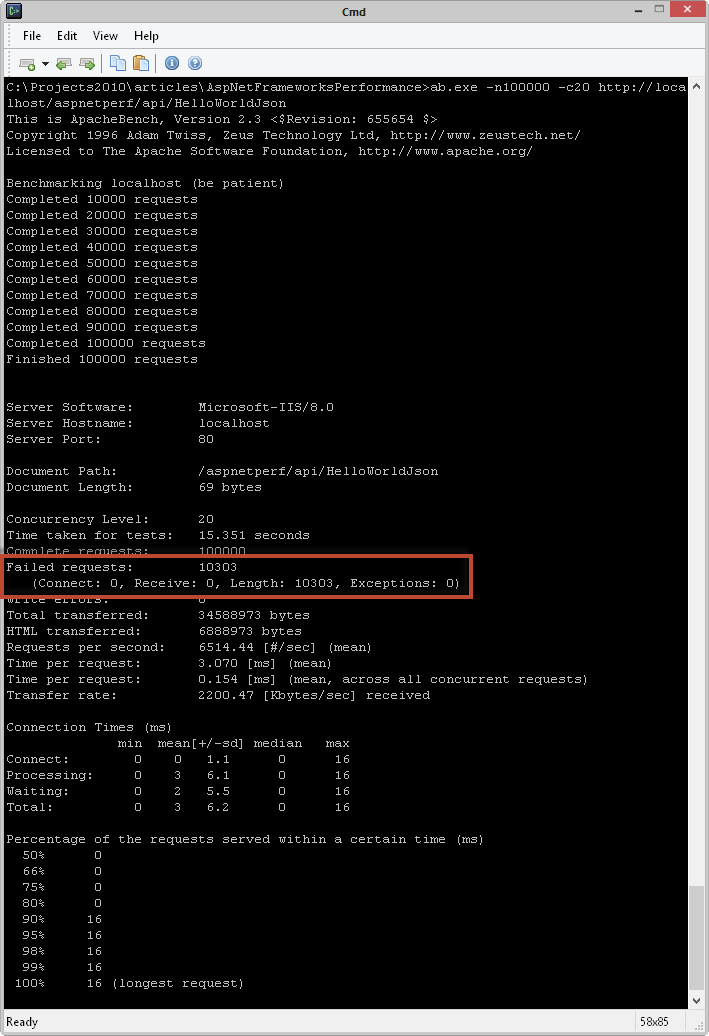

![Console[4] Console[4]](http://www.west-wind.com/Weblog/images/200901/Windows-Live-Writer/ASP.NET-Frameworks-and-Raw-Throughput-Pe_BD12/Console%5B4%5D_thumb.png)

![Results[4] Results[4]](http://www.west-wind.com/Weblog/images/200901/Windows-Live-Writer/ASP.NET-Frameworks-and-Raw-Throughput-Pe_BD12/Results%5B4%5D_thumb.png)

![Results2[4] Results2[4]](http://www.west-wind.com/Weblog/images/200901/Windows-Live-Writer/ASP.NET-Frameworks-and-Raw-Throughput-Pe_BD12/Results2%5B4%5D_thumb.png)