HTML5 sessionStorage and localStorage are very useful and super easy to use for managing client-side state. For building rich client-side or SPA style applications it's vital to be able to cache user data as well as HTML content in order to swap pages in and out of the browser's DOM. What might not be so obvious is that you can also use the sessionStorage and localStorage objects in classic server rendered HTML applications to provide caching features between pages. These APIs have been around for a long time and are supported by most relatively modern browsers, even all the way back to IE8, so you can use them safely in your Web applications.

sessionStorage and localStorage are easy

The APIs that make up sessionStorage and localStorage are very simple. Both objects feature the same interface: a simple, string-based key/value store with getItem, setItem, removeItem, clear and key methods. The objects are also pseudo-array objects: a length property combined with key(index) lets you iterate over the stored items like an array, and you can use indexer syntax (sessionStorage["myKey"]) to set and get values.
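To make the pseudo-array behavior a bit more concrete, here's a minimal sketch that walks every entry with length and key(index). `dumpStorage` is a hypothetical helper name, not part of the API; in the browser you'd pass it window.sessionStorage or window.localStorage, and it works with anything that implements the same Storage interface. The order in which key() returns keys is up to the browser, so don't rely on it.

```javascript
// Hypothetical helper: collect every key/value pair from a Storage object
// (window.sessionStorage or window.localStorage in the browser).
function dumpStorage(storage) {
  var entries = {};
  // length plus key(index) let you walk the store like an array
  for (var i = 0; i < storage.length; i++) {
    var key = storage.key(i);
    entries[key] = storage.getItem(key);   // values always come back as strings
  }
  return entries;
}
```

In the browser console, `dumpStorage(window.sessionStorage)` gives you a quick snapshot of everything stored for the current page's origin.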

Basic usage for storing and retrieval looks like this (using sessionStorage, but the syntax is the same for localStorage - just switch the objects):

```javascript
// set
var lastAccess = new Date().getTime();
if (window.sessionStorage)
    sessionStorage.setItem("myapp_time", lastAccess.toString());

// retrieve in another page or on a refresh
var time = null;
if (window.sessionStorage)
    time = sessionStorage.getItem("myapp_time");

if (time)
    time = new Date(time * 1);
else
    time = new Date();
```

sessionStorage stores data that is browser session specific and has the lifetime of the active browser session or window. Shut down the browser or tab and the storage goes away. localStorage uses the same API interface, but its data is stored permanently in the browser's storage area until it's deleted via code or by clearing out browser cookies (not the cache). Space for both sessionStorage and localStorage is limited. The spec is ambiguous about this: supposedly sessionStorage should allow for unlimited size, but it appears that most WebKit browsers support only 2.5mb for either object. This means you have to be careful what you store, especially since other applications might be running on the same domain and also use the storage mechanisms. That said, 2.5mb worth of character data is quite a bit and would go a long way.
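Because the quota applies to everything stored for the site, it can help to get a rough idea of how much space your own entries take. This is a sketch under a couple of assumptions: `estimateStorageChars` is a hypothetical name, and browsers don't document exactly how they count usage (many store strings as UTF-16), so the result is an approximate character count, not a byte count. The optional prefix restricts the count to one application's keys, such as the myapp_ prefix used above.

```javascript
// Hypothetical helper: approximate the number of characters stored.
// Keys are counted too, since they also occupy space in the store.
function estimateStorageChars(storage, prefix) {
  var total = 0;
  for (var i = 0; i < storage.length; i++) {
    var key = storage.key(i);
    // optionally only count one application's keys, e.g. "myapp_"
    if (prefix && key.indexOf(prefix) !== 0)
      continue;
    var value = storage.getItem(key) || "";
    total += key.length + value.length;
  }
  return total;
}
```

For example, `estimateStorageChars(window.sessionStorage, "myapp_")` tells you roughly how much of the session store your own app is using.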

The easiest way to get a feel for how sessionStorage and localStorage work is to look at a simple example.

You can go check out the following example online in Plunker:

http://plnkr.co/edit/0ICotzkoPjHaWa70GlRZ?p=preview

which looks like this:

![Session Sample Session Sample]()

Plunker is an online HTML/JavaScript editor that lets you write and run JavaScript code, similar to JSFiddle but a bit cleaner to work in IMHO (thanks to John Papa for turning me on to it).

The sample has two text boxes with counts that update session/local storage every time you click the related button. The counts are 'cached' in Session and Local storage. The point of these examples is that both counters survive full page reloads, and the LocalStorage counter survives a complete browser shutdown and restart. Go ahead and try it out by clicking the Reload button after updating both counters and then shutting down the browser completely and going back to the same URL (with the same browser). What you should see is that reloads leave both counters intact at the counted values, while a browser restart will leave only the local storage counter intact.

The code to deal with the SessionStorage (and LocalStorage not shown here) in the example is isolated into a couple of wrapper methods to simplify the code:

```javascript
function getSessionCount() {
    var count = 0;
    if (window.sessionStorage) {
        count = sessionStorage.getItem("ss_count");
        count = !count ? 0 : count * 1;
    }
    $("#txtSession").val(count);
    return count;
}

function setSessionCount(count) {
    if (window.sessionStorage)
        sessionStorage.setItem("ss_count", count.toString());
}
```

These two functions load and store a session counter value. The two key methods used here are:

- sessionStorage.getItem(key);

- sessionStorage.setItem(key,stringVal);
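The LocalStorage half of the sample isn't shown, but since both objects share one interface the counter logic doesn't have to be duplicated. Here's a hypothetical storage-agnostic sketch; `getCount` and `incrementCount` are illustrative names, and the caller chooses which storage object to pass:

```javascript
// Hypothetical storage-agnostic counter helpers.
// Pass window.sessionStorage or window.localStorage as `storage`.
function getCount(storage, key) {
  if (!storage)
    return 0;
  var value = storage.getItem(key);   // null when the key doesn't exist yet
  var count = parseInt(value, 10);
  return isNaN(count) ? 0 : count;    // missing or non-numeric values count as 0
}

function incrementCount(storage, key) {
  var count = getCount(storage, key) + 1;
  if (storage)
    storage.setItem(key, count.toString());   // stored as a string
  return count;
}
```

In the sample page you could wire this up as `$("#txtSession").val(incrementCount(window.sessionStorage, "ss_count"));`, and the same call with window.localStorage and a different key (say, a hypothetical "ls_count") gives you the persistent counter.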

Note that getItem always returns a string (or null if the key doesn't exist), and setItem converts whatever value you pass into a string: a number comes back as "8", and a plain object comes back as the useless "[object Object]". Don't let that limit you though: you can easily serialize data to JSON, so it's quite possible to store complex objects in a single sessionStorage value:

```javascript
var user = { name: "Rick", id: "ricks", level: 8 };
sessionStorage.setItem("app_user", JSON.stringify(user));
```

To retrieve it:

```javascript
var user = sessionStorage.getItem("app_user");
if (user)
    user = JSON.parse(user);
```

Simple!
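If you do this a lot, it's worth wrapping the JSON round trip in a couple of helpers so a corrupted or hand-edited value doesn't throw when the page loads. This is a sketch with hypothetical names (`setJSON`, `getJSON`); it uses only the standard getItem, setItem and removeItem methods:

```javascript
// Hypothetical helpers that store any JSON-serializable value under one key.
function setJSON(storage, key, value) {
  storage.setItem(key, JSON.stringify(value));
}

function getJSON(storage, key) {
  var json = storage.getItem(key);
  if (json === null)
    return null;                 // nothing stored under this key
  try {
    return JSON.parse(json);
  } catch (e) {
    // corrupt or non-JSON value: discard it rather than breaking the page
    storage.removeItem(key);
    return null;
  }
}
```

Keep in mind that JSON only round-trips plain data: a Date property, for example, comes back as an ISO date string that you have to turn back into a date with new Date(...).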

If you're using the Chrome Developer Tools (F12) you can also check out the session and local storage state on the Resources tab:

![SessionDebugger SessionDebugger]()

You can also use this tool to refresh or remove entries from storage.

What we just looked at is a purely client side implementation where a couple of counters are stored. For rich client centric AJAX applications sessionStorage and localStorage provide a very nice and simple API to store application state while the application is running.

But you can also use these storage mechanisms in server centric HTML applications when you combine server rendering with some JavaScript to perform client side data caching. You can store state information and data on the client (i.e. store a JSON object and carry it forward between server rendered HTML requests), or you can use it for good old-fashioned content caching, where some rendered HTML is saved and then restored later.

Let's look at the latter with a real life example.

Why do I need Client-side Page Caching for Server Rendered HTML?

I don't know about you, but in a lot of my existing server driven applications I have lists that display a fair amount of data. Typically these lists contain links to then drill down into more specific data either for viewing or editing. You can then click on a link and go off to a detail page that provides more concise content.

So far so good. But now you're done with the detail page and need to get back to the list, so you click on a 'bread crumbs trail' or an application level 'back to list' button and…

…you end up back at the top of the list: the scroll position, the current selection, in some cases even filter conditions, all gone with the wind. You've left behind the state of the list and are starting from scratch in your browsing of the list from the top. Not cool!

Sound familiar? This is a pretty common scenario with server rendered HTML content, where it's common to display lists to drill into, only to lose state in the process of returning to the original list. Look at just about any traditional forums application, or even Stack Overflow, to see what I mean here. Scroll down a bit to look at a post or entry, drill in, then use the bread crumbs or tab to go back…

In some cases returning to the top of a list is not a big deal. On Stack Overflow that sort of works because content turns over so quickly that you probably want to actually look at the top posts. Not always though: if you're browsing through a list of search topics you're interested in and drill in, there's no way back to that position. Essentially anytime you're actively browsing the items in a list, state becomes important, and if it's not handled the user experience can be really disruptive.

Content Caching

If you're building client centric SPA style applications this is a fairly easy problem to solve: you tend to render the list once and then update the page content to overlay the detail content, only hiding the list temporarily until it's used again later. It's relatively easy to accomplish this simply by hiding content on the page and later making it visible again.

But if you use server rendered content, hanging on to all the details like filters, selections and scroll position is not quite as easy. Or is it???

This is where sessionStorage comes in handy. What if we just save the rendered content of a previous page, and then restore it when we return to this page based on a special flag that tells us to use the cached version? Let's see how we can do this.

A Real World Use Case

Recently my local ISP asked me to help out with updating an ancient classifieds application. They had a very busy, local classifieds app that was originally an ASP classic application. The old app was - wait for it: frames based - and even though I lobbied against it, the decision was made to keep the frames based layout to allow rapid browsing of the hundreds of posts that are made on a daily basis. The primary reason they wanted this was precisely for the ability to quickly browse content item by item. While I personally hate working with Frames, I have to admit that the UI actually works well with the frames layout as long as you're running on a large desktop screen. You can check out the frames based desktop site here:

http://classifieds.gorge.net/

However when I rebuilt the app I also added a secondary view that doesn't use frames. The main reason for this of course was mobile displays, which work horribly with frames. So there's a somewhat mobile-friendly version of the interface, which ditches the frames and uses some responsive design tweaking for mobile capable operation:

![QrCodeList QrCodeList]()

http://classifieds.gorge.net/mobile

(or browse the base url with your browser width under 800px)

Here's what the mobile, non-frames view looks like:

![ClassifiedsListing[4] ClassifiedsListing[4]]()

![ClassifiedsView ClassifiedsView]()

As you can see this means that the list of classifieds posts now is a list and there's a separate page for drilling down into the item. And of course… originally we ran into that usability issue I mentioned earlier where the browse, view detail, go back to the list cycle resulted in lost list state. Originally in mobile mode you scrolled through the list, found an item to look at and drilled in to display the item detail. Then you clicked back to the list and BAM - you've lost your place.

Because there are so many items added on a daily basis, the full list is never loaded at once; instead there's a "Load Additional Listings" entry at the bottom. Not only did we originally lose our place when coming back to the list, but any 'additionally loaded' items were no longer there because the list was now rendering as if it was the first page hit. The additional listings, any filters and the selected item were all lost. Major Suckage!

Using Client SessionStorage to cache Server Rendered Content

To work around this problem I decided to cache the rendered page content from the list in sessionStorage. Anytime the list renders or is updated with Load Additional Listings, the page HTML is cached and stored in sessionStorage. Any back links from the detail page or the login or write entry forms then point back to the list page with a back=true query string parameter. If the server side sees this parameter it doesn't render the part of the page that is cached. Instead the client side code retrieves the data from the sessionStorage cache and simply inserts it into the page.

It sounds pretty simple, and overall the process is really easy, but there are a few gotchas that I'll discuss in a minute. But first let's look at the implementation.

Let's start with the server side because that'll give a quick idea of the document structure. The server renders the list from an ASP.NET MVC view. When you return to the list page from the display page (or a host of other pages), the URL looks like this:

https://classifieds.gorge.net/list?back=True

The query string value is a flag that tells the server whether to render the cacheable part of the page. Here's what the top level MVC Razor view for the list page looks like:

```html
@model MessageListViewModel
@{
    ViewBag.Title = "Classified Listing";
    bool isBack = !string.IsNullOrEmpty(Request.QueryString["back"]);
}
<form method="post" action="@Url.Action("list")">
    <div id="SizingContainer">
        @if (!isBack)
        {
            @Html.Partial("List_CommandBar_Partial", Model)
            <div id="PostItemContainer" class="scrollbox" xstyle="-webkit-overflow-scrolling: touch;">
                @Html.Partial("List_Items_Partial", Model)
                @if (Model.RequireLoadEntry)
                {
                    <div class="postitem loadpostitems" style="padding: 15px;">
                        <div id="LoadProgress" class="smallprogressright"></div>
                        <div class="control-progress">Load additional listings...</div>
                    </div>
                }
            </div>
        }
    </div>
</form>
```

As you can see, when the back flag is set the conditional block is simply not rendered. The content inside #SizingContainer basically holds the entire page's HTML sans the headers and scripts, but including the filter options and menu at the top. In this case this makes good sense; in other situations, if the menu or filter options are dynamically updated, you might cache only the list rather than essentially the entire page. In this particular instance all of the content works and produces the proper result, as both the list and any filter conditions in the form inputs are restored.

Ok, let's move on to the client. On the client there are two page level functions that deal with saving and restoring state. Like the counter example I showed earlier, I like to wrap the logic to save and restore values from sessionStorage into separate functions because they are almost always used in several places.

```javascript
page.saveData = function(id) {
    if (!window.sessionStorage)
        return;

    var data = {
        id: id,
        scroll: $("#PostItemContainer").scrollTop(),
        html: $("#SizingContainer").html()
    };
    sessionStorage.setItem("list_html", JSON.stringify(data));
};

page.restoreData = function() {
    if (!window.sessionStorage)
        return null;

    var data = sessionStorage.getItem("list_html");
    if (!data)
        return null;
    return JSON.parse(data);
};
```

The saved data is an object that contains the id of the element the user clicked and the scroll position of the list. These two values are used to reset the selection and scroll position when the data is restored from the cache. Finally the HTML from the #SizingContainer element is stored, which makes up the bulk of the document's HTML.
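One thing these two functions don't handle is a full store: setItem throws (typically a QuotaExceededError) when there's no room left, which could happen with a long, fully loaded list. Here's a hypothetical variant (`saveListState` and `restoreListState` are illustrative names) that takes the storage object and the captured values as parameters, so the jQuery calls stay in the page code:

```javascript
// Hypothetical variant of page.saveData()/page.restoreData() that survives
// quota errors and unreadable cache entries.
var LIST_CACHE_KEY = "list_html";

function saveListState(storage, id, scroll, html) {
  if (!storage)
    return false;
  var data = { id: id, scroll: scroll, html: html };
  try {
    storage.setItem(LIST_CACHE_KEY, JSON.stringify(data));
    return true;
  } catch (e) {
    // setItem throws when the store is full; drop the stale copy so a
    // later restore can't bring back an outdated list
    storage.removeItem(LIST_CACHE_KEY);
    return false;
  }
}

function restoreListState(storage) {
  if (!storage)
    return null;
  var json = storage.getItem(LIST_CACHE_KEY);
  if (!json)
    return null;
  try {
    return JSON.parse(json);
  } catch (e) {
    return null;   // treat an unreadable entry the same as no cache
  }
}
```

In the page you'd call it as `saveListState(window.sessionStorage, id, $("#PostItemContainer").scrollTop(), $("#SizingContainer").html())`. Removing the old entry when the save fails is a deliberate choice: restoring a stale copy of the list would be more confusing than falling back to a fresh server render.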

In this application the captured HTML can be a substantial chunk of data. If you recall, I mentioned that the server side code renders a small chunk of data initially and then gets more data if the user reads through the first 50 or so items. The additional items retrieved can make the cached HTML rather sizable. Other than the cost of JSON serialization that's OK, but remember that sessionStorage space is limited as discussed earlier, so a very long list could eventually run into the quota.

Next is the core logic to handle saving and restoring the page state. At first thought this would seem pretty simple, and in some cases it might be, but as the following code demonstrates there are a few gotchas to watch out for. Here's the relevant code I use to save and restore:

```javascript
$( function() {
    …
    var isBack = getUrlEncodedKey("back", location.href);
    if (isBack) {
        // remove the back key from URL
        setUrlEncodedKey("back", "", location.href);

        // restore from sessionStorage
        var data = page.restoreData();
        if (!data) {
            // no data - force redisplay of the server side default list
            window.location = "list";
            return;
        }

        $("#SizingContainer").html(data.html);

        // re-select and highlight the item the user clicked, then restore scroll
        var el = $('.postitem[data-id="' + data.id + '"]');
        $(".postitem").removeClass("highlight");
        el.addClass("highlight");
        $("#PostItemContainer").scrollTop(data.scroll);

        setTimeout(function() { el.removeClass("highlight"); }, 2500);
    }
    else if (window.noFrames)
        page.saveData(null);   // save when page loads

    $("#SizingContainer").on("click", ".postitem", function() {
        var id = $(this).attr("data-id");
        if (!id)
            return true;

        if (window.noFrames)
            page.saveData(id);

        // in the frames view, load the item into the Content frame
        var contentFrame = window.parent.frames["Content"];
        if (contentFrame)
            contentFrame.location.href = "show/" + id;
        else
            window.location.href = "show/" + id;

        return false;
    });
    …
```

The code starts out by checking for the back query string flag, which triggers restoring from the client cache. If the flag is set, the cached data structure is read from sessionStorage. It's important here to check whether data was returned. If the user had back=true on the query string but there is no cached data, they likely bookmarked this page or shut down the browser and came back to this URL. In that case the server didn't render any detail and we have no cached data, so all we can do is redirect to the original default list view using window.location. If we continued, the page would render no data, so make sure to always check the cache retrieval result. Always!
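The getUrlEncodedKey() and setUrlEncodedKey() helpers aren't shown in the post. As an alternative sketch, the standard URL and URLSearchParams classes can read and strip the flag, and the "always check the cache" rule can be pulled into a small function that's easy to test. All three function names and the returned action values below are hypothetical:

```javascript
// Sketch using the standard URL API in place of the post's own helpers.
function isBackRequest(href) {
  // mirrors the server's !string.IsNullOrEmpty(Request.QueryString["back"])
  return !!new URL(href).searchParams.get("back");
}

function withoutBackFlag(href) {
  var url = new URL(href);
  url.searchParams.delete("back");
  return url.toString();
}

// Hypothetical: decide what the list page should do on load.
// cachedJson is the raw string from sessionStorage.getItem("list_html"), or null.
function decideListRender(href, cachedJson) {
  if (!isBackRequest(href))
    return { action: "save" };                  // server rendered the list: cache it
  var data = null;
  try {
    data = cachedJson ? JSON.parse(cachedJson) : null;
  } catch (e) {
    data = null;                                // unreadable cache counts as missing
  }
  if (!data || typeof data.html !== "string")
    return { action: "redirect", url: "list" }; // server rendered nothing: Plan B
  return { action: "restore", data: data };
}
```

In the ready handler you'd call `decideListRender(location.href, sessionStorage.getItem("list_html"))` and then save, restore or redirect based on the action. To drop the flag from the address bar without reloading, `history.replaceState(null, "", withoutBackFlag(location.href))` does the job in browsers that support the History API.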

If there is data, the data.html content is restored by simply injecting the HTML back into the document's #SizingContainer element:

```javascript
$("#SizingContainer").html(data.html);
```

It's that simple and it's quite quick even with a fully loaded list of additional items and on a phone.

The actual HTML data is stored to the cache on every page load initially and then again when the user clicks on an element to navigate to a particular listing. The former ensures that the client cache always has something in it, and the latter updates with additional information for the selected element.

For the click handling I use a data-id attribute on the list item (.postitem) and retrieve the id from that. The id is then used to navigate to the actual entry and is also stored in the cached data. On restore, the id is used to reset the selection by searching for the matching data-id value in the restored elements.

The overall save/restore process is pretty straightforward and doesn't require a bunch of code, yet it yields a huge improvement in the usability of the site on mobile devices (or for anybody who uses the non-frames view).

Some things to watch out for

As easy as it conceptually seems to simply store and retrieve cached content, you have to be quite aware of what type of content you are caching. The code above is all that's specific to the cache/restore cycle and it works, but it took a few tweaks to the rest of the script code and server code to make it all work. There were a few gotchas that weren't immediately obvious.

Here are a few things to pay attention to:

JavaScript Event Hookups

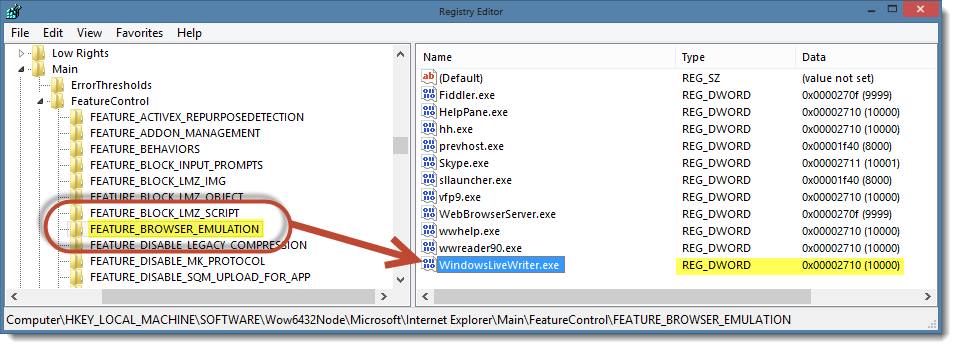

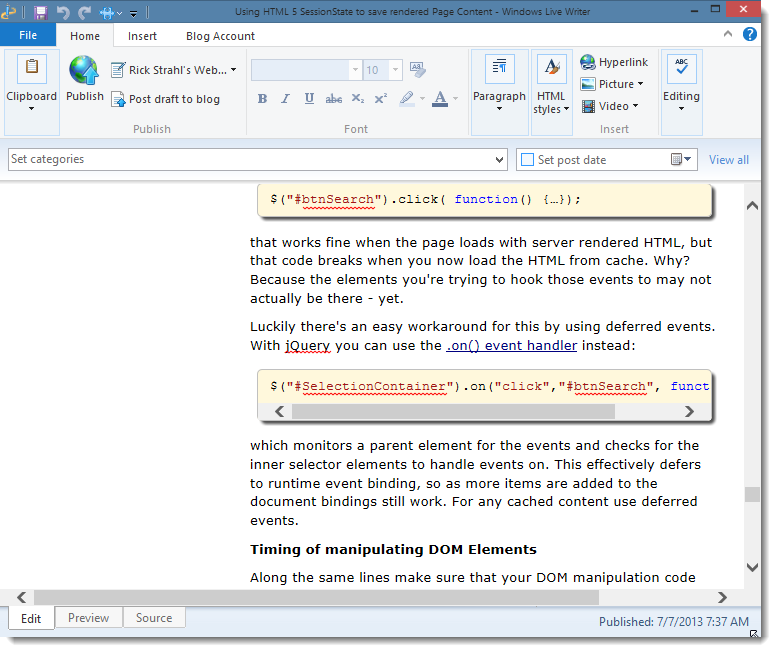

The biggest issue I ran into with this approach almost immediately is that originally I had various static event handlers hooked up to various UI elements that are now cached. If you have an event handler like:

```javascript
$("#btnSearch").click(function() { … });
```

That works fine when the page loads with server rendered HTML, but the code breaks when you load the HTML from cache. Why? Because the elements you're trying to hook those events to may not actually be there yet.

Luckily there's an easy workaround: use delegated (deferred) events. With jQuery you can use the .on() method with a selector argument instead:

```javascript
$("#SelectionContainer").on("click", "#btnSearch", function() { … });
```

This monitors a parent element for the events and checks whether they originated from elements matching the inner selector. Because the match happens when the event fires rather than when the handler is attached, bindings keep working as more items are added to the document. The parent element itself has to stay in the page, so it shouldn't be part of the content that gets replaced. For any cached content, use delegated events.
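If you're not using jQuery, the same delegation pattern takes only a few lines of plain DOM code. This is a minimal sketch; `delegate` is a hypothetical helper, and it relies on Element.closest(), which older browsers (including the IE versions mentioned earlier) don't support:

```javascript
// Hypothetical plain-DOM equivalent of jQuery's delegated .on():
// one listener on a container that stays in the page handles events for
// matching descendants, including ones injected later from the cache.
function delegate(container, eventType, selector, handler) {
  function listener(event) {
    // closest() walks up from the event target to the first match (or null)
    var match = event.target.closest ? event.target.closest(selector) : null;
    // ignore matches that live outside the container
    if (match && container.contains(match))
      handler.call(match, event);   // `this` is the matched element, as in jQuery
  }
  container.addEventListener(eventType, listener);
  return listener;                  // keep it if you need removeEventListener later
}
```

For example, `delegate(document.getElementById("SizingContainer"), "click", "#btnSearch", function (e) { /* search logic */ });` works here because #SizingContainer itself stays in the page; only its contents get replaced from the cache.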

Timing of manipulating DOM Elements

Along the same lines make sure that your DOM manipulation code follows the code that loads the cached content into the page so that you don't manipulate DOM elements that don't exist just yet. Ideally you'll want to check for the condition to restore cached content towards the top of your script code, but that can be tricky if you have components or other logic that might not all run in a straight line.

Inline Script Code

Here's another small problem I ran into: I use a DateTime Picker widget I built a while back that relies on the jQuery date time picker. I also created a helper function that allows keyboard date navigation into it using JavaScript logic. Because of MVC's limited 'object model', the only way to embed widget content into the page is through inline script.

This code broke when I inserted the cached HTML into the page because the script code was not available when the component actually got injected into the page. As with the previous point, it's a matter of timing. There's no good workaround for this. In my case I pulled out the jQuery date picker and relied on native <input type="date" /> logic instead, a better choice these days anyway, especially since this view is meant primarily to serve mobile devices, which actually support date input through the browser (unlike desktop browsers, of which only WebKit seems to support it).

Bookmarking Cached Urls

When you cache HTML content you have to decide whether you cache on the client and also skip rendering that same content on the server. In the Classifieds app I didn't render server side content, so if the user comes to the page with back=True and there is no cached content I have to have a Plan B. Typically this happens when somebody ends up bookmarking the back URL.

The easiest and safest solution for this scenario is to ALWAYS check the cache result to make sure it exists and if not have a safe URL to go back to - in this case to the plain uncached list URL which amounts to effectively redirecting.

This seems really obvious in hindsight, but it's easy to overlook and not see a problem until much later, when it's not obvious at all why the page is not rendering anything.

Don't use <body> to replace Content

Since we're practically replacing all the HTML in the page, it may seem tempting to simply replace the HTML content of the <body> tag. Don't. The body tag usually contains key things that should stay in the page and be there when it loads: specifically script tags, top level forms and possibly other embedded content. It's best to create a top level DOM element specifically as a placeholder container for your cached content that wraps just the content you want to replace. In the app above #SizingContainer is that container.

Other Approaches

The approach I've used for this application is kind of specific to the existing server rendered application we're running and so it's just one approach you can take with caching. However for server rendered content caching this is a pattern I've used in a few apps to retrofit some client caching into list displays. In this application I took the path of least resistance to the existing server rendering logic.

Here are a few other ways that come to mind:

- Using Partial HTML Rendering via AJAX

Instead of rendering the page initially on the server, the page would load empty and the client would render the UI by retrieving the respective HTML from a Partial View and embedding it into the page. This effectively makes the initial rendering and the cached rendering logic identical and removes the need for the server to decide whether a request needs to be rendered or not (i.e. no checking for a back=true switch). All the logic related to caching is handled on the client in this case.

- Using JSON Data and Client Rendering

The hardcore client option is to build the whole UI SPA style: pull JSON data from the server and render it on the client using templates or client side data binding with Knockout/Angular et al. As with the partial rendering approach, the advantage is that there's no difference in the logic between pulling the data from cache or rendering from scratch, other than the initial check for the cache request. Of course if the app is a full on SPA app, caching may not even be required: the list could just stay in memory and be hidden and reactivated.
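To illustrate why the JSON approach makes cached and fresh rendering identical, here's a small sketch in which one render function handles both cases. It's hypothetical throughout: the list_items key, the item fields (id, title) and the function names are assumptions, and plain string building with HTML escaping stands in for a real templating or data-binding library:

```javascript
// Hypothetical sketch: one render path for fresh and cached JSON data.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")     // must run first so later entities aren't re-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Builds the list markup; stands in for a template or data-binding library.
function renderPostItems(items) {
  return items.map(function (item) {
    return '<div class="postitem" data-id="' + escapeHtml(item.id) + '">' +
      escapeHtml(item.title) + "</div>";
  }).join("");
}

// freshItems: array from the server, or null to render from the cache.
function renderList(storage, freshItems) {
  var items = freshItems;
  if (items) {
    storage.setItem("list_items", JSON.stringify(items));   // cache for later
  } else {
    var cached = storage.getItem("list_items");
    items = cached ? JSON.parse(cached) : [];
  }
  return renderPostItems(items);
}
```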

I'm sure there are a number of other ways this can be handled as well especially using AJAX. AJAX rendering might simplify the logic, but it also complicates search engine optimization since there's no content loaded initially. So there are always tradeoffs and it's important to look at all angles before deciding on any sort of caching solution in general.
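For the partial rendering approach, the cache check can live entirely in the client. The sketch below is hypothetical: `loadListHtml`, the cache key format and the partial's URL are assumptions, and it uses the newer fetch API where the code in this post would more likely use jQuery's $.get(). The fetch function is passed in so the logic can be exercised outside a browser:

```javascript
// Hypothetical cache-first loader for the "partial HTML via AJAX" approach.
//   storage  - window.sessionStorage in the browser
//   fetchFn  - a fetch-compatible function (window.fetch in the browser)
//   url      - endpoint that returns the list partial (assumed)
//   useCache - true when returning to the list (e.g. the back flag is set)
function loadListHtml(storage, fetchFn, url, useCache) {
  var cacheKey = "list_partial:" + url;
  if (useCache) {
    var cached = storage.getItem(cacheKey);
    if (cached !== null)
      return Promise.resolve(cached);      // reuse the previously rendered HTML
  }
  return fetchFn(url)
    .then(function (response) {
      if (!response.ok)
        throw new Error("HTTP " + response.status);
      return response.text();
    })
    .then(function (html) {
      try {
        storage.setItem(cacheKey, html);   // refresh the cache for next time
      } catch (e) {
        // quota exceeded: still render the list, just without caching it
      }
      return html;
    });
}
```

In the browser: `loadListHtml(window.sessionStorage, window.fetch.bind(window), "list/items", isBack).then(function (html) { $("#SizingContainer").html(html); });` If the cache write fails because the quota is exhausted, the list still renders; it just won't be restored from cache next time.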

State of the Session

sessionStorage and localStorage are easy to use in client code and can be integrated even with server centric applications to provide nice caching features for content and data. In this post I've shown a very specific scenario of storing HTML content for the purpose of remembering list view data and state, making the browsing experience for lists a bit more friendly, especially if there's dynamically loaded content involved.

Always keep in mind that both sessionStorage and localStorage have size limits that apply per origin (scheme, host and port), so keep item storage optimized by removing storage items you no longer need to avoid overflowing the available storage space.
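A simple way to follow that advice is to give all of an application's keys a common prefix (like the myapp_ prefix in the first example) and clear them out in one go when they're no longer needed. `removeByPrefix` is a hypothetical helper; note that it collects the keys first, because removing items while walking the store by index shifts the remaining keys:

```javascript
// Hypothetical cleanup helper: remove every key that starts with an app prefix
// such as "myapp_", leaving other applications' entries on the origin alone.
function removeByPrefix(storage, prefix) {
  var doomed = [];
  // collect first: removing while iterating by index would skip keys
  for (var i = 0; i < storage.length; i++) {
    var key = storage.key(i);
    if (key !== null && key.indexOf(prefix) === 0)
      doomed.push(key);
  }
  doomed.forEach(function (key) { storage.removeItem(key); });
  return doomed.length;   // number of entries removed
}
```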

If you haven't played with sessionStorage or localStorage I encourage you to give it a try. It's highly useful when it comes to caching information and managing client state even in primarily server driven applications. Check it out…


© Rick Strahl, West Wind Technologies, 2005-2013
