In the last few months I’ve been posting a lot of entries related to some of the work I’ve been doing on the West Wind Globalization 2.0 library. It’s been a long time coming, and I’m happy to announce that the new version is now officially released at version number 2.1.

Version 2.0 has many major improvements, including support for multiple database backends (SQL Server, MySQL, SQLite and SQL CE, and you can create your own), a much cleaner and more responsive JavaScript-based Resource Editor interface, a new Strongly Typed Class Generator whose generated classes can be switched between Resx and database resources, an updated JavaScript Resource Handler that serves server-side resources (either Db or Resx) to client-side JavaScript applications, and much improved support for interactive resource editing. There have also been a ton of bug fixes, thanks to the many reports that came in as a result of the recent blog posts, which is awesome.

Because there’s so much new and different in version 2.0, I’ve created a new 25-minute Introduction to West Wind Globalization video, which is a combination of feature overview and getting-started guide. I realize 25 minutes isn’t exactly short, but it covers a lot of ground beyond the basics: features, background info and how-tos. So take a look…

What follows is more detailed information on most of the same topics covered in the video.

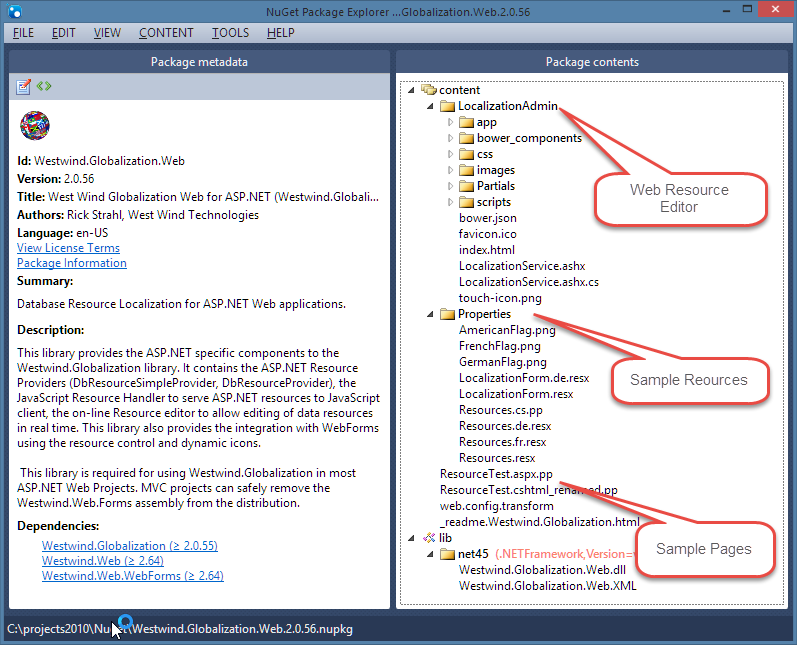

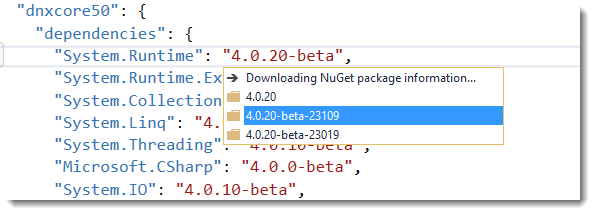

The NuGet Packages

To get started in a Web Project it's best to install the Starter package, which includes some sample resources you can import and a test page you can use to test resources with.

PM> Install-Package Westwind.Globalization.Web.Starter

Once you're up and running and have had a chance to play with your resources – you can remove the starter package leaving the base packages in place.

If you don't want sample resources and a test page, you can install:

PM> Install-Package Westwind.Globalization.Web

If you're not using a Web project, or you're using only MVC/Web API and don't need the Web Resource Editor, you can just install the core package:

PM> Install-Package Westwind.Globalization

Please watch the video, or read the Installation Section of the Wiki or the GitHub homepage, all of which describe how to install the packages, configure your project, import existing resources (if any) and then quickly start creating new ones.

Here are a few more resources that you can jump to:

What is West Wind Globalization

Before I go over what’s new in this release, let me give a quick overview of what this library provides. Here are a few of the key features:

- Database Resource Provider

- Database Resource Manager

- SQL Server, MySQL, SQLite, SQL CE

- Interactive Web Resource Editor

- Keyboard optimized resource entry

- Translate resources with Google and Bing

- Use Markdown in text resources for basic CMS-like functionality

- Support for interactive linking of content to resources

- Import and export Resx resources

- Generate strongly typed classes from Db resources (supports Db and Resx)

- Serve .NET resources to JavaScript

- Release and reload resources

- Create your own DbProviders

- Open source on GitHub - MIT licensed

Database ResourceManager and ResourceProviders

Traditional ASP.NET localization supports only Resx resources, which store resource information in static XML files that are compiled into the binaries of your application. Because resources are static and compiled, they tend to be fairly unwieldy to work with when it comes to localizing your application. You have to use the tools available in Visual Studio, or whatever custom tooling you end up building, to manage resources stored in XML files, and any resource change requires a recompile of the application.

West Wind Globalization uses the same .NET resource models (ResourceManagers and ResourceProviders) and adds support for retrieving and updating resources using a database. Database resources are much easier to work with when it comes to localizing an application, and the library ships with a powerful Web-based Resource Editor that lets you edit your application resources in real time while the application is running. You can force resources to be reloaded, so any changes become immediately visible.

Using database resources doesn’t mean that every resource is loaded from the database each time it is accessed. Rather, the database is queried for an entire ResourceSet and locale at once, just like Resx resources. Resources are then cached by the native .NET resource architecture (the .NET ResourceManager or the ASP.NET ResourceProvider) and stay in memory for the lifetime of the application, unless explicitly unloaded. Apart from the initial load, using database resources is no less efficient than using Resx resources.
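To make the load-once behavior concrete, here is a minimal JavaScript sketch of a cache keyed by ResourceSet and locale. It is not the library's code (that lives in .NET); `ResourceSetCache` and the `loadResourceSet` callback are hypothetical names, with the callback standing in for a database query that returns every resource of one set for one locale. `release()` models explicitly unloading resources so the next access reloads them.

```javascript
// Hypothetical sketch: one database round trip per ResourceSet + locale, then memory.
// loadResourceSet(resourceSet, localeId) is an assumed stand-in for a query that
// returns all { resourceId: value } pairs for that set and locale.
class ResourceSetCache {
  constructor(loadResourceSet) {
    this.loadResourceSet = loadResourceSet;
    this.sets = new Map(); // key "ResourceSet|LocaleId" -> { resourceId: value }
  }

  getString(resourceId, resourceSet, localeId) {
    const key = `${resourceSet}|${localeId}`;
    if (!this.sets.has(key)) {
      // First access for this set/locale: load the whole set at once.
      this.sets.set(key, this.loadResourceSet(resourceSet, localeId));
    }
    return this.sets.get(key)[resourceId];
  }

  // Drop all cached sets so the next access reloads edited values from the store.
  release() {
    this.sets.clear();
  }
}

// Usage: two lookups in the same set and locale trigger only one load.
let loads = 0;
const cache = new ResourceSetCache((set, locale) => {
  loads++;
  return locale === "de" ? { HelloWorld: "Hallo Welt", Save: "Speichern" } : {};
});
cache.getString("HelloWorld", "Resources", "de");
cache.getString("Save", "Resources", "de");
console.log(loads); // 1
```

A real .NET ResourceManager also falls back through parent cultures (de-DE to de to the invariant culture); the sketch leaves that out to keep the caching idea visible.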

If you don’t want to use database resources in production, you can import Resx resources, run with a database provider during development and edit the resources interactively, then export the resources back to Resx and compile them for your production environment. West Wind Globalization makes it easy to import and export resources, and to create strongly typed resources that work with either Resx or database resources. Switching between Resx and Db providers is as easy as switching a flag value.

The goal of this library is to give you options to work with resources the way you want, and to make it easier to add, update and generally manage resources. You can use the Web Resource Editor, the code API, or the database directly to interact with your resources. If you have an interactive resource environment in production, you might also like the Markdown feature built into the library, which allows you to flag resources as Markdown content that is automatically turned into HTML when the resources are loaded into a resource set. Combined with the interactive resource linking features, this allows you to use Db resources as a poor man’s CMS, where you can edit content interactively using the Web Resource Editor interface.

The advantage of database resources for a typical Web application is that you can interactively edit and refresh resources so you can quickly see what the results of localizations look like in your applications as you are localizing.


Support for Multiple Database Providers

The previous version of Westwind.Globalization only supported Microsoft SQL Server. A lot of feedback has come in over the years asking for support of other SQL backends. The new version adds multiple database providers that can connect your resources to several different databases, with an extensible model you can use to hook up additional providers. Out of the box, MS SQL Server, MySQL, SQLite and SQL CE are supported. You can also create your own providers and hook up any other data source. Another relational SQL backend accessed through ADO.NET can be hooked up by subclassing the DbResourceDataManager class and overriding the few methods whose SQL doesn't fit the stock syntax. Other providers (a MongoDb provider, for example) require a bit more work, as the DbResourceDataManager API is fairly big, but the model allows hooking up any kind of data store. As long as you can read and write the data store, you can serve its contents as resources.

Switching providers requires only adding the appropriate ADO.NET data provider, setting the DbResourceManagerType and providing a connection string.
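The "override only the methods that don't fit the stock SQL" approach is essentially a template method: a base class builds the shared SQL, and a dialect subclass replaces only what differs. The sketch below illustrates that pattern in JavaScript; the class names, the `Localizations` table and its column names are assumptions for illustration, not the library's actual .NET data manager API.

```javascript
// Hypothetical sketch of a base data manager with dialect-specific overrides.
// Table and column names are illustrative assumptions.
class SqlServerResourceData {
  constructor(tableName = "Localizations") {
    this.tableName = tableName;
  }

  // SQL Server quotes identifiers with square brackets.
  quote(identifier) {
    return `[${identifier}]`;
  }

  // Shared query used by every dialect: load one ResourceSet for one locale,
  // with parameter placeholders rather than concatenated values.
  selectResourceSetSql() {
    const q = (name) => this.quote(name);
    return (
      `SELECT ${q("ResourceId")}, ${q("Value")} FROM ${q(this.tableName)} ` +
      `WHERE ${q("ResourceSet")} = @ResourceSet AND ${q("LocaleId")} = @LocaleId`
    );
  }
}

// A MySQL flavor only overrides the piece of syntax that differs.
class MySqlResourceData extends SqlServerResourceData {
  quote(identifier) {
    return `\`${identifier}\``;
  }
}

console.log(new MySqlResourceData().selectResourceSetSql());
```

Keeping the query shape in one place means a new dialect is a handful of small overrides rather than a rewrite of the data access code.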

For more information on how to use providers other than SQL Server, see this wiki entry:

Non SQL Server Provider Configuration

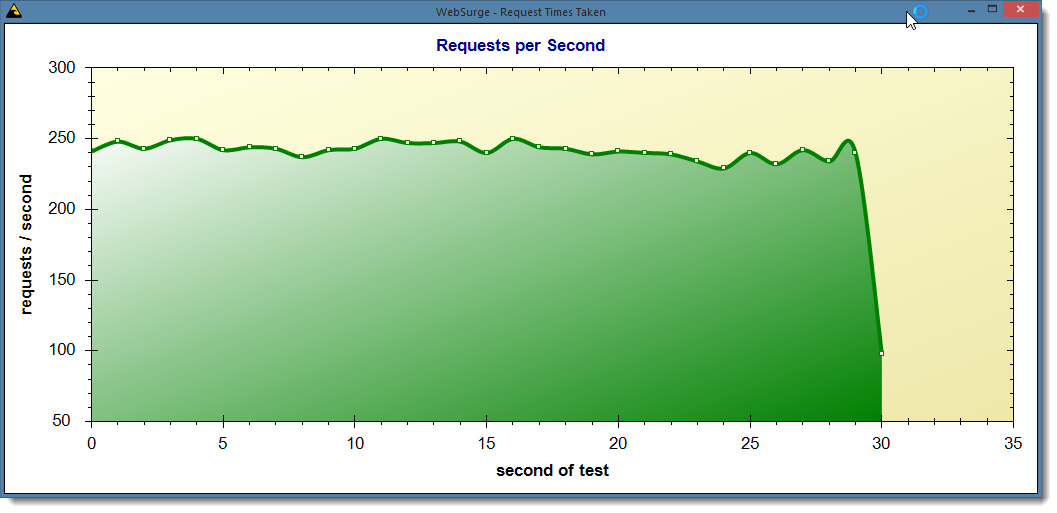

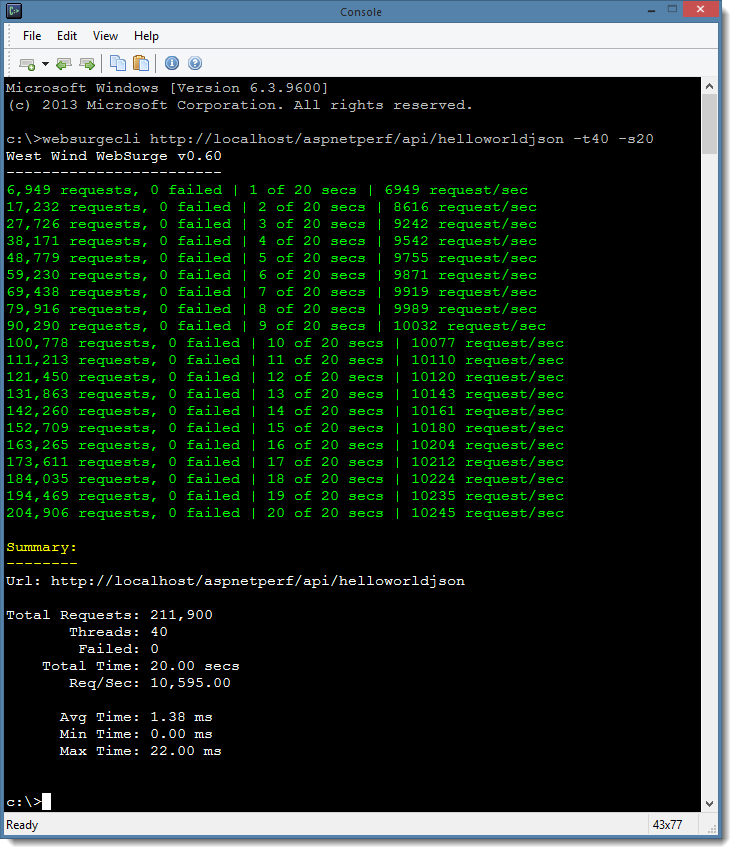

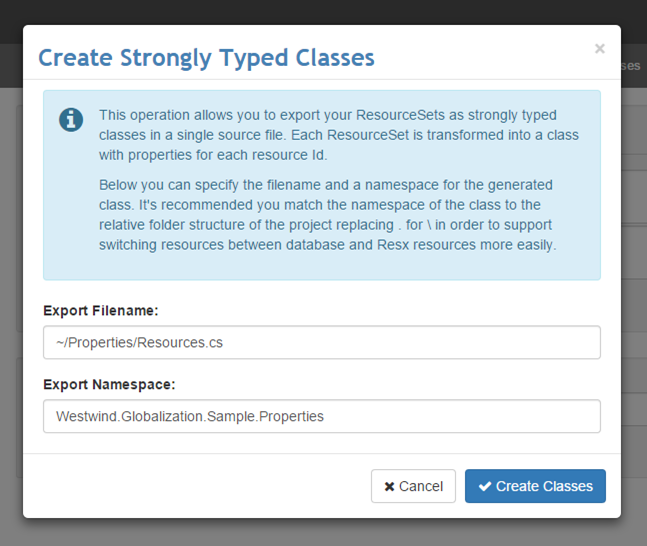

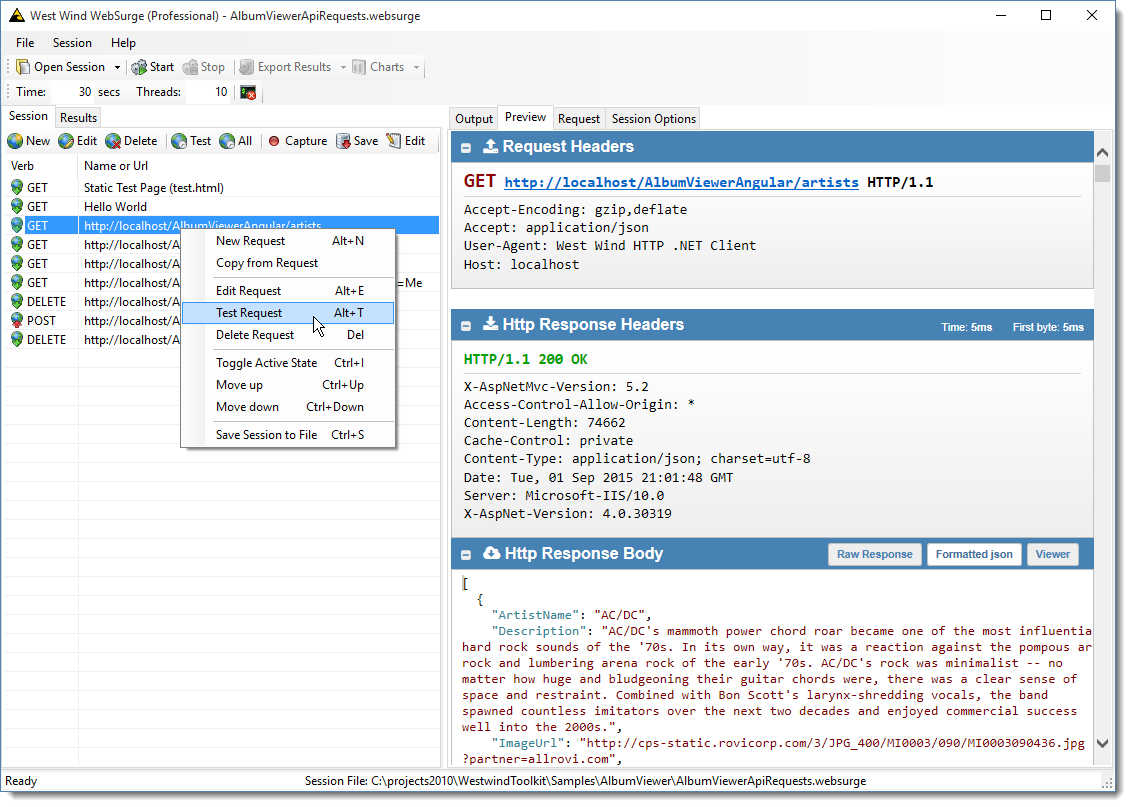

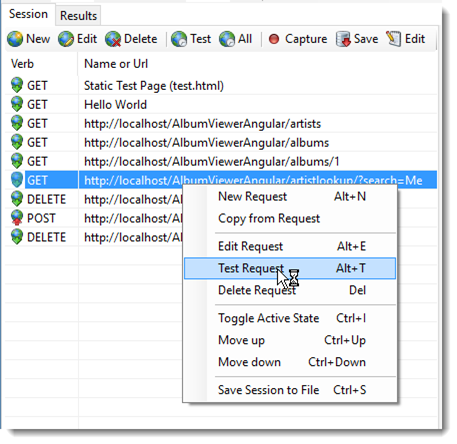

Interactive Web Resource Editor

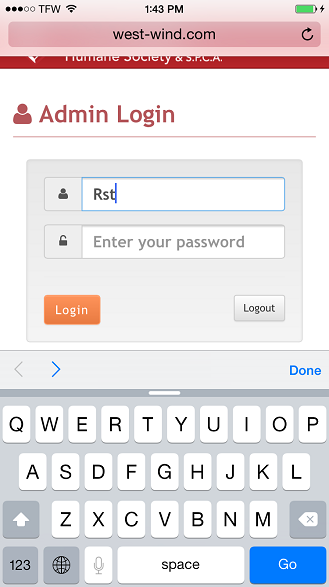

The Web Resource Editor has been completely rewritten in this version as a pure client-side SPA, to provide a much smoother and quicker editing experience. The interface is also a lot more keyboard-friendly, with shortcuts for jumping quickly through your resources. Here’s what the new Resource Editor looks like:

![]()

Note that the localization form is also localized to German using West Wind Globalization – so if you switch your browser locale to German you can see the localization in action. This particular interface uses server side database resources and the JavaScript resource handler to push the localized resources into an AngularJs client side application. Here's what the German version looks like:

![GermanResourceEditor GermanResourceEditor]()

It’s very quick to add new resources or even new resource sets for multiple languages through this single form:

![resourceeditor resourceeditor]()

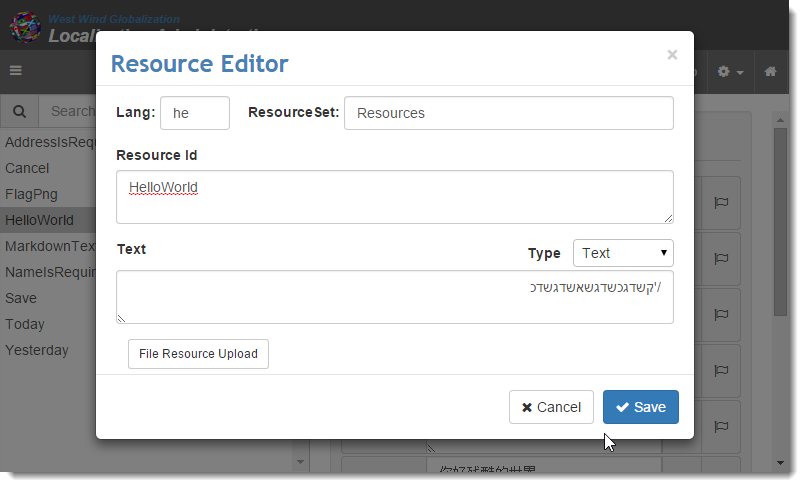

The main editor and resource editor both support RTL languages and the editors autofill new resources with default values for quick editing.

You can also translate resources once you've added them, either by hand or with a translation dialog (opened by clicking the flag in the main resource list) that lets you use Google or Bing translation to help with the text:

![]()

Translation using these translators isn't always accurate but I've found them to be a good starting point for localization.

Import and Export Resx Resources

As mentioned earlier, you can also import and export Resx resources to and from a database, which makes it easy to use resources that you've already created. There are both a user interface form and code APIs that let you do this. The Web Resource Editor has an import and export form that makes it easy to get resources imported:

![ImportExportResx ImportExportResx]()

The Import (and Export) folder defaults to a project-relative path. For an MVC project it assumes resources live in the ~/projects folder; for a WebForms project the path is ~/, and the ~/App_LocalResources and ~/App_GlobalResources folders are scoured to pick up resources. However, you can also specify any path that is accessible to the server and load/save resources to and from there. This means you can import resources for any project from arbitrary locations on a development machine, edit them, then export them back out, which is very powerful if you need to localize resources in, say, a class library.

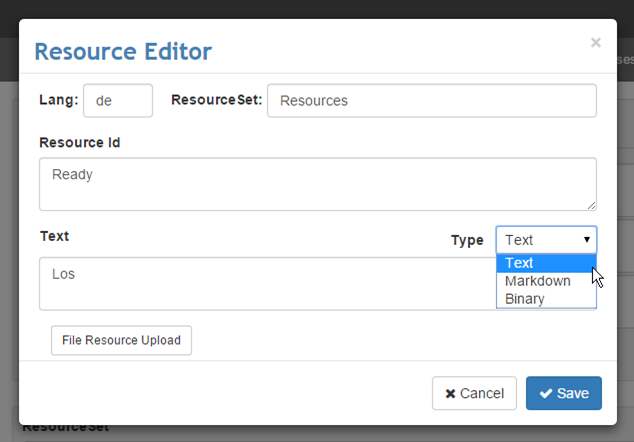

Create Strongly Typed Classes

For ASP.NET MVC, strongly typed classes are a big requirement. MVC uses strongly typed resources for resource binding as well as for localized model validation messages, so it's crucial that you can create strongly typed resources from the database resources. Visual Studio includes functionality to automatically create strongly typed resources from Resx resources, but the mechanism unfortunately is very tightly coupled to Resx: there's no easy way to override the behavior to load resources from a different source.

So West Wind Globalization uses its own strongly typed resource generation mechanism, one that is a bit more flexible in what type of resources you can use with it. You can use Db Resources from raw projects (what you would use with MVC or a class library/non-Web project), from Web Forms (App_GlobalResources/App_LocalResources using the various ASP.NET Resource Provider functions), as well as Resx Resources.

You can generate the strongly typed classes using the following dialog in the Resource Editor:

![StronglyTypedResources StronglyTypedResources]()

The dialog lets you choose a file name the classes are generated into (it's a single file) and a namespace that the resource classes use. Again the file specified here can be generated anywhere on the machine, but by default it goes into the project folder of an ASP.NET Web project.

Here's what the generated classes look like (there can be multiple resource classes in the single source file):

```csharp
using System.Resources;

public class GeneratedResourceSettings
{
    // You can change the ResourceAccess Mode globally in Application_Start
    public static ResourceAccessMode ResourceAccessMode = ResourceAccessMode.DbResourceManager;
}

[System.CodeDom.Compiler.GeneratedCodeAttribute("Westwind.Globalization.StronglyTypedResources", "2.0")]
[System.Diagnostics.DebuggerNonUserCodeAttribute()]
[System.Runtime.CompilerServices.CompilerGeneratedAttribute()]
public class Resources
{
    // ResourceManager used to serve compiled Resx resources
    public static ResourceManager ResourceManager
    {
        get
        {
            if (object.ReferenceEquals(resourceMan, null))
            {
                var temp = new ResourceManager("Westwind.Globalization.Sample.Properties.Resources", typeof(Resources).Assembly);
                resourceMan = temp;
            }
            return resourceMan;
        }
    }
    private static ResourceManager resourceMan = null;

    public static System.String Cancel
    {
        get
        {
            return GeneratedResourceHelper.GetResourceString("Resources", "Cancel", ResourceManager, GeneratedResourceSettings.ResourceAccessMode);
        }
    }

    public static System.String Save
    {
        get
        {
            return GeneratedResourceHelper.GetResourceString("Resources", "Save", ResourceManager, GeneratedResourceSettings.ResourceAccessMode);
        }
    }

    public static System.String HelloWorld
    {
        get
        {
            return GeneratedResourceHelper.GetResourceString("Resources", "HelloWorld", ResourceManager, GeneratedResourceSettings.ResourceAccessMode);
        }
    }

    public static System.Drawing.Bitmap FlagPng
    {
        get
        {
            return (System.Drawing.Bitmap) GeneratedResourceHelper.GetResourceObject("Resources", "FlagPng", ResourceManager, GeneratedResourceSettings.ResourceAccessMode);
        }
    }
}
```

The class at the top holds a static setting that determines where resources are served from: DbResourceManager, AspNetResourceProvider or Resx. You can set this global value at application startup to choose where resources are loaded from.

Each resource class then includes a reference to a ResourceManager, which is required for serving Resx resources. Both the ASP.NET provider and the DbResourceManager use internal managers to retrieve resources. The GeneratedResourceHelper.GetResourceString() method then determines which mode is active and returns the resource from the appropriate resource store: DbManager, AspNetProvider or Resx.

The helper function is actually pretty simple:

```csharp
public static string GetResourceString(string resourceSet, string resourceId,
                                       ResourceManager manager,
                                       ResourceAccessMode resourceMode)
{
    // ASP.NET ResourceProvider (App_GlobalResources/App_LocalResources)
    if (resourceMode == ResourceAccessMode.AspNetResourceProvider)
        return GetAspNetResourceProviderValue(resourceSet, resourceId) as string;

    // Compiled Resx resources via the class's ResourceManager
    if (resourceMode == ResourceAccessMode.Resx)
        return manager.GetString(resourceId);

    // Default: database resources via DbRes (resource id first, then resource set)
    return DbRes.T(resourceId, resourceSet);
}
```

Simple as it is, this helper is what makes it possible to support all three resource stores from a single class.

What's cool about this approach and something that's sorely missing in .NET resource management is that you can very easily switch between the 3 different modes – assuming you have both database and Resx resources available. Given that you can easily import and export to and from Resx it's trivial to switch between Resx and Database resources for strongly typed resources.

ASP.NET MVC Support

Although the library has always worked with ASP.NET MVC, the original version was built before MVC was a thing and so catered to Web Forms, which was reflected in the documentation. As a result, a lot of people assumed the library did not work with MVC. It always did, but it wasn’t obvious. The main feature that makes ASP.NET MVC work with West Wind Globalization is the strongly typed resource functionality: you can use those strongly typed resources the same way as Resx resources.

To embed a strongly typed resource:

@Resources.HelloWorld

To use strongly typed resources in Model Validation:

```csharp
using System.ComponentModel.DataAnnotations;

public class ViewModelWithLocalizedAttributes
{
    [Required(ErrorMessageResourceName = "NameIsRequired", ErrorMessageResourceType = typeof(Resources))]
    public string Name { get; set; }

    [Required(ErrorMessageResourceName = "AddressIsRequired", ErrorMessageResourceType = typeof(Resources))]
    public string Address { get; set; }
}
```

This is no different than what you would do with strongly typed Resx resources in your MVC applications, as long as you generate the strongly typed resources into your project.

In addition, you can use the DbRes static class to access localized resources directly. Note that the strongly typed resources tied to DbResourceManager also use DbRes behind the scenes, so resources come from the same ResourceManager instance and there's no resource duplication. DbRes gives you string-based access to resources:

@DbRes.T("HelloWorld", "Resources")

Note that this always works: unlike strongly typed resources, you don't need to generate anything for the resources to work, so you can update Views without having to recompile anything first. You lose strong typing, but you gain the flexibility of not having to compile. DbRes also has DbRes.THtml(), which returns a raw HtmlString instance in case you are returning raw HTML.

For example, if you want to display some automatically rendered Markdown text, you can use:

@DbRes.THtml("MarkdownText", "Resources")

This gets the raw HTML into the page.

The new documentation on the Wiki has a lot more information and examples specific to ASP.NET MVC. This version has improved support for MVC, with a new Strongly Typed Class Generator that works with either Resx or database resources (i.e., you can switch with a single flag value), as well as a much more powerful resource importer and exporter that lets you use resources not just from Web projects but from any project at all.

I posted a detailed blog post on the ASP.NET MVC specific features a while back:

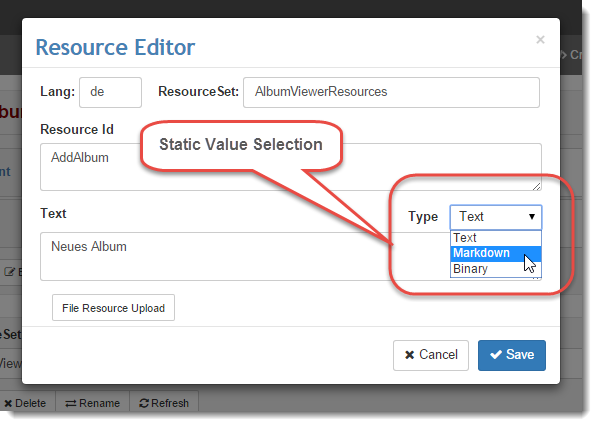

Markdown Support

As mentioned in the previous section one useful new feature is Markdown support for individual resources. You can mark an individual resource to be a Markdown style resource, which causes the resource to be rendered into HTML when it is loaded into a ResourceSet or when you retrieve a resource value from the API.

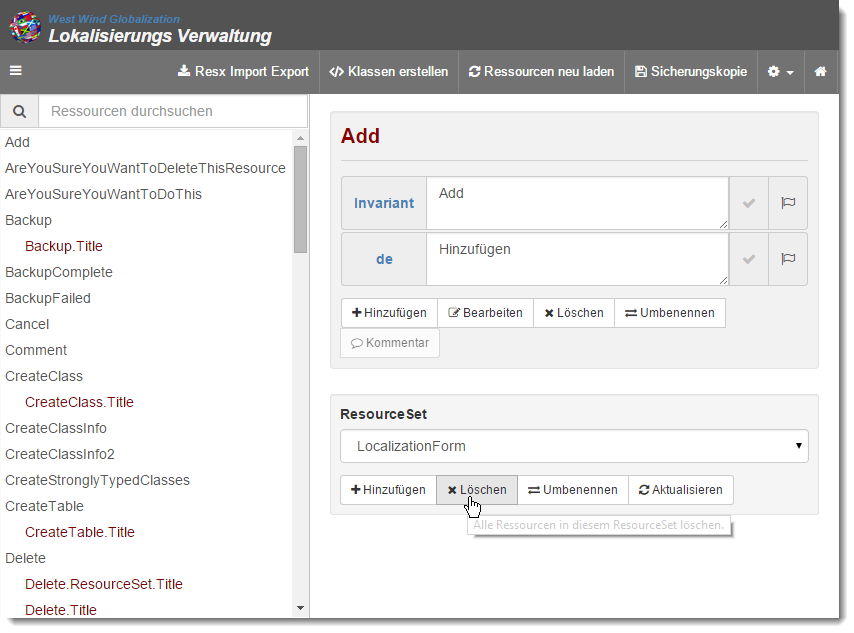

Resources have a ValueType field in the database that identifies the type of resource; if it's Markdown, the value is automatically translated to HTML. For typical localization, the value is rendered to HTML when the resource set is retrieved. You can mark a resource as Markdown in the resource editor:

![]()

Note that this is a database specific feature. Once you export resources with the markdown flag to Resx, the markdown flag is lost and the data is exported as the rendered HTML string into Resx instead. If you plan on going back and forth between Resx and Db resources just be aware of this fact.
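Rendering at load time means the Markdown conversion runs once per resource set rather than on every lookup. Here is a minimal JavaScript sketch of that idea; the row shape, the `"markdown"` value type string and `buildResourceSet` are hypothetical, and the injected renderer is a toy stand-in for a real Markdown parser.

```javascript
// Hypothetical sketch: build an in-memory resource set from database rows,
// converting Markdown-flagged values to HTML once, at load time.
// The row shape and the "markdown" valueType string are assumptions.
function buildResourceSet(rows, renderMarkdown) {
  const set = {};
  for (const row of rows) {
    set[row.resourceId] =
      row.valueType === "markdown" ? renderMarkdown(row.value) : row.value;
  }
  return set;
}

// Toy renderer for the example only: handles **bold** and wraps in <p>.
const toyMarkdown = (text) =>
  `<p>${text.replace(/\*\*(.+?)\*\*/g, "<strong>$1</strong>")}</p>`;

const set = buildResourceSet(
  [
    { resourceId: "Save", value: "Save", valueType: "text" },
    { resourceId: "MarkdownText", value: "This is **bold**", valueType: "markdown" },
  ],
  toyMarkdown
);
console.log(set.MarkdownText); // <p>This is <strong>bold</strong></p>
```

Because the loaded set only holds the rendered HTML, anything that exports from it sees HTML rather than the Markdown source, which matches the Resx export behavior noted above.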

For more info on the Markdown features you can check the Wiki documentation:

MarkDown For Resource Values

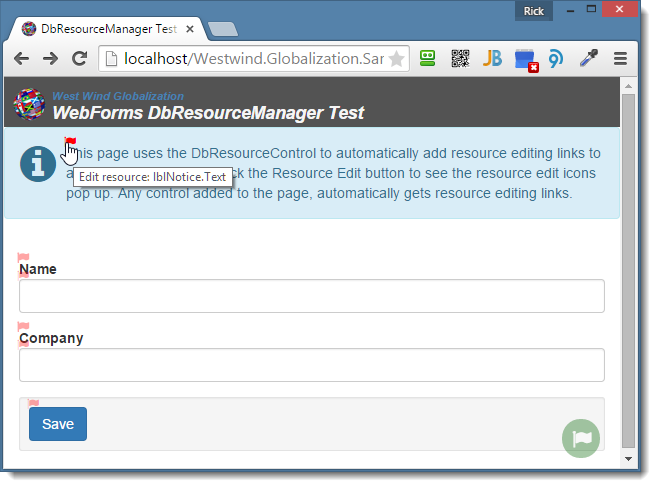

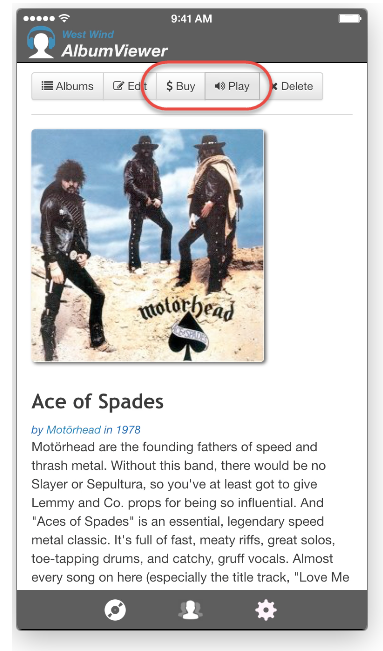

Interactive Resource Linking and Editing

This version also improves the interactive resource editing functionality, which lets you create links to resources that are embedded directly into your pages and can be activated when an authorized user wants to edit resources. Basically, you add a few markup attributes to any element on the page to link that element to a resource by its resource id. This makes it possible to quickly jump to resources for editing. This is an especially powerful feature when combined with the Markdown features described above, as you can in effect build a mini CMS on top of this mechanism.

West Wind Globalization provides two features: a couple of HTML data attributes that can be applied to any DOM element to mark it as editable, and a helper JavaScript component that makes the resource links active. Here's what this looks like:

![]()

On this page, each element that has a flag associated with it is marked up with one or two of these attributes.

For example:

```html
<body data-resource-set="Resources">
    <span data-resource-id="HelloWorld"><%= DbRes.T("HelloWorld","Resources") %></span>
</body>
```

The key is the data-resource-id attribute, which points at a resource id. data-resource-set can be applied either on the same element or on any element up the hierarchy. Here I'm putting it on the body tag, which means any element in the page can search up and find the resource set. These attributes are used by a small jQuery component to find the resourceId and resourceSet and then open the Resource Editor with the requested resource activated. If the resource doesn't exist, a New Resource dialog pops up that lets you create the resource with its name and content preset.
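The attribute lookup is simple enough to sketch. This is not the actual ww.resourceEditor.js code, just a minimal JavaScript illustration (with a hypothetical `findResourceLink` function) of reading the id from the element and finding the set on the nearest ancestor that declares it:

```javascript
// Hypothetical sketch of the lookup a linking component performs: read
// data-resource-id from the element, then walk up the ancestors until a
// data-resource-set attribute is found. Works with DOM elements (or any
// object exposing getAttribute() and parentElement).
function findResourceLink(element) {
  const resourceId = element.getAttribute("data-resource-id");
  if (!resourceId) return null; // element is not linked to a resource

  for (let node = element; node; node = node.parentElement) {
    const resourceSet = node.getAttribute("data-resource-set");
    if (resourceSet) return { resourceId, resourceSet };
  }
  return { resourceId, resourceSet: null }; // no set declared up the hierarchy
}

// In a browser, for the markup above:
// findResourceLink(document.querySelector("[data-resource-id='HelloWorld']"))
//   -> { resourceId: "HelloWorld", resourceSet: "Resources" }
```

In browser-only code, `element.closest("[data-resource-set]")` performs the same ancestor search in one call; the explicit loop just makes the search order visible.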

Adding the Resource Linking button that enables the flag links on a page is as easy as adding a small script block (and a little CSS) to your page or script:

```html
<script src="scripts/ww.resourceEditor.js"></script>
<script>
    ww.resourceEditor.showEditButton({
        adminUrl: "./",
        editorWindowOpenOptions: "height=600, width=900, left=30, top=30"
    });
</script>
```

For more info, check out the in-depth blog post that describes how this functionality works and how it's implemented.

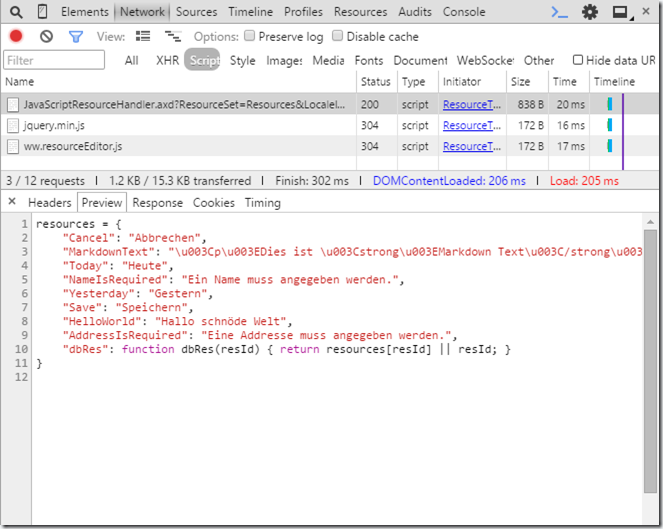

Serving Server Side Resources to JavaScript

More and more Web applications use fully client-centric JavaScript to drive application logic, but you can still use your server-side resources in these applications with the JavaScriptResourceHandler included in West Wind Globalization. The JavaScriptResourceHandler works with both database and Resx resources and can be used either in ASP.NET applications or in static HTML pages.

It works by using a dynamic resource handler link that specifies which resources to request: which ResourceSet to load, which locale to use, what type of resource (Db or Resx) to return, and the name of the variable to generate the resources into. The handler responds by creating a JavaScript object map with the localized resources attached as properties, one per resource.
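To make the "object map" idea concrete, here is a hedged JavaScript sketch of the kind of script such a handler could emit for a variable name and a resource dictionary. The function name `renderResourceScript` is hypothetical, and the real JavaScriptResourceHandler's exact output format may differ.

```javascript
// Hypothetical sketch: emit a script that assigns an object map of
// resourceId -> localized string to a variable. JSON.stringify handles
// quoting and escaping; U+2028/U+2029 are escaped for older JS engines.
function renderResourceScript(varName, resources) {
  const body = JSON.stringify(resources, null, 2)
    .replace(/\u2028/g, "\\u2028")
    .replace(/\u2029/g, "\\u2029");
  // Dotted names (e.g. globals.resources) assume the parent object already exists.
  return varName.includes(".")
    ? `${varName} = ${body};`
    : `var ${varName} = ${body};`;
}

console.log(renderResourceScript("resources", { HelloWorld: "Hallo Welt", Save: "Speichern" }));
```

Serializing through JSON rather than concatenating strings keeps quotes and line breaks inside translated text from breaking the generated script.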

Here's what the exported resources look like when localized for German:

![JavaScriptresourceHandler JavaScriptresourceHandler]()

To get resources into the page you can either use a .NET code tag like this:

```html
<script src="@JavaScriptResourceHandler.GetJavaScriptResourcesUrl("resources","Resources")"></script>
```

This produces the output shown above when the page is accessed in the German locale. The first parameter is the name of the variable to create, which can be anything you choose, including a namespaced name (e.g., globals.resources). The second parameter is the resource set to load. Additional optional parameters let you explicitly select a language id (e.g., de-DE) as well as the resource provider type. The default is auto-detect, which checks whether the Resource Provider is active: if it is, resources are returned from the database; otherwise Resx resources are used (or attempted).

You can also use a raw HTML link instead of the tag above, which is a bit more verbose but has the same result:

```html
<script src="/Westwind.Globalization.Sample/JavaScriptResourceHandler.axd?ResourceSet=Resources&LocaleId=de-DE&VarName=resources&ResourceType=auto"></script>
```
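If you build that link in client-side code instead, `URLSearchParams` takes care of the encoding. A small JavaScript sketch: the function name `buildResourceHandlerUrl` is hypothetical, while the query parameter names come straight from the raw link above.

```javascript
// Hypothetical helper that assembles the handler URL from its query parameters.
// Parameter names match the raw link; all values except resourceType are required.
function buildResourceHandlerUrl(handlerPath, { resourceSet, localeId, varName, resourceType = "auto" }) {
  const query = new URLSearchParams({
    ResourceSet: resourceSet,
    LocaleId: localeId,
    VarName: varName,
    ResourceType: resourceType,
  });
  return `${handlerPath}?${query.toString()}`;
}

console.log(
  buildResourceHandlerUrl("/Westwind.Globalization.Sample/JavaScriptResourceHandler.axd", {
    resourceSet: "Resources",
    localeId: "de-DE",
    varName: "resources",
  })
);
// /Westwind.Globalization.Sample/JavaScriptResourceHandler.axd?ResourceSet=Resources&LocaleId=de-DE&VarName=resources&ResourceType=auto
```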

Note that the JavaScriptResourceHandler also works with Resx resources, so you can use it without using anything else in Westwind.Globalization. In other words, you don't have to use any of the database localization features if all you want is the JavaScriptResourceHandler functionality.

If you want to see a working example that uses server-side resources in JavaScript, the Web Resource Editor uses this very approach with AngularJs and binds resources into the page using one-time binding expressions in AngularJs:

```html
<i class="fa fa-download"></i> {{::view.resources.ImportExportResx}}
```

Here view.resources holds the server-imported resources attached to the local Angular view model.

If you want to see how to integrate server resources into a JavaScript application, the resource editor serves as a good example of how it's done. The source code is available in every project you add the Web package to, or in the base GitHub repository.

For more info on how the resource handler works check out this Wiki topic.

JavaScript Resource Handler Serve JavaScript Resources from the Server

Summary

As you can see, there's a lot of new stuff in Westwind.Globalization version 2.0, and I'm excited to finally release the full version out of beta. If you're doing localization and have considered using database resources for a richer and more flexible resource editing experience, give this library a spin. If you run into any issues, please post them on GitHub, or if you fix something, feel free to submit a pull request. If you use the project or like it, please star it on GitHub to show your support. Enjoy.

Resources

© Rick Strahl, West Wind Technologies, 2005-2015

![]()

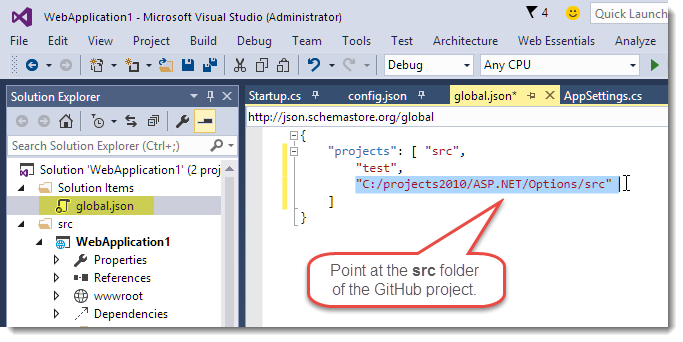

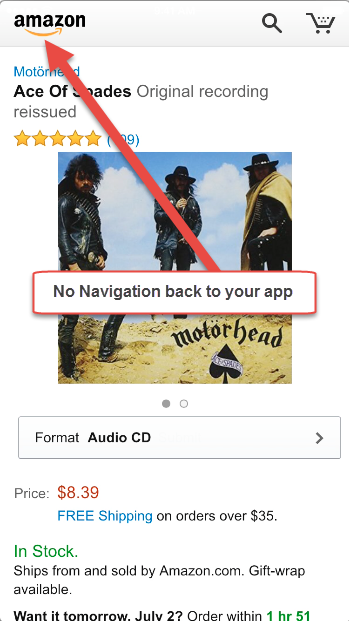



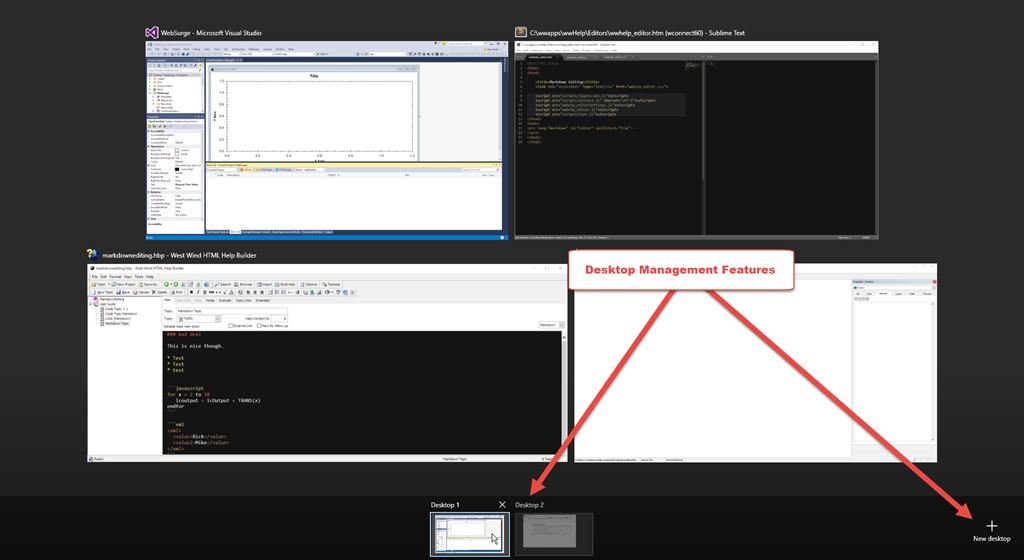

![Desktops[4] Desktops[4]](http://weblog.west-wind.com/images/2015Windows-Live-Writer/Multiple-Desktops-in-Windows_FA07/Desktops%5B4%5D_thumb.png)

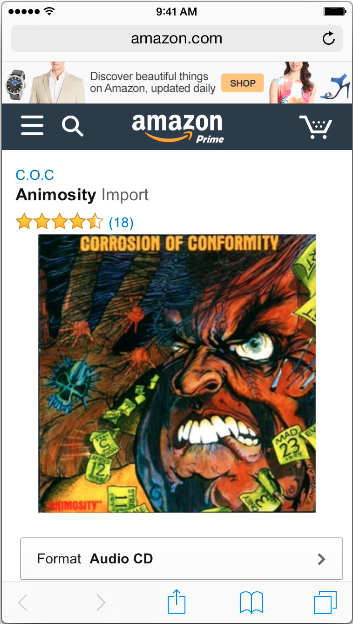






![headeroverrides[6] headeroverrides[6]](http://weblog.west-wind.com/images/2015Windows-Live-Writer/a0a71be73c61_8667/headeroverrides%5B6%5D_thumb.png)

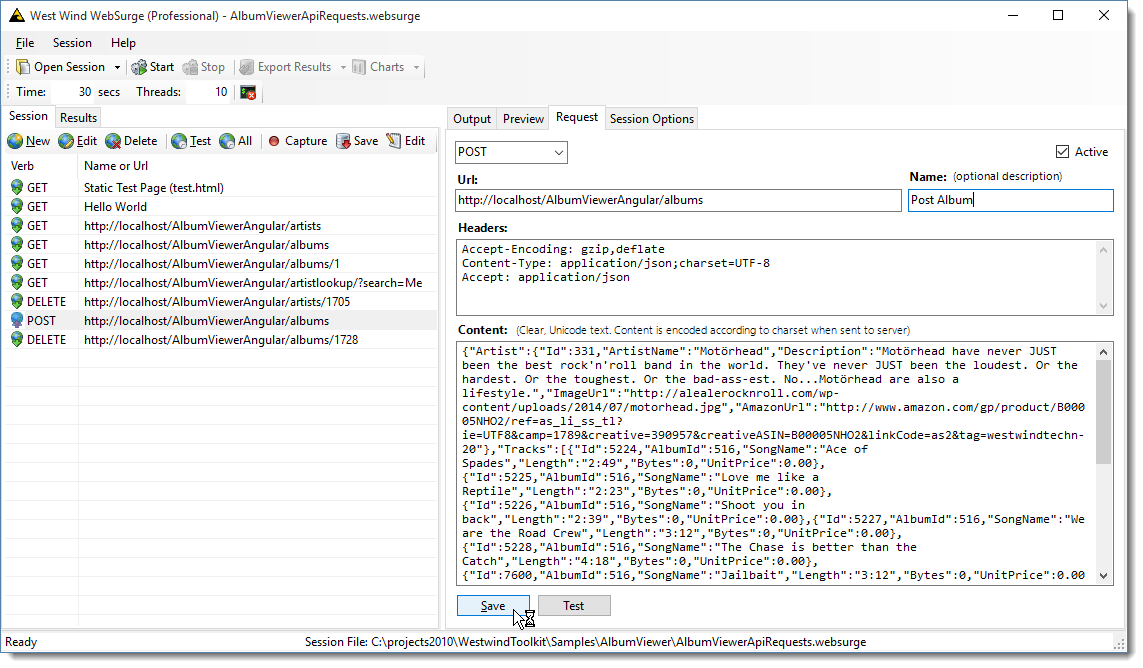

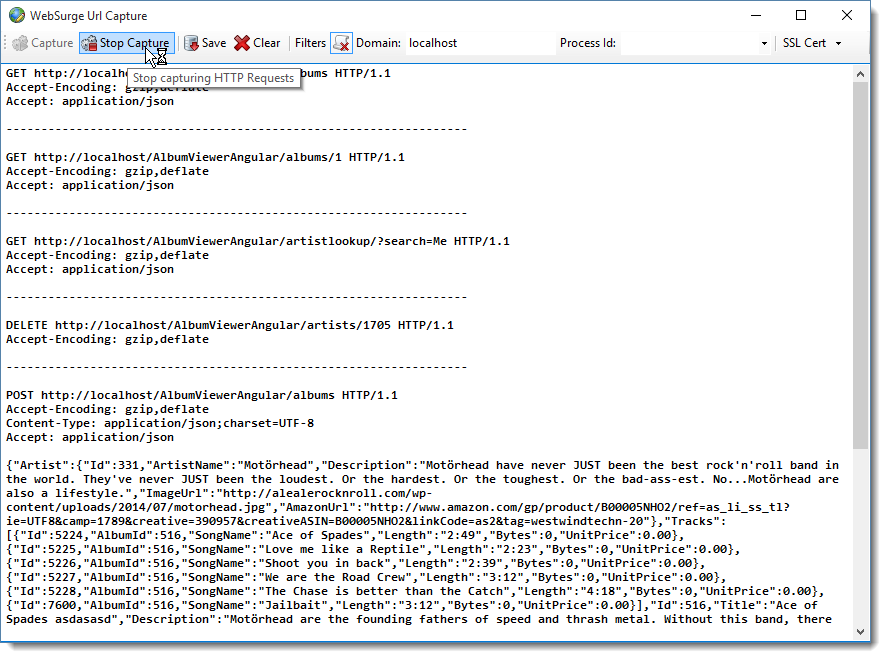

![LoadTest[5]_thumb[2] LoadTest[5]_thumb[2]](http://weblog.west-wind.com/images/2015Windows-Live-Writer/a0a71be73c61_8667/LoadTest%5B5%5D_thumb%5B2%5D_thumb.png)

![ResultDisplay_thumb[2] ResultDisplay_thumb[2]](http://weblog.west-wind.com/images/2015Windows-Live-Writer/a0a71be73c61_8667/ResultDisplay_thumb%5B2%5D_thumb.png)

![RequestView_thumb[2] RequestView_thumb[2]](http://weblog.west-wind.com/images/2015Windows-Live-Writer/a0a71be73c61_8667/RequestView_thumb%5B2%5D_thumb.png)

![ExportResults_thumb[3] ExportResults_thumb[3]](http://weblog.west-wind.com/images/2015Windows-Live-Writer/a0a71be73c61_8667/ExportResults_thumb%5B3%5D_thumb.png)