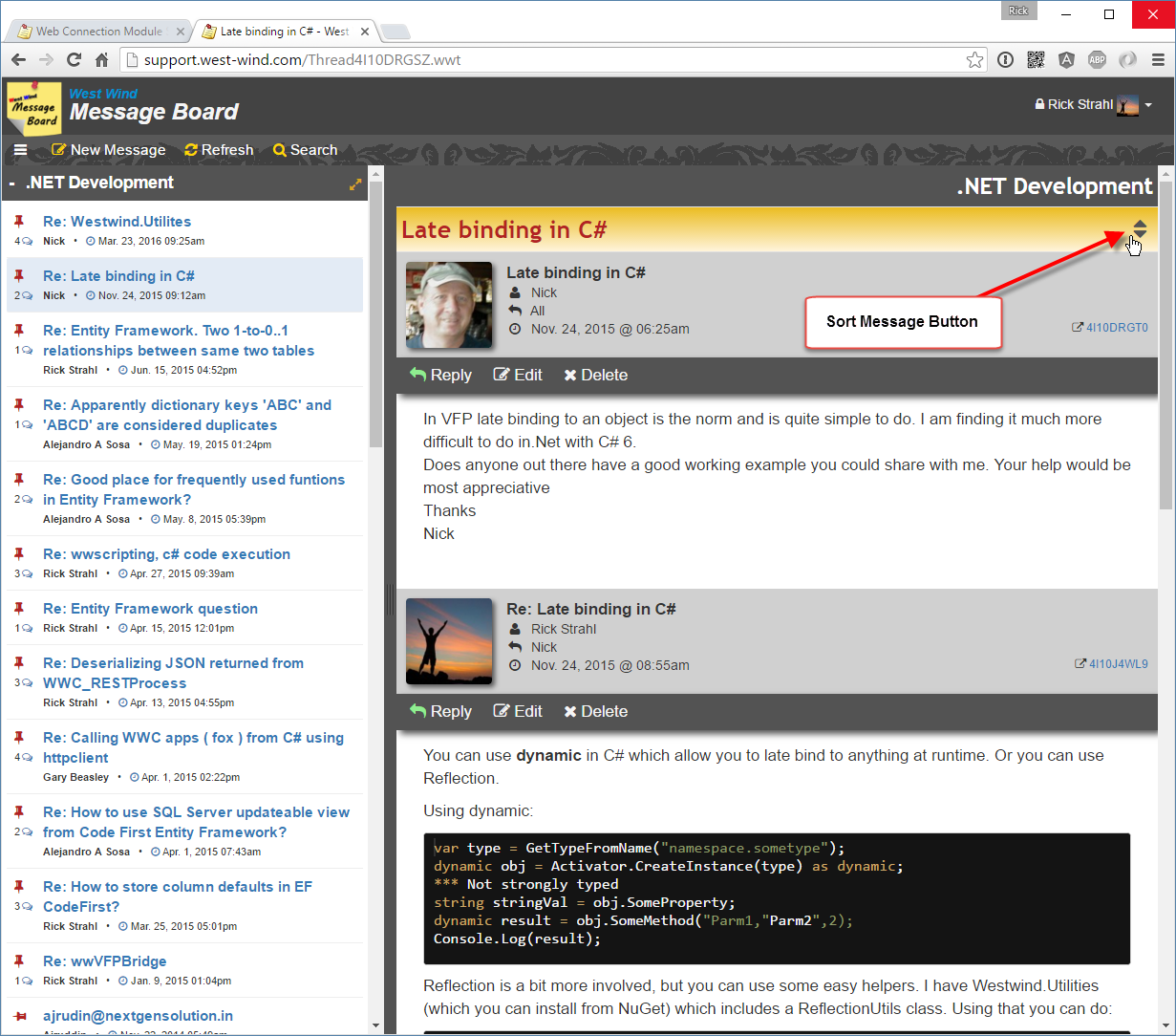

A few days ago I was working on one of my old applications and needed to add support for a resizable panel based layout in HTML. Specifically this is for HTML Help Builder, an application that generates HTML based documentation from class libraries and databases and also lets you create help topics manually for full documentation purposes. The generated documentation typically has a two panel layout, and I needed to bring over the resizing functionality from the old school frames interface that had been in use before.

Surprisingly there aren't a lot of resizing libraries out there, and the ones that are available tend to be rather large because they are either part of larger libraries or try to manage the UI for a specific scenario such as panel layout components. I couldn't find anything that was lean and could just rely on basic CSS layout to handle the UI part of resizing. So, as is often the case, I ended up creating my own small jquery-resizable plug-in, as this isn't the first time I've looked into this.

The jquery-resizable Plug-in

jquery-resizable is a small jquery plug-in that handles nothing but the actual resizing of a DOM element. It has no direct UI characteristics other than physically resizing the element. It supports mouse and touch events for resizing and otherwise relies on CSS and HTML to handle the visual aspects of the resizing operations. Despite being minimalistic, I find it really easy to hook up resize operations for things like resizable windows/panels or for things like split panels which is the use case I set out to solve.

If you're impatient and just want to get to it, you can jump straight to the code on GitHub or check out some of the basic examples:

Creating a jQuery-resizable Plug-in

jQuery-resizable is a small jQuery plug-in that, as the name implies, resizes DOM elements when you drag them in or out. The component handles only the actual resizing operation and doesn't deal with any UI functionality such as managing containers or sizing grips; this is all left up to HTML and CSS, which as it turns out is pretty easy and very flexible. The plug-in itself simply manages the drag events for both mouse and touch input and resizes the specified element(s). The end result is a pretty small component that's easily reusable.

You can use this component to make any DOM element resizable by using a jQuery selector to specify the resizable element as well as specifying a drag handle element. A drag handle is the element that has to be selected initially to start dragging, which in a splitter panel would be the splitter bar, or in a resizable dialog would be the sizing handle on the lower right of a window.

The syntax for the component is very simple:

    $(".box").resizable({
        handleSelector: ".size-grip",
        resizeHeight: false,
        resizeWidth: true
    });

Note that you can and should specify a handle selector, which identifies a separate DOM element that is used to start the resize operation. Typically this is a sizing grip or splitter bar. If you don't provide a handleSelector the base element resizes on any drag operation, which generally is not desirable, but may work in some situations.

The options object also has a few event hooks – onDragStart, onDrag, onDragEnd - that let you intercept the actual drag events that occur such as when the element is resized. For full information on the parameters available you can check the documentation or the GitHub page.

A Basic Example: Resizing a Box

Here's a simple example on CodePen that demonstrates how to make a simple box or window resizable:

![ResizeBox[8] ResizeBox[8]]()

In order to resize the window you grab the size-grip and resize the window as you would expect.

The code to enable this functionality involves adding the jQuery and jquery-resizable scripts to the page and attaching the resizable plug-in to the DOM element to resize:

    <script src="//ajax.googleapis.com/ajax/libs/jquery/1.11.3/jquery.min.js" type="text/javascript"></script>
    <script src="scripts/jquery-resizable.js"></script>
    <script>
        $(".box").resizable({ handleSelector: ".win-size-grip" });
    </script>

The key usage requirement is to select the DOM element(s) to resize using a jQuery selector. You can provide a number of options, the most important one being handleSelector, which specifies the element that acts as the resizing initiator: when it is clicked the resizing operation starts, and as you move the mouse the base element is resized to that width/height.

As mentioned, jquery-resizable doesn't do any visual formatting or fix-up, but rather just handles the actual sizing operations. All the visual behavior is managed via plain HTML and CSS which allows for maximum flexibility and simplicity.

The HTML page above is based on this HTML markup:

    <div class="box">
        <div class="boxheader">Header</div>
        <div class="boxbody">Resize me</div>
        <div class="win-size-grip"></div>
    </div>

All of the UI based aspects – displaying the sizing handles (if any) and managing the min and max sizes etc. can be easily handled via CSS:

    .box {
        margin: 80px;
        position: relative;
        width: 500px;
        height: 400px;
        min-height: 100px;
        min-width: 200px;
        max-width: 999px;
        max-height: 800px;
    }
    .boxheader {
        background: #535353;
        color: white;
        padding: 5px;
    }
    .boxbody {
        font-size: 24pt;
        padding: 20px;
    }
    .win-size-grip {
        position: absolute;
        width: 16px;
        height: 16px;
        bottom: 0;
        right: 0;
        cursor: nwse-resize;
        background: url(images/wingrip.png) no-repeat;
    }

So to make the UI work, in this case the sizing grip in the bottom right corner, pure CSS is used. The box is set to position: relative and the grip is positioned with position: absolute, which attaches it to the lower right corner of the box where it is rendered using a background image. You can also constrain the sizing to appropriate limits using min-width, max-width, min-height and max-height in CSS.

You can check out and play around with this simple example in CodePen or in the sample grabbed from GitHub.


A two panel Splitter with jquery-resizable

I mentioned that I was looking for a light-weight way to implement a two panel display that allows for resizing. There are a number of components available that provide this sort of container management. These are overkill for what I needed and it turns out that it's really easy to create a resizable two panel layout using jquery-resizable.

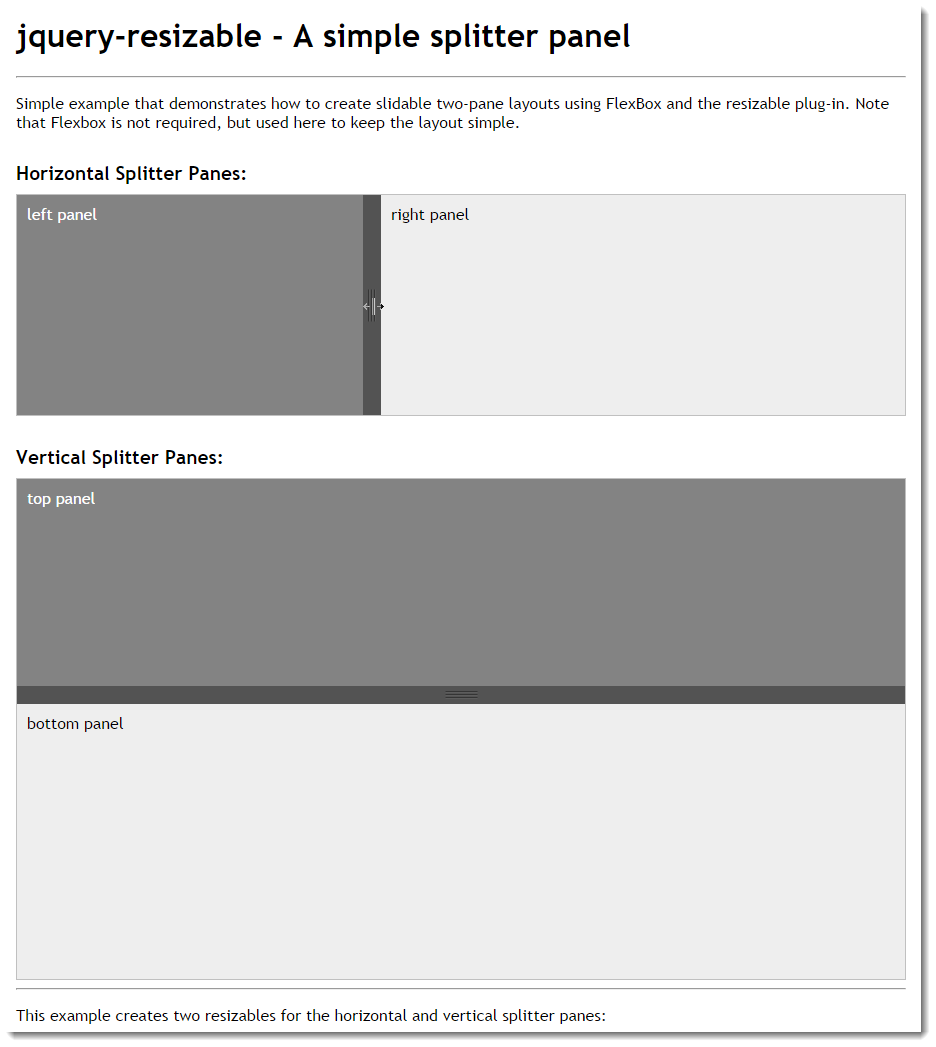

You can take a look at the Resizable Splitter Panels sample on CodePen to see how this works in a simple example.

![SplitterPanelExample SplitterPanelExample]()

Let's take a look and see how this works. Let's start with the top panel that horizontally splits the two panels. This example uses FlexBox to create two panes that span the whole width of the screen, with the left side being a fixed width element, while the right side is a variable width auto-stretching container.

Here's the HTML:

    <div class="panel-container">
        <div class="panel-left">left panel</div>
        <div class="splitter"></div>
        <div class="panel-right">right panel</div>
    </div>

Pretty simple: the two panels and the splitter are contained in a top level container that in this case acts as the FlexBox container. Here's the CSS:

    /* horizontal panel */
    .panel-container {
        display: flex;
        flex-direction: row;
        border: 1px solid silver;
        overflow: hidden;
    }
    .panel-left {
        flex: 0 0 auto;  /* only manually resize */
        padding: 10px;
        width: 300px;
        min-height: 200px;
        min-width: 150px;
        white-space: nowrap;
        background: #838383;
        color: white;
    }
    .splitter {
        flex: 0 0 auto;
        width: 18px;
        background: url(images/vsizegrip.png) center center no-repeat #535353;
        min-height: 200px;
        cursor: col-resize;
    }
    .panel-right {
        flex: 1 1 auto;  /* resizable */
        padding: 10px;
        width: 100%;
        min-height: 200px;
        min-width: 200px;
        background: #eee;
    }

FlexBox makes this sort of horizontal layout really simple by providing relatively clean syntax to specify how the full width of the container should be filled. The top level container is marked as display: flex and flex-direction: row, which sets up the horizontal flow. The panels then specify whether they are fixed in width with flex: 0 0 auto or stretching/shrinking with flex: 1 1 auto. What this means is that the right panel is auto-flowing while the left panel and the splitter are fixed in size; they can only be changed by explicitly changing the width of the element.

And this is where jquery-resizable comes in: We specify that we want the left panel to be resizable and use the splitter in the middle as the sizing handle. To do this with jquery-resizable we can use this simple code:

    $(".panel-left").resizable({
        handleSelector: ".splitter",
        resizeHeight: false
    });

And that's really all there is to it. You now have a resizable two panel layout. As the left panel is resized and its width is updated by the plug-in, the panel on the right automatically stretches to fill the remaining space, which gives the appearance of the splitter resizing both panels.

The vertical splitter works exactly the same except that the flex-direction is column. Here's the layout for the vertical panels:

    <div class="panel-container-vertical">
        <div class="panel-top">top panel</div>
        <div class="splitter-horizontal"></div>
        <div class="panel-bottom">bottom panel</div>
    </div>

The HTML is identical to the horizontal except for the names. That's part of the beauty of flexbox layout which makes it easy to change the flow direction of content.

    /* vertical panel */
    .panel-container-vertical {
        display: flex;
        flex-direction: column;
        height: 500px;
        border: 1px solid silver;
        overflow: hidden;
    }
    .panel-top {
        flex: 0 0 auto;  /* only manually resize */
        padding: 10px;
        height: 150px;
        width: 100%;
        background: #838383;
        color: white;
    }
    .splitter-horizontal {
        flex: 0 0 auto;
        height: 18px;
        background: url(images/hsizegrip.png) center center no-repeat #535353;
        cursor: row-resize;
    }
    .panel-bottom {
        flex: 1 1 auto;  /* resizable */
        padding: 10px;
        min-height: 200px;
        background: #eee;
    }

And finally the JavaScript:

    $(".panel-top").resizable({
        handleSelector: ".splitter-horizontal",
        resizeWidth: false
    });

It's pretty nice to see how little code is required to make this sort of layout. You can of course mix displays like this together to do both vertical and horizontal resizing which gets a little more complicated, but the logic remains the same: you just have to configure your containers properly.

The thing I like about this approach is that the JavaScript code is minimal and most of the logic actually resides in the HTML/CSS layout.

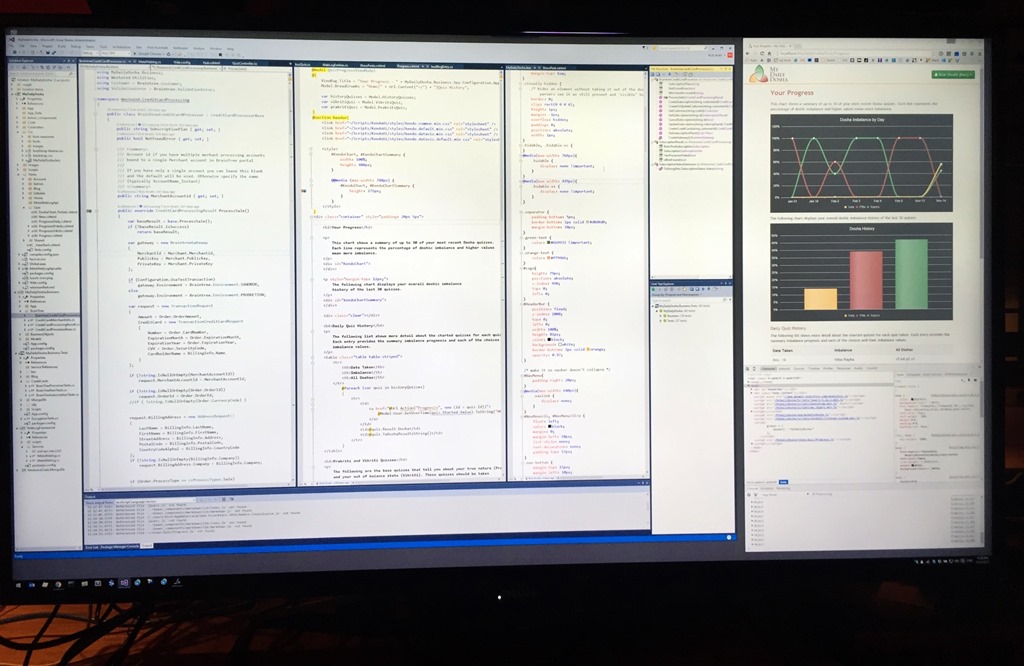

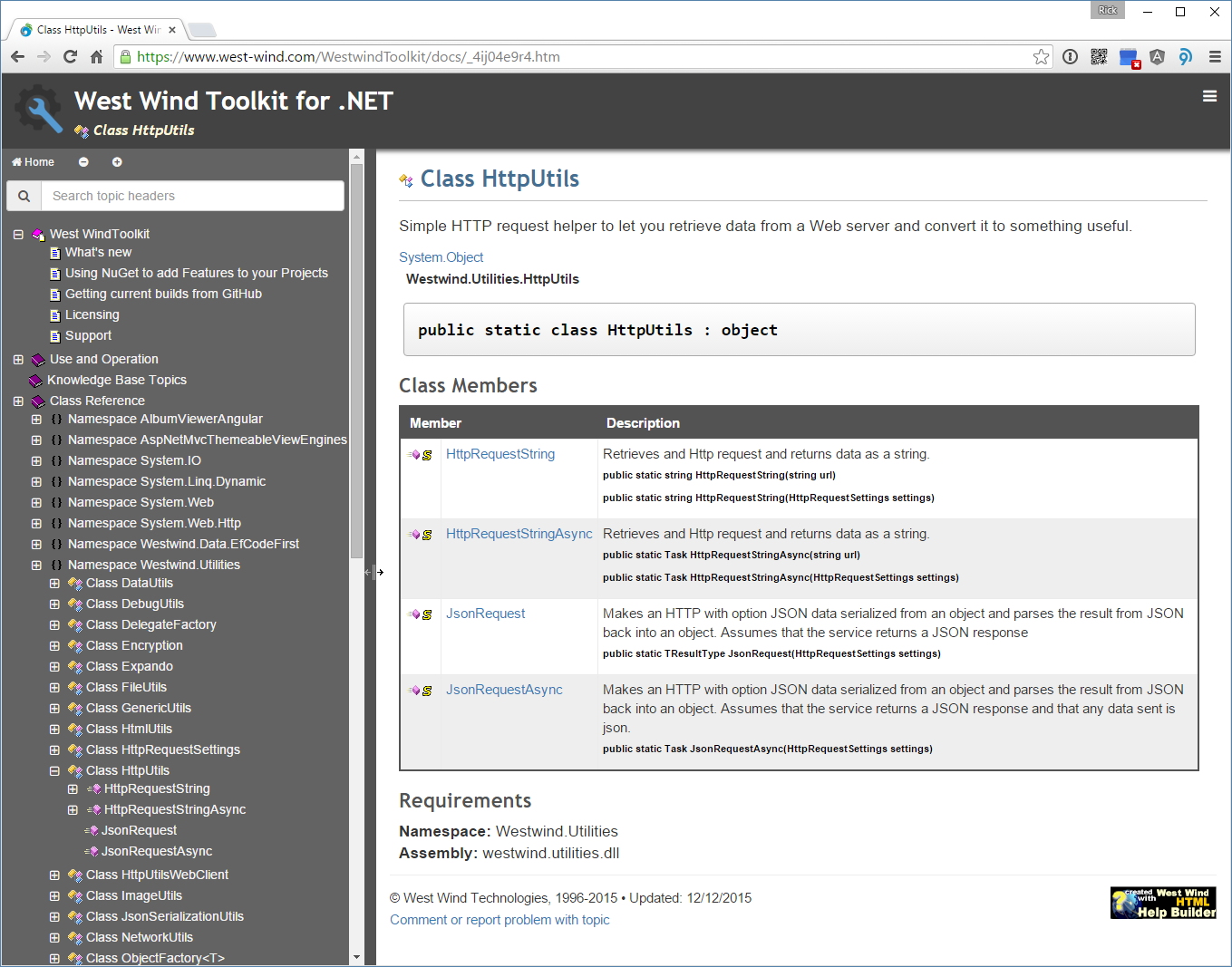

This is pretty close to the implementation I ended up using for the final help layout in Html Help Builder, which looks like this:

![HelpBuilderSplitPanel HelpBuilderSplitPanel]()

Sweet!

Implementation

The code for jquery-resizable is pretty straightforward. It essentially waits for mousedown or touchstart events on the sizing handle, which indicate the start of the resizing operation. When the resize starts, additional handlers are hooked up for the mousemove and touchmove events and for the mouseup and touchend events. When the move events fire, the code captures the current mouse position and resizes the selected element's width or height to that location. Note that the sizing handle itself is not explicitly moved. It should move on its own as part of the layout, so that when the container resizes the handle moves with it and automatically adjusts to the new location.
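The sizing arithmetic described above can be isolated into a small pure function. This is only a sketch under stated assumptions: `computeSize` is a hypothetical helper name that is not part of the plug-in, and positions are assumed to be plain `{x, y}` objects captured at drag start and on each move event.

```javascript
// Hypothetical helper (not part of jquery-resizable): computes the new size
// from the state captured at drag start and the current pointer position.
function computeSize(start, pos, opt) {
    var size = { width: start.width, height: start.height };

    // new size = size at drag start + distance the pointer has travelled
    if (opt.resizeWidth)
        size.width = start.width + (pos.x - start.x);
    if (opt.resizeHeight)
        size.height = start.height + (pos.y - start.y);

    return size;
}

// Drag started at (100, 50) on a 300x200 element; the pointer is now at (140, 80).
var start = { x: 100, y: 50, width: 300, height: 200 };
var result = computeSize(start, { x: 140, y: 80 }, { resizeWidth: true, resizeHeight: false });
console.log(result); // { width: 340, height: 200 }
```

Because the size is always derived from the values captured at drag start rather than accumulated per event, a dropped move event doesn't cause the element to drift.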

For reference here's the relatively short code for the plug-in (or you can also check out the latest code on GitHub):

    /// <reference path="jquery.js" />
    /*
    jquery-resizable
    Version 0.13 - 12/22/2015
    © 2015 Rick Strahl, West Wind Technologies
    www.west-wind.com
    Licensed under MIT License
    */
    (function($, undefined) {
        if ($.fn.resizable)
            return;

        $.fn.resizable = function fnResizable(options) {
            var opt = {
                // selector for handle that starts dragging
                handleSelector: null,
                // resize the width
                resizeWidth: true,
                // resize the height
                resizeHeight: true,
                // hook into start drag operation (event passed)
                onDragStart: null,
                // hook into stop drag operation (event passed)
                onDragEnd: null,
                // hook into each drag operation (event passed)
                onDrag: null,
                // disable touch-action on $handle
                // prevents browser level actions like forward back gestures
                touchActionNone: true
            };
            if (typeof options == "object") opt = $.extend(opt, options);

            return this.each(function () {
                var startPos, startTransition;

                var $el = $(this);
                var $handle = opt.handleSelector ? $(opt.handleSelector) : $el;

                if (opt.touchActionNone)
                    $handle.css("touch-action", "none");

                $el.addClass("resizable");
                $handle.bind('mousedown.rsz touchstart.rsz', startDragging);

                function noop(e) {
                    e.stopPropagation();
                    e.preventDefault();
                };

                function startDragging(e) {
                    startPos = getMousePos(e);
                    startPos.width = parseInt($el.width(), 10);
                    startPos.height = parseInt($el.height(), 10);

                    startTransition = $el.css("transition");
                    $el.css("transition", "none");

                    if (opt.onDragStart) {
                        if (opt.onDragStart(e, $el, opt) === false)
                            return;
                    }
                    opt.dragFunc = doDrag;

                    $(document).bind('mousemove.rsz', opt.dragFunc);
                    $(document).bind('mouseup.rsz', stopDragging);
                    if (window.Touch || navigator.maxTouchPoints) {
                        $(document).bind('touchmove.rsz', opt.dragFunc);
                        $(document).bind('touchend.rsz', stopDragging);
                    }
                    $(document).bind('selectstart.rsz', noop); // disable selection
                }

                function doDrag(e) {
                    var pos = getMousePos(e);

                    if (opt.resizeWidth) {
                        var newWidth = startPos.width + pos.x - startPos.x;
                        $el.width(newWidth);
                    }

                    if (opt.resizeHeight) {
                        var newHeight = startPos.height + pos.y - startPos.y;
                        $el.height(newHeight);
                    }

                    if (opt.onDrag)
                        opt.onDrag(e, $el, opt);

                    //console.log('dragging', e, pos, newWidth, newHeight);
                }

                function stopDragging(e) {
                    e.stopPropagation();
                    e.preventDefault();

                    $(document).unbind('mousemove.rsz', opt.dragFunc);
                    $(document).unbind('mouseup.rsz', stopDragging);

                    if (window.Touch || navigator.maxTouchPoints) {
                        $(document).unbind('touchmove.rsz', opt.dragFunc);
                        $(document).unbind('touchend.rsz', stopDragging);
                    }
                    $(document).unbind('selectstart.rsz', noop);

                    // reset changed values
                    $el.css("transition", startTransition);

                    if (opt.onDragEnd)
                        opt.onDragEnd(e, $el, opt);

                    return false;
                }

                function getMousePos(e) {
                    var pos = { x: 0, y: 0, width: 0, height: 0 };
                    if (typeof e.clientX === "number") {
                        pos.x = e.clientX;
                        pos.y = e.clientY;
                    } else if (e.originalEvent.touches) {
                        pos.x = e.originalEvent.touches[0].clientX;
                        pos.y = e.originalEvent.touches[0].clientY;
                    } else
                        return null;

                    return pos;
                }
            });
        };
    })(jQuery,undefined);

There are a few small interesting things to point out in this code.

Turning off Transitions

The first is a small thing I ran into, which was that I needed to turn off transitions for resizing. I had my left panel set up with a width transition so that when the collapse/expand button is triggered the panel opens with a nice ease-in animation. When resizing this becomes a problem because every width change made during the drag gets animated, so the code explicitly disables transitions on the resized element when the drag starts and restores the original value when it ends.

Hooking into Drag Events

If you run into other things that might interfere with resizing you can hook into the three drag event hooks (onDragStart, onDrag, onDragEnd) that are fired as you resize the container. For example, the following code explicitly sets the drag cursor on a container that doesn't use an explicit drag handle when the resize is started, and resets it when the resize is stopped:

    $(".box").resizable({
        onDragStart: function (e, $el, opt) {
            $el.css("cursor", "nwse-resize");
        },
        onDragEnd: function (e, $el, opt) {
            $el.css("cursor", "");
        }
    });

You can return false from onDragStart to indicate you don't want to start dragging.
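As a sketch of cancelling a drag from onDragStart, the handler below only lets the left mouse button or a touch start a resize. `primaryButtonOnly` is a hypothetical name that is not part of the plug-in, and the check assumes jQuery's normalized `e.which` value (1 for the left button).

```javascript
// Hypothetical onDragStart handler: returning false tells the plug-in
// not to start the resize operation.
function primaryButtonOnly(e) {
    // jQuery normalizes e.which to 1 for the left mouse button
    if (e.type === "mousedown" && e.which !== 1)
        return false;
    // touchstart and left-button mousedown fall through and return
    // undefined, which lets the drag proceed
}

// Usage with the plug-in (needs jQuery and jquery-resizable on the page):
// $(".box").resizable({
//     handleSelector: ".win-size-grip",
//     onDragStart: primaryButtonOnly
// });
```

The plug-in compares the return value against false with a strict check, so a handler that returns nothing does not cancel the drag.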

Touch Support

The resizing implementation was surprisingly simple to implement, but getting the touch support to work took a bit of sleuthing. The tricky part is that touch events and mouse events overlap so it's important to separate where each is coming from. In the plug-in the important part is getting the mouse/finger position reliably which requires looking both at the default jQuery normalized mouse properties as well as at the underlying touch events on the base DOM event:

    function getMousePos(e) {
        var pos = { x: 0, y: 0, width: 0, height: 0 };
        if (typeof e.clientX === "number") {
            pos.x = e.clientX;
            pos.y = e.clientY;
        } else if (e.originalEvent.touches) {
            pos.x = e.originalEvent.touches[0].clientX;
            pos.y = e.originalEvent.touches[0].clientY;
        } else
            return null;

        return pos;
    }

It sure would be nice if jQuery could normalize this automatically so that properties like clientX/Y and pageX/Y on jQuery's wrapper event returned the right values for either touch or mouse events, but for now we still have to normalize manually.
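One thing to keep in mind if you reuse this lookup elsewhere: on touchend for a single finger drag the `touches` list is empty, because the lifted finger is reported in `changedTouches` instead. The plug-in doesn't read the position in its end handler, so it isn't affected, but a variant that also handles that case might look like the following. This is a sketch and not the plug-in's code; `getPointerPos` is a hypothetical name, and the events in the usage lines are hand-built stand-ins for jQuery event objects.

```javascript
// Hypothetical variant of the position lookup that also works for touchend.
function getPointerPos(e) {
    // mouse events: clientX/clientY are available on jQuery's event wrapper
    if (typeof e.clientX === "number")
        return { x: e.clientX, y: e.clientY };

    var orig = e.originalEvent || {};

    // prefer active touches, fall back to the touches that just changed
    var list = (orig.touches && orig.touches.length) ? orig.touches : orig.changedTouches;
    if (list && list.length)
        return { x: list[0].clientX, y: list[0].clientY };

    return null;
}

// Hand-built stand-ins for jQuery event objects
var mouseEvent = { clientX: 10, clientY: 20 };
var touchEnd = { originalEvent: { touches: [], changedTouches: [{ clientX: 5, clientY: 7 }] } };

console.log(getPointerPos(mouseEvent)); // { x: 10, y: 20 }
console.log(getPointerPos(touchEnd));   // { x: 5, y: 7 }
```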

Checking for Mouse and or Touch Support

On the same note, the code has to explicitly check for touch support and, if available, bind the various touch events like touchstart, touchmove and touchend, which adds a bit of noise to the otherwise simple code. For example, here's the code that decides whether the touchmove and touchend events need to be hooked:

    if (window.Touch || navigator.maxTouchPoints) {
        $(document).bind('touchmove.rsz', opt.dragFunc);
        $(document).bind('touchend.rsz', stopDragging);
    }

There are a couple of spots like this in the code that make the code less than clean, but… the end result is nice and you can use either mouse or touch to resize the elements.
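Since the same check is repeated when binding and unbinding, one way to cut down on the noise is to pull it into a single helper. This is just a sketch of that idea, not something the plug-in does; `hasTouchSupport` is a hypothetical name, and it takes window-like and navigator-like objects as parameters so it can be exercised outside of a browser.

```javascript
// Hypothetical helper wrapping the plug-in's touch detection check.
// In a page you would call it as hasTouchSupport(window, navigator).
function hasTouchSupport(win, nav) {
    return !!(win.Touch || nav.maxTouchPoints);
}

console.log(hasTouchSupport({ Touch: function () {} }, {}));   // true
console.log(hasTouchSupport({}, { maxTouchPoints: 10 }));      // true
console.log(hasTouchSupport({}, { maxTouchPoints: 0 }));       // false
```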

Arrrggggh! Internet Explorer and Touch

It wouldn't be any fun if there wasn't some freaking problem with IE or Edge, right?

Turns out IE and Edge on Windows weren't working with my original code. I didn't have a decent touch setup on Windows until I finally managed to get my external touch monitor to work in a 3 monitor setup. At least now I can test under this setup. Yay!

Anyway. There are two issues with IE. The first is that it doesn't have a window.Touch object, so checking for touch was simply failing to hook up the other touch events that the plug-in is listening for. Instead you have to look for the navigator.maxTouchPoints property. That was problem #1.

Problem #2 is that IE and Edge have browser level gestures that override element level touch events. Other browsers like Chrome have those too, but they are a bit more lenient in their interference with the document. By default I couldn't get the touchstart event to fire because the browser level gestures override the behavior.

The workaround for this is the touch-action: none CSS property, which stops the browser from monitoring for document level swipes such as the back and forward navigation gestures. This property can be applied to the document, to any container or, as I was happy to see, to the actual drag handle. Applying it only to the drag handle is ideal because it avoids side effects on the rest of the document, such as blocking scrolling, so the code now optionally forces touch-action: none onto the drag handle via the touchActionNone option:

    if (opt.touchActionNone)
        $handle.css("touch-action", "none");

You can try it out here with Edge, IE, Chrome on a touch screen. I don't have a Windows Phone to try with – curious whether that would work.

http://codepen.io/rstrahl/pen/eJZQej

Remember: If you support Touch…

As always if you plan on supporting touch make very sure that you make your drag handles big enough to support my fat fingers. It's no fun to try and grab a 3 point wide drag handle 10 times before you actually get it…

Slide on out

All of this isn't rocket science obviously, but I thought I'd post it since I didn't find an immediate solution to a simple way to implement resizing and this fits the bill nicely. It's only been a week since I created this little plug-in and I've retrofitted a number of applications with sliders and resizable window options where it makes sense which is a big win for me. Hopefully some of you might find this useful as well.

Resources

© Rick Strahl, West Wind Technologies, 2005-2015
